16S rRNA Sequencing Data Analysis: A Complete Beginner's Guide for Biomedical Researchers

Abstract

This comprehensive guide demystifies 16S rRNA sequencing analysis for researchers and drug development professionals. We cover the foundational concepts of microbial community profiling, provide a step-by-step walkthrough of modern bioinformatics pipelines (from raw reads to taxonomic tables), address common pitfalls and optimization strategies for robust results, and critically evaluate best practices for data validation and interpretation. Learn how to transform sequencing data into actionable insights for microbiome studies in clinical and therapeutic contexts.

What is 16S rRNA Sequencing? Unlocking the Microbial Universe for Drug Discovery

Within the foundational thesis of 16S rRNA sequencing data analysis, the selection of the molecular target is paramount. The 16S ribosomal RNA (rRNA) gene, encoding the RNA component of the 30S subunit of the prokaryotic ribosome, has served as the cornerstone of microbial phylogeny and taxonomy for decades. Its adoption as the "gold standard" is not accidental but is rooted in a convergence of evolutionarily conserved and variable properties, coupled with practical experimental utility. This guide delineates the technical rationale for its preeminence, current experimental paradigms, and essential analytical resources.

Core Properties of the 16S rRNA Gene

The gene's utility stems from its unique mosaic of functional constraint and evolutionary divergence, summarized in the table below.

Table 1: Key Properties of the 16S rRNA Gene Enabling Phylogenetic Analysis

| Property | Technical Description | Functional Implication for Phylogeny |

|---|---|---|

| Ubiquitous & Essential | Present in all bacteria and archaea; fundamental for protein synthesis. | Provides a universal phylogenetic framework for comparing all prokaryotes. |

| Functionally Constrained | High conservation in secondary and tertiary structure due to ribosome function. | Ensures homology, allowing for meaningful sequence alignment across vast evolutionary distances. |

| Mosaic of Conserved and Variable Regions | Contains nine "hypervariable regions" (V1-V9) interspersed with highly conserved regions. | Conserved regions enable universal PCR priming; variable regions provide the phylogenetic signature. |

| Appropriate Length | ~1,550 base pairs in E. coli. | Long enough for robust phylogenetic inference, short enough for efficient sequencing. |

| Low Horizontal Gene Transfer (HGT) | As part of the core ribosomal operon, it is less subject to HGT than many protein-coding genes. | Evolutionary history reflects organismal lineage rather than sporadic gene acquisition. |

| Large Reference Database | Curated repositories like SILVA, Greengenes, and RDP contain millions of sequences. | Enables robust taxonomic assignment and novel sequence classification. |

Experimental Protocol: Standard 16S rRNA Gene Amplicon Sequencing

The prevailing method for community profiling involves amplifying and sequencing hypervariable regions.

Detailed Protocol:

1. Sample Lysis and DNA Extraction:

- Method: Use bead-beating (mechanical disruption) combined with chemical lysis (e.g., SDS, proteinase K) for robust breaking of diverse cell walls (Gram-positive, Gram-negative, spores).

- Purification: Clean DNA using spin-column or magnetic bead-based kits to remove PCR inhibitors (humic acids, salts, proteins).

- Quality Control: Quantify DNA using fluorometry (e.g., Qubit) and assess purity via 260/280 & 260/230 nm ratios.

2. PCR Amplification of Target Region:

- Primer Design: Use broad-coverage "universal" primer pairs flanking a specific hypervariable region (e.g., V3-V4: 341F/806R; V4: 515F/806R). Primer tails often include Illumina sequencing adapters.

- Reaction Setup: Use a high-fidelity, proofreading polymerase to minimize PCR errors. Include negative (no-template) controls.

- Cycling Conditions: Initial denaturation (95°C, 3 min); 25-35 cycles of: denaturation (95°C, 30s), annealing (55°C, 30s), extension (72°C, 60s); final extension (72°C, 5 min).

3. Amplicon Purification & Library Preparation:

- Clean-up: Remove primer dimers and non-specific products using magnetic beads (e.g., AMPure XP).

- Indexing PCR (Optional): If adapters were not included in the first PCR, a second, limited-cycle PCR adds dual indices and full sequencing adapters.

- Pooling & Normalization: Quantify individual libraries, normalize to equimolar concentration, and pool.

4. Sequencing:

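- Platform: Most commonly performed on the Illumina MiSeq using paired-end chemistry (2x250 bp or 2x300 bp); other Illumina instruments such as iSeq or NovaSeq can be used where their supported read lengths cover the chosen amplicon.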

5. Bioinformatics Analysis:

- Demultiplexing: Assign reads to samples based on unique index combinations.

- Processing: Use pipelines (QIIME 2, mothur, DADA2) for quality filtering, denoising, chimera removal, and amplicon sequence variant (ASV) or operational taxonomic unit (OTU) clustering.

- Taxonomy Assignment: Classify sequences against reference databases (SILVA, GTDB).

- Downstream Analysis: Diversity analysis (alpha/beta), differential abundance testing, and phylogenetic tree construction.

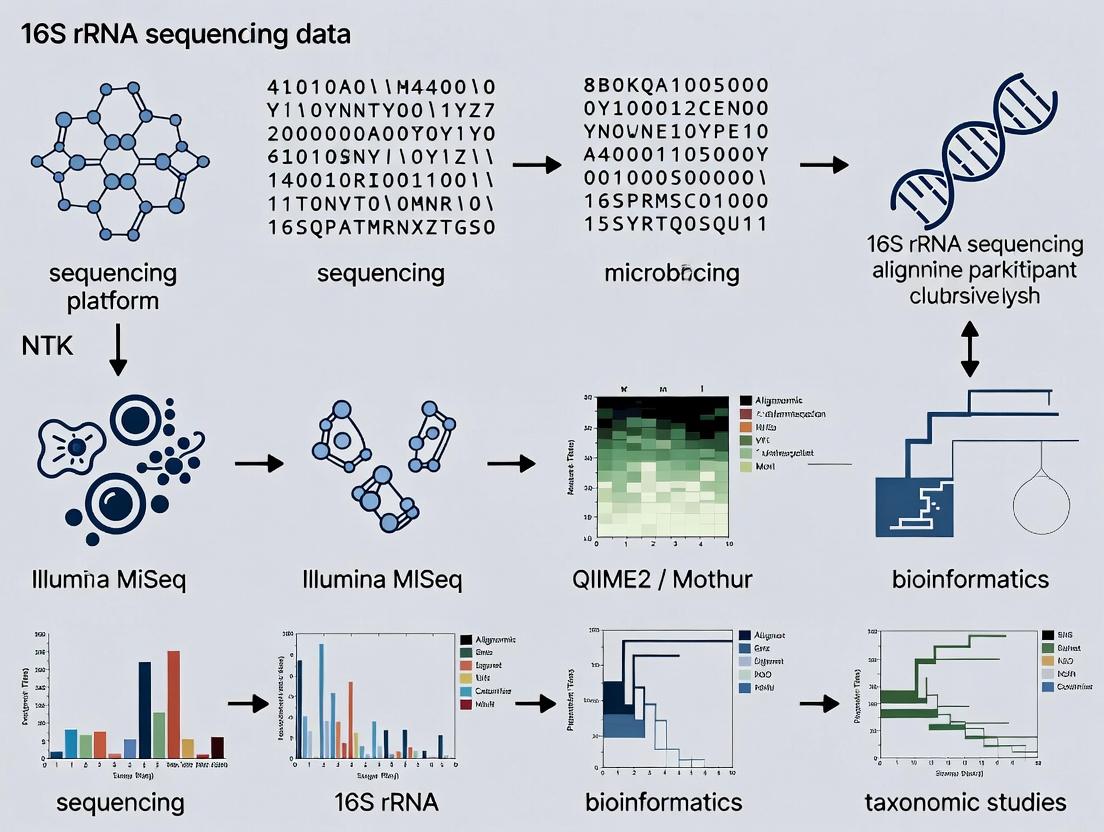

Diagram Title: 16S rRNA Amplicon Sequencing Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Reagents and Kits for 16S rRNA Sequencing Studies

| Item Category | Specific Example(s) | Function & Rationale |

|---|---|---|

| DNA Extraction Kit | DNeasy PowerSoil Pro Kit (QIAGEN), MagMAX Microbiome Kit (Thermo Fisher) | Standardized, high-yield isolation of inhibitor-free microbial DNA from complex samples (soil, stool). |

| High-Fidelity Polymerase | Q5 Hot Start High-Fidelity (NEB), KAPA HiFi HotStart ReadyMix (Roche) | Minimizes PCR errors during amplicon generation, crucial for accurate ASV calling. |

| Universal 16S Primers | 341F/806R (V3-V4), 515F/806R (V4), 27F/1492R (full-length) | Broad-coverage primers target conserved regions to amplify the desired hypervariable region from diverse taxa. |

| Library Prep Kit | Illumina 16S Metagenomic Sequencing Library Prep, Nextera XT Index Kit | Streamlines attachment of sequencing adapters and dual indices for multiplexing. |

| Magnetic Beads | AMPure XP Beads (Beckman Coulter) | For size-selective purification of PCR amplicons and final library clean-up. |

| Quantification Reagents | Qubit dsDNA HS Assay (Thermo Fisher), Library Quantification Kit (KAPA) | Accurate fluorometric quantification of DNA and final libraries for precise pooling. |

| Positive Control | ZymoBIOMICS Microbial Community Standard (Zymo Research) | Defined mock community of bacteria to validate entire workflow and bioinformatic pipeline performance. |

| Negative Control | Nuclease-Free Water | Identifies contamination introduced from reagents or laboratory environment. |

Limitations and Complementary Technologies

Although widely used, 16S analysis has constraints. It provides a taxonomic profile, not a direct measurement of functional capacity. Resolution is often limited to genus level, and PCR biases can distort abundance estimates. For higher resolution (strain-level) or functional insight, complementary technologies are employed, as summarized below.

Table 3: Complementary Microbial Community Analysis Methods

| Method | Target | Key Advantage Over 16S | Primary Limitation |

|---|---|---|---|

| Shotgun Metagenomics | All genomic DNA | Provides functional gene catalog and strain-level resolution; no PCR bias. | Higher cost, complexity, and host DNA contamination in low-biomass samples. |

| Metatranscriptomics | Total RNA (mRNA) | Reveals community-wide gene expression and active metabolic pathways. | Technically challenging, RNA instability, high cost. |

| Whole-Genome Sequencing (Isolates) | Pure culture genome | Gold standard for defining species/strain and precise functional annotation. | Requires culturing, which is not possible for many microbes. |

Diagram Title: Placing 16S rRNA Sequencing in the Methodological Landscape

The 16S rRNA gene remains the "gold standard" marker for microbial phylogeny because of its evolutionary properties and practical advantages. It provides a robust, cost-effective first step in most microbiome studies, answering the question "who is there?", typically down to genus level. Mastering its analysis is therefore fundamental in microbial ecology. While newer methods offer deeper functional insights, they build upon the phylogenetic scaffold that 16S sequencing provides, which supports its continued central role in research and drug development targeting microbial communities.

This guide details the core workflow of a 16S ribosomal RNA (rRNA) gene amplicon study, a fundamental technique in microbial ecology. Within the broader thesis on 16S rRNA sequencing data analysis basics, this document serves as the operational blueprint, connecting experimental design to the generation of interpretable data. The process transforms a biological sample into ecological insights, relying on a series of standardized yet evolving wet-lab and computational steps.

Core Workflow and Methodologies

Experimental Workflow Diagram

Diagram 1: 16S Amplicon Study Core Workflow

Detailed Experimental Protocols

Protocol 1: PCR Amplification of Hypervariable Regions

- Objective: To amplify target 16S rRNA gene regions (e.g., V3-V4) for sequencing.

- Reagents: Template genomic DNA, region-specific primers with Illumina adapter overhangs (e.g., 341F/806R for V3-V4), high-fidelity DNA polymerase (e.g., Q5 Hot Start), dNTPs, PCR-grade water.

- Method:

- Prepare a 25-50 µL reaction mix per sample.

- Use a touchdown thermocycling protocol to minimize primer-dimer formation and improve specificity: Initial denaturation at 98°C for 30 sec; 25 cycles of: denaturation (98°C, 10 sec), annealing (start 65°C, decreasing 0.5°C per cycle to 55°C, 30 sec), extension (72°C, 20 sec); final extension at 72°C for 5 min.

- Verify amplification success and size (~550 bp for V3-V4) via agarose gel electrophoresis.

- Notes: Use a minimal number of cycles to reduce chimera formation. Include negative (no-template) controls.
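The touchdown profile in Protocol 1 can be written out cycle by cycle to check how many cycles run at each annealing temperature. The following is a minimal sketch, assuming the annealing temperature is held at the 55°C floor for any cycles that remain once the floor is reached (the protocol text does not state this explicitly); the function name is illustrative.

```python
def touchdown_annealing_schedule(start_c=65.0, end_c=55.0, step_c=0.5, total_cycles=25):
    """Return the annealing temperature (in °C) for each PCR cycle."""
    temps = []
    current = start_c
    for _ in range(total_cycles):
        temps.append(current)
        # Step down after each cycle, then hold once the floor is reached
        # (assumption: remaining cycles stay at the floor temperature).
        current = max(end_c, current - step_c)
    return temps


schedule = touchdown_annealing_schedule()
cycles_at_floor = schedule.count(55.0)
print(f"cycle 1: {schedule[0]} °C, cycle 21: {schedule[20]} °C")
print(f"cycles held at 55 °C: {cycles_at_floor}")
```

With the stated parameters the floor is reached at cycle 21, so under this assumption only the last five of the 25 cycles run at 55°C.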

Protocol 2: Illumina Library Preparation & Indexing

- Objective: To attach dual indices and sequencing adapters to amplicons.

- Reagents: Purified PCR product, Nextera XT Index Kit v2, library normalization beads.

- Method:

- Index PCR: Use a limited-cycle (8 cycles) PCR to attach unique dual indices (i5 and i7) and full adapter sequences to each sample's amplicon.

- Purification: Clean up indexed libraries using magnetic bead-based purification (e.g., AMPure XP beads) to remove primer dimers and short fragments.

- Quantification & Normalization: Quantify libraries using fluorometry (e.g., Qubit dsDNA HS Assay). Normalize libraries to equimolar concentration (e.g., 4 nM).

- Pooling & Final QC: Combine normalized libraries into a single sequencing pool. Validate pool size and concentration using a Bioanalyzer or TapeStation.

- Notes: Accurate normalization is critical for even sequencing depth across samples.
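The normalization step amounts to a unit conversion followed by a dilution calculation. The sketch below assumes the common approximation of 660 g/mol per base pair of double-stranded DNA; the example concentration (10 ng/µL), mean fragment size (630 bp), and 20 µL final volume are hypothetical values, not figures from the protocol.

```python
AVG_MASS_PER_BP = 660.0  # g/mol per base pair of dsDNA, a common approximation


def ng_per_ul_to_nm(conc_ng_per_ul, mean_fragment_bp):
    """Convert a dsDNA concentration from ng/µL to nM."""
    return conc_ng_per_ul * 1e6 / (AVG_MASS_PER_BP * mean_fragment_bp)


def dilution_volumes(conc_nm, target_nm=4.0, final_volume_ul=20.0):
    """Return (library µL, diluent µL) needed to reach target_nm (C1*V1 = C2*V2)."""
    if conc_nm < target_nm:
        raise ValueError("library is below the target concentration")
    library_ul = target_nm * final_volume_ul / conc_nm
    return library_ul, final_volume_ul - library_ul


# Hypothetical library: 10 ng/µL by fluorometry, 630 bp mean fragment size.
conc_nm = ng_per_ul_to_nm(10.0, 630)
library_ul, diluent_ul = dilution_volumes(conc_nm)
print(f"{conc_nm:.1f} nM -> {library_ul:.2f} µL library + {diluent_ul:.2f} µL diluent")
```

Libraries that fall below the target concentration raise an error here rather than being silently pooled, since under-represented libraries are a common cause of uneven sequencing depth.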

The Scientist's Toolkit: Research Reagent Solutions

| Item/Category | Function & Explanation |

|---|---|

| DNA Extraction Kits (e.g., DNeasy PowerSoil, formerly sold by MO BIO) | Standardized, efficient lysis of diverse microbial cells (Gram+, Gram-, spores) and inhibitor removal from complex matrices like soil or feces. |

| High-Fidelity Polymerase (e.g., Q5, Phusion) | Essential for accurate amplification with low error rates, reducing sequence artifacts in final data. |

| 16S rRNA Gene Primers (e.g., 27F/338R, 341F/806R) | Target conserved regions flanking hypervariable zones; choice determines taxonomic resolution and amplicon length. |

| Indexing Kit (e.g., Illumina Nextera XT) | Provides unique dual barcodes (indices) to label each sample's amplicons, enabling multiplexing of hundreds of samples in one run. |

| Magnetic Bead Clean-up Kits (e.g., AMPure XP) | Size-selective purification of PCR products, removing primers, dimers, and non-specific fragments. |

| Fluorometric DNA Quantification (e.g., Qubit) | Accurate, dsDNA-specific quantification that is far less affected by contaminants such as RNA or salts than absorbance-based measurement. |

| Bioanalyzer/TapeStation | Microfluidic capillary electrophoresis for precise assessment of library fragment size distribution and quality. |

| Positive Control Mock Community (e.g., ZymoBIOMICS) | Defined mix of known bacterial genomes; a critical control for DNA extraction, PCR bias, and bioinformatic pipeline accuracy. |

Data Analysis Pathway & Quantitative Data

Bioinformatic Data Processing Diagram

Diagram 2: 16S Data Analysis Pipeline

Table 1: Critical Parameters and Their Impact on Data

| Analysis Stage | Key Parameter | Typical Value/Range | Impact on Result |

|---|---|---|---|

| Sequencing | Read Depth (per sample) | 20,000 - 100,000 reads | Lower depth misses rare taxa; excessive depth yields diminishing returns. |

| Quality Filtering | Quality Score (Q) Threshold | Q ≥ 20, 25, or 30 | Higher threshold reduces errors but discards more data. |

| Denoising (DADA2) | maxEE (max expected errors) | 1-2 for forward/reverse | Looser filter retains more reads but increases erroneous sequences. |

| Clustering (OTUs) | Sequence Similarity Threshold | 97% (species-level) | 99% for finer resolution; 95% for genus-level. Defines taxonomic unit. |

| Taxonomy | Reference Database | SILVA, Greengenes, RDP | Database choice and version directly influence taxonomic labels. |

| Analysis | Rarefaction Depth | Often 10,000-30,000 reads | Normalizes sampling effort; choice can exclude samples with low counts. |

| Analysis | Alpha Diversity Metric | Shannon, Faith's PD, Observed ASVs | Shannon weighs richness & evenness; Faith's PD incorporates phylogeny. |
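The rarefaction row in the table describes random subsampling of every sample to the same depth, with samples below that depth dropped. A minimal sketch of that idea, assuming sampling without replacement and a fixed random seed for reproducibility; the sample counts are hypothetical, and expanding counts into a read pool is only practical for small examples.

```python
import random


def rarefy(counts, depth, seed=0):
    """Subsample a {feature: count} dict to `depth` reads without replacement.

    Returns None when the sample has fewer than `depth` reads, mirroring the
    exclusion of shallow samples.
    """
    total = sum(counts.values())
    if total < depth:
        return None
    # Expand counts into one entry per read (fine for a sketch, costly at scale).
    pool = [feature for feature, n in counts.items() for _ in range(n)]
    picked = random.Random(seed).sample(pool, depth)
    rarefied = {}
    for feature in picked:
        rarefied[feature] = rarefied.get(feature, 0) + 1
    return rarefied


sample = {"ASV1": 50, "ASV2": 30, "ASV3": 20}  # hypothetical counts
rarefied = rarefy(sample, 40)
shallow = rarefy({"ASV1": 5}, 40)
print(rarefied, shallow)
```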

From Data to Insight: Statistical and Ecological Analysis

The final phase involves interpreting the generated feature table, taxonomy, and phylogeny.

Core Analyses:

- Alpha Diversity: Assesses within-sample richness and evenness. Metrics (see Table 1) are compared between sample groups using non-parametric tests (Kruskal-Wallis) or linear models.

- Beta Diversity: Measures between-sample compositional differences. Computed using distance matrices (e.g., Unweighted/Weighted UniFrac, Bray-Curtis). Visualized via PCoA ordination plots and tested for group significance with PERMANOVA.

- Differential Abundance: Identifies taxa significantly associated with experimental conditions. Use specialized methods (e.g., ANCOM-BC, DESeq2, LEfSe) designed for sparse, overdispersed count data rather than standard t-tests; of these, ANCOM-BC explicitly addresses compositionality.

- Functional Prediction: Infers potential metabolic capabilities from 16S data using tools like PICRUSt2 or Tax4Fun, which map taxonomy to reference genomes. These are predictions, not measurements.
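Two of the metrics named above are simple enough to compute by hand, which helps when checking pipeline output. The sketch below implements the Shannon index and Bray-Curtis dissimilarity in plain Python; it uses the natural logarithm for Shannon (some tools report base 2 instead), and the count vectors are hypothetical.

```python
import math


def shannon(counts):
    """Shannon index H = -sum(p * ln p) over features with non-zero counts."""
    total = sum(counts)
    props = [c / total for c in counts if c > 0]
    return -sum(p * math.log(p) for p in props)


def bray_curtis(a, b):
    """Bray-Curtis dissimilarity between two count vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must cover the same features")
    numerator = sum(abs(x - y) for x, y in zip(a, b))
    return numerator / (sum(a) + sum(b))


even = [10, 10, 10, 10]      # four equally abundant features
dominated = [37, 1, 1, 1]    # same richness, low evenness
print(round(shannon(even), 3), round(shannon(dominated), 3))
print(bray_curtis([10, 20, 30], [20, 20, 20]))
```

The two hypothetical samples have the same richness, yet the evenly distributed one scores higher on Shannon, which is the richness-plus-evenness behaviour described in the parameter table.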

This workflow, from rigorous wet-lab protocols to statistically-aware bioinformatics, forms the foundation for generating robust, reproducible insights into microbial community structure and dynamics, directly feeding into downstream hypothesis generation and validation in drug development and biomedical research.

The analysis of microbial communities via 16S rRNA gene sequencing is foundational to modern microbial ecology, human microbiome research, and drug development. A core analytical step involves clustering or differentiating sequence reads into biologically meaningful units. The evolution from Operational Taxonomic Units (OTUs) to Amplicon Sequence Variants (ASVs), also known as Zero-radius OTUs (ZOTUs), represents a paradigm shift in resolution, reproducibility, and analytical precision. This whitepaper details these key concepts within the broader thesis of 16S rRNA sequencing data analysis basics.

Core Concepts & Comparative Analysis

Definitions

- Operational Taxonomic Unit (OTU): A cluster of sequencing reads grouped based on a predefined sequence similarity threshold (typically 97%), intended to approximate a species-level grouping. This method inherently assumes that sequences differing by ≤3% belong to the same biological taxon.

- Amplicon Sequence Variant (ASV) / Zero-radius OTU (ZOTU): A biologically exact sequence derived from high-resolution error-correcting algorithms. ASVs are resolved without clustering by global similarity thresholds, distinguishing single-nucleotide differences and representing discrete biological sequences.

Quantitative Comparison Table

The following table summarizes the key differences between OTU and ASV approaches.

Table 1: Comparative Analysis of OTU vs. ASV Methodologies

| Feature | Operational Taxonomic Units (OTUs) | Amplicon Sequence Variants (ASVs/ZOTUs) |

|---|---|---|

| Basis of Definition | Clustering by percent similarity (e.g., 97%). | Exact biological sequences; error-corrected reads. |

| Primary Algorithm Type | Heuristic clustering (e.g., greedy, centroid-based). | Denoising or model-based error correction (e.g., DADA2, UNOISE3, Deblur). |

| Resolution | Low. Groups sequences with up to 3% divergence. | High. Distinguishes single-nucleotide differences. |

| Reproducibility | Low. Results vary with clustering algorithm, order of input, and similarity threshold. | High. Deterministic; same input yields identical ASVs across runs. |

| Handling of Sequencing Errors | Errors are clustered with true biological sequences, inflating diversity. | Errors are explicitly modeled and removed prior to variant calling. |

| Cross-Study Comparison | Difficult due to dataset-specific clustering. | Straightforward, as ASVs are comparable across studies. |

| Computational Demand | Generally lower for clustering itself, but may require subsampling. | Higher during denoising; chimera removal is still performed on the inferred variants. |

| Interpretation | Approximates species or genus-level groups. | Can represent strain-level variation, actual DNA sequences. |

Detailed Methodological Protocols

Classic 97% OTU Clustering Protocol (QIIME1/MOTHUR)

This protocol outlines the traditional, reference-based 97% OTU picking strategy.

1. Preprocessing: Quality filter raw paired-end reads (e.g., Trimmomatic). Merge paired ends (e.g., USEARCH, FLASH). Demultiplex sequences.

2. Chimera Removal: Identify and remove chimeric sequences using UCHIME (in reference or de novo mode).

3. OTU Clustering: Pick OTUs against a reference database (e.g., Greengenes, SILVA) at 97% identity using a closed-reference algorithm (e.g., UCLUST, VSEARCH). Alternatively, perform de novo clustering on the entire dataset.

4. Representative Sequence Selection: Select the most abundant sequence within each cluster as the OTU representative.

5. Taxonomy Assignment: Assign taxonomy to each OTU representative using a classifier (e.g., RDP Classifier, BLAST) against a reference database.

6. OTU Table Construction: Generate a sample × OTU count matrix (BIOM format) by mapping all quality-filtered reads back to the OTU representatives.
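To make the clustering step concrete, here is a toy sketch of greedy, centroid-based de novo clustering at a 97% threshold. It is not how UCLUST or VSEARCH are implemented: identity is computed as the fraction of matching positions between equal-length sequences (an assumption that sidesteps alignment), and the sequences and abundances are hypothetical.

```python
def identity(a, b):
    """Fraction of matching positions; assumes equal-length, pre-aligned sequences."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length in this sketch")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def greedy_cluster(seq_counts, threshold=0.97):
    """Cluster {sequence: abundance}; returns {centroid: [member sequences]}."""
    clusters = {}
    # Visit sequences from most to least abundant so that abundant sequences
    # become centroids (ties broken alphabetically for determinism).
    for seq in sorted(seq_counts, key=lambda s: (-seq_counts[s], s)):
        for centroid in clusters:
            if identity(seq, centroid) >= threshold:
                clusters[centroid].append(seq)
                break
        else:
            clusters[seq] = [seq]  # no centroid close enough: start a new OTU
    return clusters


base = "ACGT" * 25                # 100 nt hypothetical amplicon
near = "T" + base[1:]             # 1 mismatch  -> 99% identity to base
far = "TTTTTTTT" + base[8:]       # 6 mismatches -> 94% identity to base
clusters = greedy_cluster({base: 100, near: 10, far: 5})
print({c[:8]: len(members) for c, members in clusters.items()})
```

Because each sequence joins the first centroid it matches, changing the processing order can change the clusters, which is one source of the reproducibility concerns raised in the comparison table.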

ASV Inference Protocol (DADA2 Pipeline)

This protocol details a standard denoising workflow for inferring exact ASVs from Illumina data using the DADA2 algorithm.

1. Filter and Trim: Trim reads based on quality profiles. Filter sequences based on expected errors (maxEE parameter) and length.

2. Learn Error Rates: Model the error rates specific to the sequencing run using a machine-learning algorithm on a subset of data.

3. Dereplication: Combine identical reads into unique sequences with abundance counts.

4. Core Denoising: Apply the DADA2 algorithm to the dereplicated data. This corrects errors by using the error model to distinguish true biological sequences from erroneous ones, outputting a set of ASVs.

5. Merge Paired Reads: Merge forward and reverse reads of ASVs.

6. Remove Chimeras: Construct a sequence × sample abundance table and remove chimeric sequences identified de novo.

7. Taxonomy Assignment: Assign taxonomy to final ASVs using a classifier (e.g., the RDP naïve Bayesian classifier or IDTAXA) against a reference database.
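Steps 1 and 3 rest on two small calculations: the expected number of errors in a read, which is the sum of per-base error probabilities implied by its Phred scores, and the collapsing of identical reads into counts. The sketch below illustrates both in plain Python. It is not DADA2's implementation; it assumes Phred+33 quality encoding, and the reads are hypothetical.

```python
from collections import Counter


def expected_errors(quality_string, phred_offset=33):
    """Sum of per-base error probabilities, where P(error) = 10 ** (-Q / 10)."""
    return sum(10 ** (-(ord(ch) - phred_offset) / 10) for ch in quality_string)


def filter_and_dereplicate(reads, max_ee=2.0):
    """Keep reads with expected errors <= max_ee, then count identical sequences."""
    kept = [seq for seq, qual in reads if expected_errors(qual) <= max_ee]
    return Counter(kept)


# Hypothetical (sequence, quality) pairs: 'I' encodes Q40 and '!' encodes Q0.
reads = [
    ("ACGT", "IIII"),
    ("ACGT", "IIII"),
    ("ACGA", "IIII"),
    ("ACGG", "!!!!"),  # four expected errors, so it is discarded at max_ee=2
]
unique_counts = filter_and_dereplicate(reads)
print(unique_counts)
```

Raising `max_ee` in this sketch would let the low-quality read through, which is the trade-off the parameter table describes for a looser filter.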

Visualizations

Conceptual Workflow Comparison

Diagram Title: OTU vs. ASV Analysis Workflow Comparison

Resolution & Reproducibility Logic

Diagram Title: Logic of the Shift from OTUs to ASVs

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Reagents and Materials for 16S rRNA Amplicon Sequencing Studies

| Item | Function/Brief Explanation |

|---|---|

| Primers (e.g., 515F/806R) | Target hypervariable regions (e.g., V4) of the bacterial/archaeal 16S rRNA gene for PCR amplification. |

| High-Fidelity DNA Polymerase | Ensures accurate amplification with low error rates during PCR, critical for ASV inference. |

| Mock Microbial Community | Defined mix of genomic DNA from known strains. Serves as a positive control for evaluating accuracy, precision, and bias in the wet-lab and bioinformatics pipeline. |

| Magnetic Bead-based Cleanup Kits | For post-PCR purification to remove primers, dNTPs, and enzymes prior to library quantification and sequencing. |

| Index/Barcode Oligonucleotides | Unique dual indices attached to amplicons via a second PCR to allow multiplexing of samples in a single sequencing run. |

| Sequencing Standards (e.g., PhiX) | Spiked into runs for Illumina platforms to improve base calling during sequencing of low-diversity amplicon libraries. |

| Reference Databases (SILVA, Greengenes, RDP) | Curated collections of aligned 16S rRNA sequences with taxonomy. Used for taxonomy assignment of OTU/ASV sequences. |

| Bioinformatics Pipelines (QIIME2, mothur, DADA2, USEARCH) | Software suites providing modular workflows for processing raw sequencing data into OTUs/ASVs and performing downstream analyses. |

This whitepaper deconstructs the primary outputs of 16S rRNA gene amplicon sequencing, a foundational method in microbial ecology and microbiomics. The broader thesis of the associated research is that rigorous interpretation of these three core data objects—the Feature Table, Taxonomy Assignment, and Phylogenetic Tree—is critical for generating biologically meaningful insights from microbial community data. Mastery of these outputs enables researchers and drug development professionals to formulate and test hypotheses about microbiome composition, function, and dynamics in health, disease, and therapeutic intervention.

The Core Outputs: Definitions and Interrelationships

Feature Table (Amplicon Sequence Variant or OTU Table)

The Feature Table is a quantitative, sample-by-feature matrix that forms the bedrock of analysis. A "feature" is typically an Amplicon Sequence Variant (ASV) or an Operational Taxonomic Unit (OTU), representing a unique biological sequence inferred to originate from a distinct microbial organism or genotype.

- ASV: An exact, error-corrected sequence read. Offers higher resolution and reproducibility.

- OTU: A cluster of sequences grouped by a similarity threshold (e.g., 97%). A more traditional, less precise method.

The table's cells contain the frequency (count) of each feature in each sample. It is the primary input for diversity and differential abundance analyses.
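As a concrete picture of this data structure, the sketch below assembles a small sample-by-feature matrix from per-sample counts and converts one row to relative abundances. The sample and feature identifiers are hypothetical, and real pipelines store this table in BIOM or QIIME 2 artifact format rather than nested lists.

```python
def build_feature_table(sample_counts):
    """Turn {sample: {feature: count}} into (samples, features, matrix)."""
    samples = sorted(sample_counts)
    features = sorted({f for counts in sample_counts.values() for f in counts})
    # Features absent from a sample get an explicit zero.
    matrix = [[sample_counts[s].get(f, 0) for f in features] for s in samples]
    return samples, features, matrix


def relative_abundance(row):
    """Scale one sample's counts so they sum to 1 (all zeros if the row is empty)."""
    total = sum(row)
    return [c / total if total else 0.0 for c in row]


sample_counts = {  # hypothetical denoising output
    "S1": {"ASV_a": 120, "ASV_b": 30},
    "S2": {"ASV_b": 50, "ASV_c": 50},
}
samples, features, matrix = build_feature_table(sample_counts)
print(features)
for name, row in zip(samples, matrix):
    print(name, row, relative_abundance(row))
```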

Table 1: Key Characteristics of ASVs vs. OTUs

| Characteristic | Amplicon Sequence Variant (ASV) | Operational Taxonomic Unit (OTU) |

|---|---|---|

| Definition | Exact biological sequence (single nucleotide resolution). | Cluster of sequences at a defined % similarity (e.g., 97%). |

| Resolution | High, enables strain-level discrimination. | Lower, species to genus level. |

| Methodology | Error-correction via DADA2, Deblur, UNOISE. | Clustering via VSEARCH, USEARCH, CD-HIT. |

| Reproducibility | High; results are consistent across runs. | Variable; depends on clustering algorithm/parameters. |

| Computational Demand | Higher. | Lower. |

Taxonomy Assignment

This is the process of labeling each feature (ASV/OTU) with a taxonomic classification (e.g., Kingdom, Phylum, Class, Order, Family, Genus, Species). Assignments are made by comparing feature sequences to reference databases using classification algorithms.

Key Reference Databases:

- SILVA: Comprehensive, curated database for ribosomal RNA genes.

- Greengenes: 16S-specific database, now less frequently updated.

- RDP (Ribosomal Database Project): Includes a robust Naïve Bayesian classifier.

- NCBI RefSeq: Broad and extensive, but not specific to 16S rRNA genes.

Table 2: Common Taxonomy Classifiers and Databases

| Classifier | Principle | Common Paired Database | Typical Confidence Threshold |

|---|---|---|---|

| QIIME 2's feature-classifier | Machine learning (sklearn) on extracted reference reads. | SILVA, Greengenes | N/A (provides confidence per assignment) |

| DADA2's assignTaxonomy | Naïve Bayesian Classifier (RDP method). | SILVA, RDP | ≥80% recommended |

| vsearch --sintax | SINTAX algorithm, based on k-mer matching. | SILVA | ≥0.8 confidence score |

| BLAST+ | Local sequence alignment heuristic. | NCBI nt | ≥97% identity, ≥90% query coverage |
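A confidence threshold is typically applied rank by rank: the lineage is kept down to the deepest rank whose confidence still meets the cutoff, and everything below it is left unassigned. The sketch below shows that logic in isolation, assuming per-rank confidences are already available; the lineage and confidence values are hypothetical, and individual classifiers differ in how they report and apply these scores.

```python
RANKS = ["Kingdom", "Phylum", "Class", "Order", "Family", "Genus", "Species"]


def truncate_lineage(lineage, confidences, threshold=0.8):
    """Keep (rank, name) pairs until the first rank below the confidence threshold."""
    kept = []
    for rank, name, conf in zip(RANKS, lineage, confidences):
        if conf < threshold:
            break  # this rank and everything deeper stays unassigned
        kept.append((rank, name))
    return kept


# Hypothetical classifier output for one ASV.
lineage = ["Bacteria", "Firmicutes", "Bacilli", "Lactobacillales",
           "Lactobacillaceae", "Lactobacillus", "Lactobacillus casei"]
confidences = [1.0, 1.0, 0.99, 0.98, 0.95, 0.85, 0.42]
assigned = truncate_lineage(lineage, confidences)
print(assigned[-1])
```

In this example the species call falls below 0.8, so the feature is reported at genus level, consistent with the genus-level resolution typical of short 16S amplicons.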

Phylogenetic Tree

A branching diagram that represents the evolutionary relationships among the features in the Feature Table. It is constructed based on sequence similarity of the 16S rRNA gene. The tree is essential for analyses that incorporate evolutionary history, such as:

- Phylogenetic Diversity Metrics: Faith's PD.

- UniFrac Distances: Measures community dissimilarity weighted by phylogenetic divergence (weighted UniFrac) or just presence/absence along branches (unweighted UniFrac).
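Faith's PD can be illustrated on a toy tree: it is the total branch length spanned by the features observed in a sample. The sketch below assumes the rooted variant, in which the path from each observed tip up to the root is included, and represents the tree as a hypothetical mapping from each node to its parent and branch length.

```python
def faith_pd(tree, observed_tips):
    """Sum branch lengths on the paths from observed tips to the root.

    tree maps node -> (parent, branch_length); the root has parent None.
    Each branch is counted once, however many tips share it.
    """
    visited = set()
    total = 0.0
    for tip in observed_tips:
        node = tip
        while node is not None and node not in visited:
            parent, length = tree[node]
            visited.add(node)
            total += length
            node = parent
    return total


# Hypothetical rooted tree: ((A:0.25, B:0.75)n1:0.5, C:1.0)root
tree = {
    "root": (None, 0.0),
    "n1": ("root", 0.5),
    "A": ("n1", 0.25),
    "B": ("n1", 0.75),
    "C": ("root", 1.0),
}
print(faith_pd(tree, ["A", "B"]), faith_pd(tree, ["A", "C"]))
```

Two samples with the same number of features can therefore differ in PD: here the sample containing the distantly related A and C scores higher than the one containing the sister tips A and B.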

Experimental Protocol: From Raw Sequences to Core Outputs

Protocol Title: Standardized QIIME 2 Pipeline for 16S rRNA Analysis

This protocol outlines the generation of all three core outputs from demultiplexed paired-end FASTQ files.

1. Demultiplexing & Primer Removal: (If not already done) Use q2-demux or cutadapt to assign reads to samples and remove sequencing adapters and PCR primers.

2. Import Data: Import data into QIIME 2 artifact format (qiime tools import).

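3. Denoising & Feature Table Generation (DADA2): Denoise the imported paired-end reads with the q2-dada2 plugin, which performs quality filtering, denoising, read merging, and chimera removal.

Outputs: table.qza (Feature Table) and rep-seqs.qza (representative sequences for each feature).

4. Taxonomy Assignment: Classify the representative sequences against a reference database with a trained classifier (see Table 2) to produce taxonomy.qza.

5. Phylogenetic Tree Construction: Align the representative sequences and infer a rooted tree for phylogeny-aware diversity metrics.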

Final Outputs: table.qza (Feature Table), taxonomy.qza (Taxonomy Assignment), rooted-tree.qza (Phylogenetic Tree).
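For orientation, the three core steps can be expressed as QIIME 2 command lines. The sketch below only assembles and prints the commands from Python; it does not run them. The action and option names reflect recent QIIME 2 releases as best understood here and should be checked against `--help` for the installed version. The input names `demux.qza` and `classifier.qza`, the intermediate output names, and the truncation lengths are placeholders, not values from this protocol.

```python
import shlex


def qiime2_core_commands(demux="demux.qza", classifier="classifier.qza",
                         trunc_len_f=240, trunc_len_r=200):
    """Build argv lists for denoising, taxonomy assignment, and tree building."""
    denoise = [
        "qiime", "dada2", "denoise-paired",
        "--i-demultiplexed-seqs", demux,
        "--p-trunc-len-f", str(trunc_len_f),   # placeholder; choose from quality plots
        "--p-trunc-len-r", str(trunc_len_r),   # placeholder; choose from quality plots
        "--o-table", "table.qza",
        "--o-representative-sequences", "rep-seqs.qza",
        "--o-denoising-stats", "denoising-stats.qza",
    ]
    classify = [
        "qiime", "feature-classifier", "classify-sklearn",
        "--i-classifier", classifier,
        "--i-reads", "rep-seqs.qza",
        "--o-classification", "taxonomy.qza",
    ]
    build_tree = [
        "qiime", "phylogeny", "align-to-tree-mafft-fasttree",
        "--i-sequences", "rep-seqs.qza",
        "--o-alignment", "aligned-rep-seqs.qza",
        "--o-masked-alignment", "masked-aligned-rep-seqs.qza",
        "--o-tree", "unrooted-tree.qza",
        "--o-rooted-tree", "rooted-tree.qza",
    ]
    return [denoise, classify, build_tree]


commands = qiime2_core_commands()
for argv in commands:
    print(shlex.join(argv))
```

Keeping each command as a list of arguments makes it straightforward to pass to `subprocess.run` later, once the parameters have been chosen for the dataset at hand.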

Visualizing the Analysis Workflow

Title: 16S rRNA Analysis Core Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Reagents and Materials for 16S rRNA Sequencing Workflow

| Item | Function & Description |

|---|---|

| 16S rRNA Gene Primer Set (e.g., 515F/806R for V4 region) | Targets conserved regions flanking hypervariable regions for specific PCR amplification of the bacterial 16S gene. |

| High-Fidelity DNA Polymerase (e.g., Phusion, KAPA HiFi) | Reduces PCR errors to ensure accurate sequence representation prior to sequencing. |

| Magnetic Bead-based Cleanup Kit (e.g., AMPure XP) | For precise size selection and purification of PCR amplicons, removing primer dimers and contaminants. |

| Dual-Indexed Sequencing Adapters (Nextera XT, Illumina) | Allows multiplexing of hundreds of samples in a single sequencing run by attaching unique barcodes to each. |

| Quantification Kit (e.g., Qubit dsDNA HS Assay) | Accurate fluorometric quantification of DNA library concentration for optimal sequencing loading. |

| PhiX Control v3 (Illumina) | Serves as a quality control for cluster generation, sequencing, and alignment on Illumina platforms. |

| Standardized Mock Microbial Community DNA (e.g., ZymoBIOMICS) | Positive control containing known, sequenced genomes to assess accuracy of entire wet-lab and bioinformatics pipeline. |

| DNA/RNA Shield or Similar Preservation Buffer | Stabilizes microbial community snapshots at the point of sample collection, preventing shifts. |

Within the foundational research on 16S rRNA sequencing data analysis, reference databases serve as the critical taxonomic backbone. They enable the translation of raw genetic sequences into biologically meaningful classifications, forming the basis for understanding microbial community composition and dynamics. This whitepaper provides an in-depth technical guide to four pivotal databases: Greengenes, SILVA, the Ribosomal Database Project (RDP), and the Genome Taxonomy Database (GTDB). Their curation philosophies, update statuses, and applications directly influence downstream interpretations in research and drug development, where accurate microbial profiling can inform therapeutic targets and diagnostic markers.

The four databases differ in scope, curation methodology, and underlying taxonomy, leading to significant implications for analysis outcomes.

Table 1: Core Characteristics of Major 16S rRNA Reference Databases

| Feature | Greengenes | SILVA | RDP | GTDB |

|---|---|---|---|---|

| Primary Focus | 16S rRNA gene (V4 hypervariable region emphasized) | Comprehensive rRNA (16S/18S/28S) genes | 16S rRNA gene with fungal 28S | Genome-based taxonomy for Bacteria & Archaea |

| Current Version | 13_8 (2013, deprecated) | SSU r138.1 (2020, semi-curated) | RDP 11.5 (2016, update paused) | R220 (2024, actively updated) |

| Taxonomy Source | De novo alignment and tree-based classification | Manually curated, aligned with LTP and Bergey's | Naïve Bayesian classifier training set | Phylogenomic consensus from concatenated ubiquitous marker proteins (120 bacterial, 53 archaeal) |

| Alignment | NAST-based, length ~1,200 bases | SINA aligner, length ~50,000 bases | Inferred secondary structure, length variable | Not applicable (whole genome focus) |

| Number of Taxa | ~1.3 million 16S sequences, ~0.5M clustered (99%) | ~2.1 million small subunit sequences | ~3.3 million 16S sequences, hierarchically classified | ~47,000 bacterial & archaeal genome assemblies |

| Strengths | Historical standard, reproducible legacy analyses | Broad phylogenetic range, high-quality manual curation | Excellent online analysis tools, fungal inclusion | Revolutionarily consistent, genome-resolved taxonomy |

| Limitations | No longer updated; outdated taxonomy | Curation lags behind sequence submission; large size | Update paused; may miss novel diversity | Not directly for short 16S fragments; requires pplacer |

Table 2: Quantitative Database Performance Metrics (Generalized from Benchmark Studies)

| Metric | Greengenes | SILVA | RDP | GTDB |

|---|---|---|---|---|

| Classification Accuracy (Genus-level, Mock Community) | ~85%* | ~92% | ~89% | ~95% (with proper fragment mapping) |

| Computational Resource Demand | Low | Very High | Medium | High (for genome placement) |

| Update Frequency | None (static) | ~1-2 years | None (static) | Roughly annual |

| Coverage of Novel Diversity | Low | Medium-High | Medium | High (for cultured/sequenced genomes) |

Note: Accuracy is context-dependent on the hypervariable region and sample type. GTDB excels when the underlying organism has a representative genome.
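Accuracy figures of this kind come from comparing classifier output on a mock community with its known composition. One simple way to score this is sketched below, under the assumption that accuracy is defined as the fraction of reads assigned to a genus that is actually in the mock community; benchmark studies use several different definitions. The feature identifiers, genera, and counts are hypothetical.

```python
def genus_level_accuracy(assigned, expected_genera):
    """Fraction of reads whose assigned genus is part of the mock community.

    assigned maps feature_id -> (genus or None, read_count); unassigned
    features (genus None) count against accuracy.
    """
    total = sum(count for _, count in assigned.values())
    correct = sum(count for genus, count in assigned.values()
                  if genus in expected_genera)
    return correct / total if total else 0.0


expected = {"Lactobacillus", "Bacillus", "Escherichia"}  # hypothetical mock community
assigned = {
    "f1": ("Lactobacillus", 60),
    "f2": ("Bacillus", 30),
    "f3": (None, 5),          # unclassified at genus level
    "f4": ("Ralstonia", 5),   # not in the mock community (possible contaminant)
}
accuracy = genus_level_accuracy(assigned, expected)
print(f"genus-level accuracy: {accuracy:.0%}")
```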

Detailed Methodologies for Database-Centric Analysis

Protocol: Taxonomic Classification with a Reference Database

This protocol outlines the standard workflow for classifying 16S rRNA amplicon sequences using QIIME 2 and a reference database.

Materials & Reagents:

- Demultiplexed Paired-end Sequence Reads (FASTQ format).

- Reference Database (e.g., SILVA SSU r138 formatted for QIIME 2).

- QIIME 2 Core Distribution (version 2024.2 or later).

- Computational Resources: Minimum 8 GB RAM, multi-core processor.

Procedure:

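- Sequence Quality Control and Feature Table Construction: Denoise the demultiplexed reads to obtain a feature table and representative sequences.

- Taxonomic Classification using a Pre-trained Classifier: Download a pre-formatted SILVA classifier, then classify the representative sequences against it.

- Generation of Visual Reports: Produce visual summaries of the assigned taxonomy for inspection.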

Protocol: Phylogenetic Placement of Sequences into the GTDB Reference Tree

This protocol describes placing 16S sequences into the GTDB genome-based phylogenetic framework using pplacer.

Materials & Reagents:

- Query Sequences: Representative 16S sequences (FASTA).

- GTDB Reference Package: Contains the reference tree (Bac120/Ar53) and alignment model. Downloaded from GTDB website.

- pplacer software suite (v1.1.alpha19 or later).

- TAXTK utility (for taxonomic assignment from placement).

Procedure:

- Prepare the Query Sequences: Align your 16S sequences to the GTDB reference alignment using hmmalign (part of the HMMER package) with the provided bacterial or archaeal HMM profile.

- Convert the Alignment to pplacer Input (FASTA).

- Run Phylogenetic Placement with pplacer: This generates a .jplace file containing the placement positions on the reference tree.

- Assign Taxonomy: Use guppy (from the pplacer suite) or TAXTK to assign taxonomy based on the placements.

Visualizations

Diagram 1: 16S Analysis Workflow from Data to Taxonomy via Four Key Databases

Diagram 2: Data Sources and Curation Relationships for Reference Databases

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for 16S rRNA Database-Centric Experiments

| Item | Function in Protocol | Example Product/Source |

|---|---|---|

| 16S rRNA Gene Primer Mix (V4 Region) | Amplifies the target hypervariable region from genomic DNA for Illumina sequencing. | 515F (Parada)/806R (Apprill) from IDT. |

| High-Fidelity DNA Polymerase Mix | Ensures accurate amplification with minimal PCR errors for downstream sequence analysis. | KAPA HiFi HotStart ReadyMix (Roche). |

| Quant-iT PicoGreen dsDNA Assay Kit | Precisely quantifies double-stranded DNA library concentration before sequencing. | Thermo Fisher Scientific, P7589. |

| PhiX Control v3 | Serves as a spike-in internal control for Illumina run quality monitoring and phasing/prephasing calculation. | Illumina, FC-110-3001. |

| Qubit dsDNA HS Assay Kit | Accurate quantification of low-concentration DNA samples (e.g., post-PCR cleanup). | Thermo Fisher Scientific, Q32854. |

| MiSeq Reagent Kit v3 (600-cycle) | Provides all chemicals, flow cell, and buffers for 2x300 bp paired-end sequencing on MiSeq. | Illumina, MS-102-3003. |

| Nextera XT Index Kit | Attaches dual indices (barcodes) to amplified libraries for multiplexed sequencing. | Illumina, FC-131-1096. |

| AMPure XP Beads | Performs size selection and cleanup of sequencing libraries, removing primers and adapter dimers. | Beckman Coulter, A63881. |

| DNeasy PowerSoil Pro Kit | Standardized, high-yield extraction of microbial genomic DNA from complex sample types (soil, stool). | Qiagen, 47014. |

| ZymoBIOMICS Microbial Community Standard | Defined mock microbial community used as a positive control to validate entire workflow accuracy. | Zymo Research, D6300. |

Your Step-by-Step 16S Analysis Pipeline: From Raw FASTQ to Biological Insight

Within the foundational research of 16S rRNA sequencing data analysis, selecting an appropriate bioinformatics pipeline is a critical first step that dictates the quality, reproducibility, and biological interpretation of results. This guide provides an in-depth technical comparison of three predominant platforms: QIIME 2, mothur, and DADA2, framing their use within a standard analytical workflow for microbial community studies.

Core Pipeline Architectures and Methodologies

The three tools represent two distinct philosophical approaches: mothur and QIIME 2 are comprehensive, all-in-one workflow suites, while DADA2 is a specialized, R-based package focused on the initial step of inferring exact amplicon sequence variants (ASVs).

1. QIIME 2 (Quantitative Insights Into Microbial Ecology)

- Protocol: QIIME 2 operates via a plugin architecture. A core denoising protocol using the DADA2 or Deblur plugins involves: i) importing demultiplexed sequence files (e.g., FASTQ), ii) primer trimming, iii) quality filtering, denoising, and chimera removal to produce ASVs, iv) clustering ASVs into an OTU table (if desired), v) assigning taxonomy using a pre-trained classifier (e.g., Silva, Greengenes), and vi) generating a feature table for downstream analysis.

- Key Innovation: Reproducible, traceable analysis through immutable data artifacts and provenance tracking.

2. mothur (Schloss et al.)

- Protocol: The standard mothur SOP (Standard Operating Procedure) is a sequential command-line process: i) processing raw FASTQ files (make.contigs), ii) rigorous filtering and alignment to a reference database (e.g., Silva), iii) pre-clustering to reduce noise, iv) chimera removal (e.g., chimera.vsearch), v) clustering sequences into OTUs based on a distance cutoff (typically 97% similarity), and vi) taxonomic classification using the naive Bayesian classifier.

- Key Innovation: A single, unified command-line environment designed to be a complete pipeline, emphasizing community standards and SOPs.

3. DADA2 (Divisive Amplicon Denoising Algorithm)

- Protocol: Executed within R, the core workflow includes: i) inspecting read quality profiles, ii) filtering and trimming, iii) learning the error rate model from the data, iv) dereplication, v) sample inference to identify exact ASVs, vi) merging paired-end reads, vii) removing chimeras, and viii) assigning taxonomy. It outputs a sequence table of ASVs.

- Key Innovation: A parametric error model that infers true biological sequences at single-nucleotide resolution, moving beyond traditional clustering.

Quantitative Comparison of Pipeline Outputs

The choice between OTU (mothur, QIIME 2 option) and ASV (DADA2, QIIME 2 option) methods impacts downstream metrics.

Table 1: Characteristic Output Metrics for a Representative 16S Dataset (V4 Region, 250bp reads, 10M total sequences)

| Feature | mothur (OTU, 97%) | QIIME 2 (Deblur ASV) | DADA2 (ASV) |

|---|---|---|---|

| Typical Output Units | Operational Taxonomic Units (OTUs) | Amplicon Sequence Variants (ASVs) | Amplicon Sequence Variants (ASVs) |

| Resolution | ~97% similarity clusters | Single-nucleotide | Single-nucleotide |

| Avg. Features per Sample | 150 - 300 | 200 - 400 | 180 - 380 |

| Chimera Removal Rate | 5-15% | Integrated in denoising | 5-20% |

| Key Strength | Highly standardized, reproducible SOP | Full workflow with provenance | High resolution, precise sequence inference |
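To make the resolution difference in the table concrete, the following sketch contrasts exact-sequence grouping (the ASV view) with greedy clustering at a 97% identity cutoff (the OTU view). It is a toy illustration on equal-length strings using Hamming identity; the function `greedy_otus` and the example reads are hypothetical and do not reproduce the clustering algorithms of mothur or QIIME 2.

```python
from collections import Counter


def identity(a: str, b: str) -> float:
    """Fraction of matching positions between two equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("toy example expects equal-length sequences")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def greedy_otus(reads, threshold=0.97):
    """Greedy centroid clustering: the most abundant sequences seed clusters first."""
    counts = Counter(reads)
    centroids = {}  # centroid sequence -> total reads assigned to its cluster
    for seq, n in counts.most_common():
        for centroid in centroids:
            if identity(seq, centroid) >= threshold:
                centroids[centroid] += n
                break
        else:
            # No existing centroid is similar enough, so start a new cluster.
            centroids[seq] = n
    return centroids


base = "ACGT" * 25               # 100-nt toy "amplicon"
variant = "T" + base[1:]         # 1 mismatch to base (99% identity)
distant = "TTTTT" + base[5:]     # 4 mismatches to base (96% identity)

reads = [base] * 50 + [variant] * 20 + [distant] * 10

asvs = Counter(reads)            # exact variants: single-nucleotide resolution
otus = greedy_otus(reads, threshold=0.97)

print(len(asvs), "exact variants;", len(otus), "OTUs at 97%")
```

In this toy data the single-mismatch variant stays a separate feature under exact grouping but is absorbed into its neighbor's cluster at 97%, which is the trade-off the Resolution row describes.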

Workflow Logic and Decision Pathway

The following diagram illustrates the logical relationship and primary decision points between these tools within a research thesis framework.

Title: Decision Pathway for 16S rRNA Analysis Pipeline Selection

Table 2: Key Resources for 16S rRNA Sequencing Analysis

| Item | Function in Analysis | Example/Note |

|---|---|---|

| Reference Database | For taxonomic assignment of sequence features. | SILVA, Greengenes, RDP. Required for taxonomy steps in all pipelines. |

| Classifier | Pre-trained machine learning model for taxonomy. | q2-feature-classifier (QIIME 2), wang method (mothur), assignTaxonomy (DADA2). |

| Alignment Template | Reference alignment for phylogenetic placement. | Required for mothur's align.seqs and phylogenetic diversity metrics. |

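| Chimera Reference | Clean reference sequences for chimera checking. | Used by reference-based chimera checks (e.g., chimera.vsearch in mothur when a reference is supplied); removeBimeraDenovo (DADA2) works de novo and needs no reference. |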

| Positive Control Mock Community | Validates pipeline accuracy for known composition. | Essential for benchmarking error rates and bioinformatics SOPs. |

| Negative Control | Identifies reagent or environmental contaminants. | Informs pipeline steps for contaminant removal (e.g., decontam R package). |

| Conda Environment | Manages isolated, reproducible software installations. | qiime2-2024.5 distribution, bioconda channels for mothur/DADA2. |

This whitepaper details the foundational first step within a comprehensive thesis on 16S rRNA sequencing data analysis. For researchers and drug development professionals, robust initial processing is critical for generating accurate microbial community profiles. This guide covers contemporary methodologies for Quality Control (QC), denoising, and primer trimming, which collectively transform raw sequencing reads into a reliable feature table for downstream ecological and statistical analysis.

In 16S rRNA amplicon sequencing, raw data from platforms like Illumina MiSeq or NovaSeq contains inherent noise, sequencing errors, and artificial sequences from PCR primers. The primary objective of Step 1 is to distinguish true biological signal from technical noise. This process directly influences all subsequent conclusions regarding microbial diversity, abundance, and differential abundance in therapeutic contexts.

Quality Control (QC) of Raw Sequences

Initial QC assesses read quality to determine filtering parameters and diagnose sequencing run issues.

Key Quantitative Metrics

Table 1: Core QC Metrics for Illumina Paired-End 16S Data (V3-V4 Region)

| Metric | Optimal Value/Range | Tool for Assessment | Implication of Deviation |

|---|---|---|---|

| Per-base Sequence Quality (Phred Score, Q) | Q ≥ 30 for majority of cycles | FastQC, MultiQC | High error rate; increased false OTUs/ASVs |

| Total Reads per Sample | ≥ 10,000 (Min.) | Demultiplexed output | Insufficient sequencing depth |

| Read Length | ~250-300 bp (2x250 bp or 2x300 bp PE common) | FastQC | Short reads may not span target region |

| GC Content | ~50-60% (Bacteria-specific) | FastQC | Contamination or adapter presence |

| Adapter Content | 0% | FastQC | Requires aggressive adapter trimming |

| % of Bases ≥ Q30 | > 80% | FastQC, vendor software | Overall run quality indicator |
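The Phred-based rows of the table rest on the relation P = 10^(-Q/10), so Q30 corresponds to an expected error rate of 1 in 1,000 bases. The sketch below shows how the mean quality, the fraction of bases at or above Q30, and the expected number of errors per read could be derived from a FASTQ quality string. It assumes Phred+33 encoding; the helper names and the example string are illustrative, not part of FastQC.

```python
def phred_to_error_prob(q: int) -> float:
    """Convert a Phred quality score Q to an error probability: P = 10^(-Q/10)."""
    return 10 ** (-q / 10)


def decode_quality(qual: str, offset: int = 33) -> list[int]:
    """Decode a FASTQ quality string (Phred+33 by default) into integer scores."""
    return [ord(ch) - offset for ch in qual]


def summarize(qual: str) -> dict:
    """Per-read summary: mean Q, fraction of bases >= Q30, expected errors."""
    scores = decode_quality(qual)
    return {
        "mean_q": sum(scores) / len(scores),
        "frac_q30": sum(q >= 30 for q in scores) / len(scores),
        "expected_errors": sum(phred_to_error_prob(q) for q in scores),
    }


# Hypothetical quality string: 'I' encodes Q40 and '5' encodes Q20 in Phred+33.
example = "I" * 8 + "5" * 2
print(summarize(example))
```

The expected-errors sum is the same quantity that DADA2-style filtering thresholds on, which is why a few low-quality cycles at the end of a read can dominate the decision to truncate.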

Experimental Protocol: Initial QC with FastQC & MultiQC

- Input: Demultiplexed paired-end FASTQ files (sample_R1.fastq.gz, sample_R2.fastq.gz).

- Tool Execution: Run FastQC on every FASTQ file.

- Aggregate Report Generation: Run MultiQC on the FastQC output directory to combine the per-sample reports.

- Analysis: Visually inspect the multiqc_report.html for consistent quality profiles across samples. Note regions where median quality drops below Q20.

Primer Trimming

Primer sequences must be accurately identified and removed, as their presence interferes with read merging and causes mis-clustering.

Detailed Methodology

Table 2: Primer Trimming Tools and Protocols

| Tool | Algorithm/Key Feature | Command Example | Rationale |

|---|---|---|---|

| cutadapt (v4.0+) | Alignment with error tolerance. | cutadapt -g GTGYCAGCMGCCGCGGTAA -G GGACTACNVGGGTWTCTAAT -o trim_R1.fastq -p trim_R2.fastq raw_R1.fastq raw_R2.fastq | Precise, allows indels and mismatches. |

| Atropos | Improved multithreading. | Similar syntax to cutadapt. | Faster for large datasets. |

| DADA2 (within R) | removePrimers() function. | dada2::removePrimers(fn, fout, primer.fwd, primer.rev) | Integrates directly into DADA2 pipeline. |

Protocol (using cutadapt):

- Identify Primer Sequences: e.g., 515F (GTGYCAGCMGCCGCGGTAA) and 806R (GGACTACNVGGGTWTCTAAT) for the Earth Microbiome Project.

- Run Trim: Command shown in Table 2. The -g and -G flags specify forward and reverse primer sequences.

- Output: Primer-trimmed FASTQ files. Discard reads where primers are not found.
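The primers above contain IUPAC degenerate codes (Y, M, N, V, W), so locating them is a pattern-matching problem and not a plain string comparison. The sketch below expands a degenerate primer into a regular expression and trims it from the 5' end of a read. It is a simplified illustration: it requires an exact match at the read start, whereas cutadapt tolerates mismatches and indels. The function names and example reads are hypothetical.

```python
import re

# IUPAC nucleotide codes expanded to regex character classes.
IUPAC = {
    "A": "A", "C": "C", "G": "G", "T": "T",
    "R": "[AG]", "Y": "[CT]", "S": "[CG]", "W": "[AT]",
    "K": "[GT]", "M": "[AC]", "B": "[CGT]", "D": "[AGT]",
    "H": "[ACT]", "V": "[ACG]", "N": "[ACGT]",
}


def primer_regex(primer: str) -> re.Pattern:
    """Compile a degenerate primer into a regex anchored at the read start."""
    return re.compile("^" + "".join(IUPAC[base] for base in primer.upper()))


def trim_primer(read: str, primer: str):
    """Return the read without its 5' primer, or None if the primer is absent."""
    match = primer_regex(primer).match(read)
    return read[match.end():] if match else None


FWD_515F = "GTGYCAGCMGCCGCGGTAA"

# Hypothetical reads: the first starts with one concrete instance of 515F.
with_primer = "GTGCCAGCAGCCGCGGTAA" + "TACGGAGGGTGC"
without_primer = "TACGGAGGGTGCAAGCGTTA"

print(trim_primer(with_primer, FWD_515F))     # primer removed
print(trim_primer(without_primer, FWD_515F))  # None: read would be discarded
```

Returning None for reads without a primer mirrors the "discard reads where primers are not found" rule in the protocol.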

Denoising: From Reads to Amplicon Sequence Variants (ASVs)

Denoising infers the true biological sequences present, correcting sequencing errors without clustering at an arbitrary similarity threshold.

Comparative Analysis of Denoising Algorithms

Table 3: Contemporary Denoising Tools (2023-2024)

| Tool / Pipeline | Core Algorithm | Error Model | Key Output | Primary Citation |

|---|---|---|---|---|

| DADA2 (v1.28) | Divisive, partition-based. | Learn from data via sample inference. | Amplicon Sequence Variants (ASVs). | Callahan et al., Nat Methods, 2016. |

| deblur (v1.1.0) | Error-profile-based. | Uses a positive filter (static). | Sub-OTUs (effectively ASVs). | Amir et al., mSystems, 2017. |

| UNOISE3 (vsearch) | Greedy clustering, discards "noise". | Denoising by abundance threshold. | ZOTUs (Zero-radius OTUs). | Edgar, bioRxiv, 2016. |

| QIIME 2 w/ DADA2 | Wrapper for DADA2. | As per DADA2. | ASVs within QIIME 2 artifact. | Bolyen et al., Nat Biotechnol, 2019. |

Experimental Protocol: Denoising with DADA2 in R

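- Filter and Trim: Based on the QC report, truncate reads at the position where quality drops sharply.

- Learn Error Rates: Model the error profile from a subset of data.

- Dereplicate and Denoise: Collapse identical reads, then infer the exact ASVs in each sample.

- Merge Paired Reads: Join the denoised forward and reverse reads by their overlap.

- Construct Sequence Table: This is the denoised feature table, from which chimeras are subsequently removed.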
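The merging step in the list above can be sketched in a few lines. The example reverse-complements the reverse read and joins the pair at the longest exact overlap. This is a deliberately simplified stand-in: DADA2's own merging aligns the reads and can tolerate mismatches, which this sketch does not attempt. The amplicon sequence and the `min_overlap` default are illustrative assumptions.

```python
COMPLEMENT = str.maketrans("ACGTN", "TGCAN")


def reverse_complement(seq: str) -> str:
    """Reverse-complement a DNA sequence."""
    return seq.translate(COMPLEMENT)[::-1]


def merge_pair(fwd: str, rev: str, min_overlap: int = 12):
    """Merge a read pair by the longest exact overlap; None if it is too short."""
    rev_rc = reverse_complement(rev)
    max_len = min(len(fwd), len(rev_rc))
    for overlap in range(max_len, min_overlap - 1, -1):
        if fwd[-overlap:] == rev_rc[:overlap]:
            return fwd + rev_rc[overlap:]
    return None


# Hypothetical 40-nt amplicon sequenced as two 28-nt reads (16-nt overlap).
amplicon = "ACGTTGCAAGCTTAGGCTAACCGGTTAGCATCGATCGGAT"
fwd_read = amplicon[:28]
rev_read = reverse_complement(amplicon[-28:])

merged = merge_pair(fwd_read, rev_read)
print(merged == amplicon)
```

Pairs that return None here correspond to reads that fail to merge, which is why truncation lengths in the filtering step must leave enough overlap for the chosen amplicon.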

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for 16S Library Prep and Sequencing

| Item | Function | Example Vendor/Kit |

|---|---|---|

| PCR Primers (V3-V4) | Amplify target hypervariable region of 16S gene. | Illumina 16S Metagenomic Sequencing Library Prep (341F/805R). |

| High-Fidelity DNA Polymerase | Accurate amplification with low error rate. | KAPA HiFi HotStart ReadyMix. |

| Magnetic Bead Clean-up Kit | Size selection and purification of amplicons. | AMPure XP Beads. |

| Indexing Adapters (Nextera XT) | Dual indexing for sample multiplexing. | Illumina Nextera XT Index Kit v2. |

| Library Quantification Kit | Accurate measurement of library concentration for pooling. | Qubit dsDNA HS Assay Kit. |

| PhiX Control v3 | Spiked-in for run quality monitoring on Illumina. | Illumina PhiX Control Kit. |

| MiSeq Reagent Kit v3 (600-cycle) | Provides chemistry for 2x300bp paired-end sequencing. | Illumina MS-102-3003. |

Visualized Workflows

Title: 16S Data Denoising Workflow from FASTQ to ASVs

Title: Denoising Concept: From Noisy Reads to Precise ASVs

This whitepaper constitutes the second core chapter of a broader thesis on the basics of 16S rRNA sequencing data analysis. Following initial data preprocessing (Step 1), the accurate inference of exact biological sequences, or Amplicon Sequence Variants (ASVs), from noisy sequencing data is the critical next step. This step moves beyond clustering sequences by arbitrary similarity thresholds (e.g., 97% for Operational Taxonomic Units) to resolve single-nucleotide differences, providing higher resolution for downstream ecological and clinical analysis. Within the drug development pipeline, precise microbial profiling can identify biomarkers for patient stratification, monitor microbiome modulation therapies, and uncover novel microbial targets.

Core Algorithmic Frameworks: DADA2 vs. Deblur

DADA2: Divisive Amplicon Denoising Algorithm

DADA2 describes the errors introduced during amplicon sequencing with a parametric error model. It learns the specific error rates of a sequencing run from the data itself and uses this model to distinguish between true biological sequences and erroneous reads derived from PCR and sequencing errors.

Key Protocol:

- Error Model Learning: The algorithm estimates the error rate of each possible nucleotide substitution (e.g., A->C, A->G, A->T) as a function of the base quality score, using a subset of the reads. This creates a quality-aware substitution error matrix.

- Sample Inference: For each unique sequence in a sample, DADA2 computes its abundance p-value. This tests the null hypothesis that all reads of a sequence are erroneous derivatives of a more abundant sequence.

- Partitioning (Divisive Clustering): Reads are iteratively partitioned into "cores" and "clouds." The core contains reads of the putative true sequence, while the cloud contains putative errors. This continues until no further significant partitions can be made.

- Chimera Removal: A de novo chimera check is performed post-inference using the removeBimeraDenovo function, which identifies chimeras as sequences that can be constructed from left and right segments of more abundant parent sequences.
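The chimera definition in the last step (a left segment of one parent joined to a right segment of another, more abundant parent) can be illustrated with a minimal sketch. It assumes equal-length sequences and exact matching on both sides of the breakpoint. DADA2's actual function works on alignments and has additional abundance criteria, so the code below only demonstrates the idea; the function names and sequences are hypothetical.

```python
def is_bimera(candidate: str, parents: list[str]) -> bool:
    """True if candidate equals a left segment of one parent joined to the
    right segment of a different parent (exact matching, equal lengths)."""
    n = len(candidate)
    for left in parents:
        for right in parents:
            if left == right or len(left) != n or len(right) != n:
                continue
            # Try every interior breakpoint.
            for bp in range(1, n):
                if candidate[:bp] == left[:bp] and candidate[bp:] == right[bp:]:
                    return True
    return False


def remove_bimeras(abundances: dict[str, int]) -> dict[str, int]:
    """Keep sequences that are not bimeras of strictly more abundant sequences."""
    kept = {}
    for seq, count in abundances.items():
        parents = [s for s, c in abundances.items() if c > count]
        if not is_bimera(seq, parents):
            kept[seq] = count
    return kept


# Hypothetical ASV table: two abundant parents and one low-abundance chimera.
parent_a = "AAAAAAAAAACCCCCCCCCC"
parent_b = "GGGGGGGGGGTTTTTTTTTT"
chimera = "AAAAAAAAAATTTTTTTTTT"

table = {parent_a: 100, parent_b: 80, chimera: 5}
cleaned = remove_bimeras(table)
print(len(table), "->", len(cleaned), "sequences after bimera removal")
```

Restricting the parent set to more abundant sequences reflects the reasoning that a chimera forms from templates already present in quantity during PCR.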

Deblur: A Substitution-Error-Centric Approach

Deblur uses a positive filtering approach, focusing aggressively on removing erroneous reads to retain only those deemed "real" based on known error profiles and prior abundances.

Key Protocol:

- Initial Quality Filtering: Reads are trimmed to a specified length. All reads are shifted to a consistent starting point via alignment.

- Error Profile Application: A pre-determined or user-provided error profile (typically derived from mock community data) is used to predict the likely number of erroneous reads for each observed sequence.

- Iterative Read Subtraction (Deblurring): Reads are sorted by abundance. Starting with the most abundant sequence, its expected number of erroneous derivatives (based on the error profile and its abundance) is calculated and subtracted from the counts of less abundant, similar sequences. This process iterates through all sequences.

- Output: The remaining sequences, after all expected errors have been "deblurred" away, are reported as the true biological sequences.
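The iterative subtraction described above can be sketched as follows. For each sequence, taken in order of decreasing abundance, the expected number of error reads is subtracted from less abundant sequences within a small Hamming distance. The error profile values here are invented for illustration and are not Deblur's shipped defaults, and the function `deblur_sketch` is a hypothetical simplification (no indel handling, no read-length normalization).

```python
def hamming(a: str, b: str) -> int:
    """Number of mismatching positions between two equal-length sequences."""
    return sum(x != y for x, y in zip(a, b))


def deblur_sketch(counts: dict[str, float], error_profile: list[float]) -> dict[str, float]:
    """Subtract expected error reads from less abundant neighbours.

    error_profile[d] is the assumed fraction of a sequence's reads that show up
    as an erroneous sequence at Hamming distance d (index 0 is unused).
    """
    remaining = dict(counts)
    order = sorted(counts, key=counts.get, reverse=True)
    for i, seq in enumerate(order):
        abundance = remaining[seq]
        if abundance <= 0:
            continue  # already fully explained as an error of another sequence
        for other in order[i + 1:]:
            d = hamming(seq, other)
            if 0 < d < len(error_profile):
                remaining[other] -= abundance * error_profile[d]
    # Sequences whose counts were driven to zero or below are treated as noise.
    return {s: c for s, c in remaining.items() if c > 0}


# Hypothetical counts: one abundant sequence, one likely error, one real variant.
true_seq = "ACGTACGTAC"
error_seq = "ACGTACGTAA"     # 1 mismatch to true_seq, low abundance
real_variant = "ACGTTCGTAG"  # 2 mismatches to true_seq, high abundance

counts = {true_seq: 1000, error_seq: 40, real_variant: 300}
profile = [0.0, 0.05, 0.01]  # illustrative values only

result = deblur_sketch(counts, profile)
print(result)
```

The low-abundance neighbour is removed because its 40 reads are fewer than the 50 errors predicted from the abundant sequence, while the variant with 300 reads survives the subtraction.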

Comparative Performance Data

Table 1: Benchmarking DADA2 and Deblur on Mock Community Data (Summarized from Recent Studies).

| Metric | DADA2 | Deblur | Notes |

|---|---|---|---|

| Recall (Sensitivity) | High (>95%) | Very High (>98%) | Deblur's aggressive filtering can retain more true rare variants. |

| Precision (Positive Predictive Value) | Very High (>99%) | High (>97%) | DADA2's statistical model minimizes false positives. |

| Computational Speed | Moderate | Fast | Deblur is typically faster, especially on large datasets. |

| Memory Usage | Higher | Lower | DADA2's model-fitting requires more RAM. |

| Handling of Indels | Models them explicitly | Removes reads with indels | DADA2 can infer sequences with genuine insertions/deletions. |

| Dependence on Error Profile | Learns from data (sample-specific) | Relies on provided profile | Deblur may require a suitable error profile for optimal results. |

| Output Resolution | ASVs | ASVs | Both provide single-nucleotide resolution. |

Table 2: Typical Reagent and Workflow Costs per Sample (Approximate, Illumina Platform).

| Cost Component | DADA2 Workflow | Deblur Workflow | Function |

|---|---|---|---|

| 16S PCR Reagents | $15 - $25 | $15 - $25 | Amplification of target hypervariable region. |

| Library Prep & Indexing | $20 - $40 | $20 - $40 | Attaching sequencing adapters and sample barcodes. |

| MiSeq Reagent Kit (v3, 600-cycle) | ~$1,200 per run (~$12-24/sample at 50-100 plex) | ~$1,200 per run (~$12-24/sample at 50-100 plex) | Sequencing chemistry. Cost is distributed across multiplexed samples. |

| Bioinformatics Compute | $0.50 - $2.00 | $0.25 - $1.00 | Cloud/Cluster costs for processing. Deblur is generally more cost-efficient. |
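The per-sample sequencing figure in the table is the run cost divided by the multiplexing level, and the total per-sample range is the sum of the component ranges. The short sketch below reproduces that arithmetic for the DADA2 column using only the approximate values from the table; the function and variable names are illustrative.

```python
def sequencing_cost_per_sample(run_cost: float, samples_per_run: int) -> float:
    """Run-level reagent cost divided evenly across multiplexed samples."""
    return run_cost / samples_per_run


def total_cost_range(components: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum the low and high ends of each per-sample cost component."""
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return low, high


RUN_COST = 1200.0  # approximate MiSeq v3 kit cost per run, from the table

dada2_components = {
    "pcr": (15.0, 25.0),
    "library_prep": (20.0, 40.0),
    "sequencing": (
        sequencing_cost_per_sample(RUN_COST, 100),  # 100-plex
        sequencing_cost_per_sample(RUN_COST, 50),   # 50-plex
    ),
    "compute": (0.50, 2.00),
}

low, high = total_cost_range(dada2_components)
print(f"Approximate per-sample total: ${low:.2f} to ${high:.2f}")
```

Because the wet-lab components are identical for both workflows, the totals differ only by the compute line, which is small relative to reagents.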

The Scientist's Toolkit: Essential Research Reagents & Software

Table 3: Key Research Reagent Solutions for 16S Sequencing & Variant Inference.

| Item | Category | Function in ASV Inference Workflow |

|---|---|---|

| Mock Microbial Community (e.g., ZymoBIOMICS) | Control Standard | Contains known, quantified strains. Essential for validating the accuracy (precision/recall) of DADA2/Deblur pipelines. |

| PhiX Control v3 | Sequencing Control | Spiked into runs (1-5%) for Illumina platform error rate monitoring and base calling calibration. |

| KAPA HiFi HotStart ReadyMix | PCR Reagent | High-fidelity polymerase minimizes PCR errors introduced prior to sequencing, improving downstream variant inference. |

| Nextera XT Index Kit | Library Prep | Attaches dual indices for sample multiplexing, allowing pooled sequencing of hundreds of samples. |

| MiSeq Reagent Kit v3 (600-cycle) | Sequencing | Standard chemistry for 2x300bp paired-end reads, covering most 16S hypervariable regions. |

| Qubit dsDNA HS Assay Kit | Quantification | Accurately measures DNA library concentration for optimal loading on the sequencer. |

| DADA2 (R package) | Bioinformatics | Primary software for the DADA2 denoising algorithm. Performs filtering, error learning, inference, and chimera removal. |

| QIIME 2 (with Deblur plugin) | Bioinformatics | A comprehensive microbiome analysis platform that incorporates Deblur as a core plugin for ASV inference. |

| Cutadapt | Bioinformatics | Removes primer/adapter sequences. Critical pre-processing step before DADA2/Deblur. |

Workflow Visualization

Diagram 1: ASV Inference Workflow Comparison (DADA2 vs. Deblur)

Diagram 2: Deblur's Iterative Read Subtraction Logic

Within the foundational research pipeline for 16S rRNA sequencing data analysis, taxonomic classification is the critical step that assigns sequence reads to their likely biological origins (e.g., phylum, genus, species). Following quality control (Step 1) and OTU/ASV clustering (Step 2), this step transforms molecular data into biologically interpretable information. The Naive Bayes (NB) classifier has emerged as a standard, computationally efficient probabilistic method for this task, balancing accuracy with speed, which is essential for handling millions of sequences. This guide details its technical implementation, relevant to researchers and drug development professionals seeking to understand microbial community composition in contexts like dysbiosis studies or biomarker discovery.

Core Algorithm: The Naive Bayes Model for 16S rRNA

The NB classifier applies Bayes' Theorem under the "naive" assumption of feature (k-mer) independence. For a given query sequence \(Q\), it calculates the posterior probability of belonging to taxon \(T\) from a set of reference sequences.

Bayesian Framework:

\[ P(T|Q) = \frac{P(Q|T) \cdot P(T)}{P(Q)} \]

Where:

- \(P(T|Q)\): Posterior probability (taxon given sequence).

- \(P(Q|T)\): Likelihood (sequence given taxon).

- \(P(T)\): Prior probability (prevalence of taxon).

- \(P(Q)\): Evidence (constant across taxa).

The classifier selects the taxon \(T\) that maximizes \(P(T|Q)\). Since \(P(Q)\) is constant, the decision rule becomes:

\[ \hat{T} = \arg\max_{T} \left[ P(T) \cdot P(Q|T) \right] \]

The sequence \(Q\) is represented as a set of \(k\)-mers (subsequences of length \(k\)), written \(k_1, \dots, k_n\). Under the independence assumption:

\[ P(Q|T) \approx \prod_{i=1}^{n} P(k_i|T) \]

To avoid floating-point underflow, calculations are performed in log space:

\[ \hat{T} = \arg\max_{T} \left[ \log P(T) + \sum_{i=1}^{n} \log P(k_i|T) \right] \]
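The underflow problem that motivates the log-space form is easy to reproduce. In the sketch below, 200 k-mer probabilities of 0.001 each (hypothetical values) are multiplied directly and then summed as logarithms; the direct product collapses to zero in double precision while the log score remains usable for comparing taxa.

```python
import math

# 200 k-mers, each with a hypothetical conditional probability of 0.001.
kmer_probs = [1e-3] * 200

direct_product = math.prod(kmer_probs)            # true value 1e-600 underflows
log_score = sum(math.log(p) for p in kmer_probs)  # stays finite

print(direct_product)
print(round(log_score, 2))
```

Since the logarithm is monotonic, ranking taxa by the log score selects the same taxon as ranking by the product would, had the product been representable.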

Experimental Protocols & Methodologies

3.1. Reference Database Curation & Training

- Objective: Build a trained classification model from a curated reference database.

- Protocol:

- Database Selection: Download a targeted 16S database (e.g., SILVA, Greengenes, RDP). For clinical/drug development contexts, record the database version and confirm that it covers the clinically relevant taxa.

- Region Extraction: Use a positional filter (e.g., Escherichia coli position 341F-805R) to extract the hypervariable region(s) matching your sequencing protocol.

- k-mer Profiling: For each reference sequence, decompose its sequence into all possible overlapping k-mers (typical k=8 or k=12). Build a frequency table for each taxon.

- Prior Calculation: \(P(T)\) can be set as uniform or weighted by sequence abundance in the database.

- Smoothing: Apply additive (Laplace) smoothing to \(P(k_i|T)\) to handle k-mers not observed in training data, preventing zero probabilities. \[ P(k_i|T) = \frac{\text{count}(k_i, T) + \alpha}{\sum_{j}\left(\text{count}(k_j, T) + \alpha\right)} \] where \(\alpha\) is a small positive constant (e.g., 1).
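The k-mer profiling and smoothing steps can be made concrete with a small sketch. It counts overlapping k-mers across a taxon's reference sequences and applies additive smoothing, taking the sum in the denominator over the full vocabulary of 4^k possible k-mers. The choice k=3 and the two reference sequences are assumptions made to keep the example small; real classifiers use larger k and thousands of references.

```python
from collections import Counter
from itertools import product


def kmers(seq: str, k: int):
    """All overlapping k-mers of a sequence."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def smoothed_kmer_probs(seqs, k=3, alpha=1.0):
    """P(k_i | T) with additive smoothing over the full 4^k k-mer vocabulary."""
    counts = Counter(km for s in seqs for km in kmers(s, k))
    vocab = ["".join(p) for p in product("ACGT", repeat=k)]
    denom = sum(counts[km] + alpha for km in vocab)
    return {km: (counts[km] + alpha) / denom for km in vocab}


# Hypothetical reference sequences for a single taxon.
taxon_refs = ["ACGTACGTAC", "ACGTTCGTAC"]
probs = smoothed_kmer_probs(taxon_refs, k=3, alpha=1.0)

print(round(sum(probs.values()), 6))  # smoothed probabilities sum to 1
print(probs["GGG"] > 0)               # an unseen k-mer keeps a nonzero probability
```

Without the added constant, a single unseen k-mer in a query would force the whole likelihood to zero, so smoothing is what keeps near-matches competitive.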

3.2. Classification of Query Sequences

- Objective: Assign taxonomy to a set of query ASVs/OTUs from a sample.

- Protocol:

- Input Preparation: Provide the FASTA file of representative sequences from Step 2 (Clustering).

- k-mer Decomposition: Decompose each query sequence into the same k-mers used in training.

- Probability Calculation: For each query sequence and each candidate taxon, compute the log-likelihood sum of its k-mers.

- Assignment & Bootstrapping: Assign the taxon with the highest log-probability. To estimate confidence, perform bootstrapping (e.g., 100 iterations): repeatedly classify using a random subset (with replacement) of the query's k-mers. Report the consensus taxonomy and the bootstrap confidence percentage (e.g., ≥80% for confident genus-level assignment).

- Output: Generate a taxonomy assignment table and a confidence report.
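The classification and bootstrapping steps above can be combined into one compact sketch. It trains smoothed k-mer models for two toy taxa, assigns a query by maximum log-likelihood under a uniform prior, and estimates confidence by reclassifying resampled k-mers. Several choices are simplifying assumptions: k=4, resamples of the same size as the query's k-mer list (the RDP Classifier draws a smaller subset), and invented reference sequences. All function names are hypothetical.

```python
import math
import random
from collections import Counter


def kmers(seq, k):
    """All overlapping k-mers of a sequence."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def train(references, k=4, alpha=1.0):
    """Per-taxon k-mer counts plus the smoothed denominator over 4^k k-mers."""
    vocab_size = 4 ** k
    model = {}
    for taxon, seqs in references.items():
        counts = Counter(km for s in seqs for km in kmers(s, k))
        denom = sum(counts.values()) + alpha * vocab_size
        model[taxon] = (counts, denom)
    return model


def log_score(kmer_list, counts, denom, alpha):
    """Sum of log P(k_i | T) with additive smoothing."""
    return sum(math.log((counts[km] + alpha) / denom) for km in kmer_list)


def classify(kmer_list, model, alpha=1.0):
    """Uniform prior, so the arg max depends on the log-likelihood alone."""
    return max(model, key=lambda t: log_score(kmer_list, *model[t], alpha))


def classify_with_bootstrap(query, model, k=4, alpha=1.0, n_boot=100, seed=0):
    """Return the best taxon and the fraction of resamples that agree with it."""
    rng = random.Random(seed)
    query_kmers = kmers(query, k)
    best = classify(query_kmers, model, alpha)
    hits = 0
    for _ in range(n_boot):
        sample = rng.choices(query_kmers, k=len(query_kmers))  # with replacement
        hits += classify(sample, model, alpha) == best
    return best, hits / n_boot


# Hypothetical references for two taxa with no shared 4-mers.
references = {
    "Taxon_A": ["ACGTACGTACGTACGTACGTACGT"],
    "Taxon_B": ["TTGGCCAATTGGCCAATTGGCCAA"],
}
model = train(references)
taxon, confidence = classify_with_bootstrap("ACGTACGTACGT", model)
print(taxon, confidence)
```

In practice the confidence value is compared against a threshold such as the 80% mentioned above, and assignments below it are truncated to a higher taxonomic rank.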

Data Presentation: Performance Metrics

Table 1: Comparative Performance of Naive Bayes Classifiers on Mock Community Data

| Classifier Tool (NB Variant) | Reference Database | Average Genus-Level Accuracy* (%) | Computational Speed (Reads/sec) | Key Optimal Parameter |

|---|---|---|---|---|

| RDP Classifier (k-mer based) | RDP Training Set v18 | 96.5 | ~85,000 | k=8, bootstrap threshold=80% |

| QIIME2's feature-classifier (sklearn NB) | SILVA 138.1 (99% OTUs) | 97.8 | ~42,000 | k=7, alpha (smoothing)=0.01 |

| DADA2's assignTaxonomy (k-mer based) | GTDB r207 | 98.1 | ~38,000 | k=8, minBoot=50 |

| Kraken2 (Exact k-mer matching) | Custom 16S Index | 95.2 | ~150,000 | k=35, database size critical |

*Accuracy based on defined mock community benchmarks (e.g., ZymoBIOMICS, ATCC MSA-1003).

Table 2: Impact of k-mer Length on Classification

| k-mer Length | Specificity (Precision) | Sensitivity (Recall) | Runtime | Recommended Use Case |

|---|---|---|---|---|

| k=7 | Lower | Higher | Fastest | Shorter reads (<250bp), maximizing recall |

| k=8 (Default) | Balanced | Balanced | Fast | General use for V3-V4 (~400-450bp) |

| k=12 | Higher | Lower | Slower | Long reads (Full-length 16S), maximizing precision |

Visualizations

Title: Naive Bayes Classifier Workflow for 16S rRNA

Title: Naive Bayes Mathematical Foundation

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Tools for Taxonomic Classification

| Item/Category | Example Product/Resource | Function in Classification |

|---|---|---|

| Curated Reference Database | SILVA SSU 138.1, Greengenes 13_8, RDP, GTDB | Provides the gold-standard, taxonomically annotated sequence set for training the classifier model. Critical for accuracy. |

| Bioinformatics Suite | QIIME2 (via feature-classifier), Mothur (classify.seqs), DADA2 (assignTaxonomy) | Provides the computational framework and optimized pipelines to execute the Naive Bayes algorithm on large sequence sets. |

| Mock Community Control | ZymoBIOMICS Microbial Community Standard, ATCC MSA-1003 | Validates the entire bioinformatics pipeline, allowing calibration and accuracy benchmarking of the classifier against known composition. |

| High-Performance Computing (HPC) | Local cluster (SLURM), Cloud (AWS EC2, Google Cloud) | Provides the necessary CPU and memory resources for rapid processing of large-scale 16S amplicon studies (thousands of samples). |

| Classification Confidence Threshold | Bootstrap support (typically 80% for genus) | A configurable parameter that filters out low-confidence assignments, increasing specificity at the potential cost of sensitivity. |

Within the framework of a foundational thesis on 16S rRNA sequencing data analysis, the generation of core ecological metrics represents the pivotal transition from raw sequence data to interpretable biological insights. This step quantifies microbial diversity, a cornerstone for hypotheses in therapeutic development, personalized medicine, and mechanistic studies. This guide details the current methodologies for Alpha and Beta Diversity analysis.

Defining Core Diversity Metrics

Diversity metrics are categorized based on what they measure:

- Alpha Diversity: The diversity within a single sample or community. It is a reflection of richness (number of species) and evenness (abundance distribution).

- Beta Diversity: The difference between samples or communities. It measures the compositional dissimilarity.

Experimental Protocols for Analysis

The following workflow is standard for deriving diversity metrics from a processed Amplicon Sequence Variant (ASV) or Operational Taxonomic Unit (OTU) table.

Protocol 1: Core Diversity Analysis Workflow using QIIME 2

- Input: A filtered, rarefied feature table (ASV/OTU) and associated phylogenetic tree (for phylogenetic metrics).

- Alpha Diversity Calculation:

  - Execute qiime diversity core-metrics-phylogenetic (for integrated analysis) or qiime diversity alpha for specific indices.

  - Select a rarefaction depth determined from earlier rarefaction curves to ensure even sampling.

  - Specify desired alpha diversity indices (e.g., observed_features, shannon, faith_pd).

- Beta Diversity Calculation:

  - The same command (core-metrics-phylogenetic) generates distance matrices (e.g., Jaccard, Bray-Curtis, weighted/unweighted UniFrac).

  - For non-phylogenetic metrics, use qiime diversity beta.

- Statistical Testing:

  - Alpha: Use qiime diversity alpha-group-significance (Kruskal-Wallis test) to compare alpha diversity across metadata groups.

  - Beta: Use qiime diversity beta-group-significance (PERMANOVA by default), or qiime diversity adonis for multi-factor designs, to test for significant differences in community composition between groups.

- Visualization: Generate boxplots for alpha diversity and Principal Coordinates Analysis (PCoA) plots for beta diversity distance matrices.

Protocol 2: Analysis using R (phyloseq & vegan packages)

- Load Data: Import the feature table, taxonomy table, and sample metadata into a phyloseq object.

- Rarefaction: Use rarefy_even_depth() to standardize sequencing depth.

- Alpha Diversity:

  - Calculate indices: estimate_richness() for non-phylogenetic metrics; pd() (picante package) for Faith's PD.

  - Visualize with plot_richness().

  - Statistically compare groups with kruskal.test() or wilcox.test().

- Beta Diversity:

  - Calculate distance matrices: distance() function in phyloseq (supports Bray-Curtis, UniFrac, etc.).

  - Perform ordination: ordinate() for PCoA (e.g., method="PCoA", distance="bray").

  - Visualize with plot_ordination().

  - Perform PERMANOVA: adonis2() from the vegan package.
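Both protocols depend on rarefaction, which subsamples every sample to the same number of reads without replacement. The sketch below shows the idea on a single count vector. It is not the implementation used by QIIME 2 or phyloseq; the function name, the seed handling, and the example counts are assumptions for illustration.

```python
import random


def rarefy(counts, depth, seed=0):
    """Subsample a count vector to `depth` reads without replacement."""
    if sum(counts) < depth:
        return None  # too few reads: the sample would be dropped
    # One entry per read, labelled by the index of its feature.
    pool = [i for i, c in enumerate(counts) for _ in range(c)]
    picked = random.Random(seed).sample(pool, depth)
    rarefied = [0] * len(counts)
    for i in picked:
        rarefied[i] += 1
    return rarefied


# Hypothetical ASV counts for two samples with unequal sequencing depth.
deep_sample = [50, 30, 20]
shallow_sample = [5, 5]

rarefied = rarefy(deep_sample, depth=40)
print(rarefied, sum(rarefied))
print(rarefy(shallow_sample, depth=40))  # None: below the rarefaction depth
```

The choice of depth is therefore a trade-off: a higher depth retains more reads per sample but discards every sample that falls below it.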

Key Alpha Diversity Indices

The table below summarizes commonly used alpha diversity indices, their sensitivity to richness/evenness, and typical interpretations.

Table 1: Common Alpha Diversity Indices in 16S rRNA Analysis

| Index Name | Category | Measures | Formula (Conceptual) | Interpretation |

|---|---|---|---|---|

| Observed Features (Richness) | Richness | Number of distinct ASVs/OTUs | S = Count of features | Simple measure of richness. Ignores abundances. |

| Chao1 | Richness (Estimator) | Estimated true richness, correcting for unseen species | S_est = S_obs + F₁² / (2F₂), where F₁ and F₂ are the numbers of singletons and doubletons | Accounts for rare, low-abundance species. |

| Shannon Index | Diversity | Richness and evenness | H' = -Σ (pᵢ ln(pᵢ)) | Increases with both more species and more even abundances. Sensitive to changes in common species. |

| Faith's Phylogenetic Diversity | Phylogenetic Diversity | Total branch length of phylogenetic tree spanned by species in a sample | PD = Σ branch lengths | Incorporates evolutionary relationships; higher if taxa are phylogenetically dispersed. |
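The non-phylogenetic indices in the table can be computed directly from a vector of feature counts, as the sketch below shows. Two details are assumptions: the Shannon index uses the natural logarithm, matching the formula in the table (some tools default to base 2), and Chao1 falls back to the bias-corrected form when there are no doubletons. Faith's PD is omitted because it requires a phylogenetic tree.

```python
import math


def observed_features(counts):
    """Number of features with at least one read."""
    return sum(1 for c in counts if c > 0)


def chao1(counts):
    """S_obs + F1^2 / (2 * F2); uses the bias-corrected form if F2 is 0."""
    s_obs = observed_features(counts)
    f1 = sum(1 for c in counts if c == 1)  # singletons
    f2 = sum(1 for c in counts if c == 2)  # doubletons
    if f2 == 0:
        return s_obs + f1 * (f1 - 1) / 2
    return s_obs + f1 ** 2 / (2 * f2)


def shannon(counts):
    """H' = -sum(p_i * ln(p_i)) over features with nonzero counts."""
    total = sum(counts)
    return -sum((c / total) * math.log(c / total) for c in counts if c > 0)


# Hypothetical ASV counts for one sample.
sample = [5, 3, 1, 1, 2, 0]

print(observed_features(sample))
print(chao1(sample))
print(round(shannon(sample), 3))
```

For a perfectly even community of four features, the Shannon index equals ln(4), which illustrates how the index grows with both richness and evenness.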

Key Beta Diversity Distance Metrics

The choice of beta diversity metric profoundly influences results. The table below compares prevalent measures.

Table 2: Common Beta Diversity/Distance Metrics in 16S rRNA Analysis

| Metric Name | Incorporates Abundance? | Incorporates Phylogeny? | Sensitivity | Best For |

|---|---|---|---|---|

| Jaccard Distance | No (Presence/Absence) | No | Community membership differences. | Detecting strong turnover events where species are gained/lost. |

| Bray-Curtis Dissimilarity | Yes | No | Abundance differences of common species. | Most general-purpose measure for ecological gradients. |

| Unweighted UniFrac | No (Presence/Absence) | Yes | Phylogenetic lineage presence/absence. | Detecting phylogenetic turnover, often more sensitive than Jaccard. |

| Weighted UniFrac | Yes | Yes | Abundance-weighted phylogenetic differences. | Detecting changes where abundant lineages shift phylogenetically. |
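The two non-phylogenetic metrics in the table differ only in whether abundances are used, which the following sketch makes explicit. It assumes two samples described by count vectors over the same ordered set of features, ideally after rarefaction or another normalization; the vectors are invented for illustration. UniFrac is omitted because it needs a phylogenetic tree.

```python
def bray_curtis(a, b):
    """Sum of absolute count differences divided by the total count."""
    return sum(abs(x - y) for x, y in zip(a, b)) / (sum(a) + sum(b))


def jaccard_distance(a, b):
    """1 - (shared features) / (features present in either sample)."""
    present_a = {i for i, x in enumerate(a) if x > 0}
    present_b = {i for i, x in enumerate(b) if x > 0}
    return 1 - len(present_a & present_b) / len(present_a | present_b)


# Hypothetical counts for three features in two samples.
sample_1 = [1, 0, 3]
sample_2 = [1, 2, 0]

print(round(bray_curtis(sample_1, sample_2), 3))
print(round(jaccard_distance(sample_1, sample_2), 3))
```

Both functions return 0 for identical samples and 1 for samples that share no features, but only Bray-Curtis responds when a shared feature changes in abundance.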

Title: Alpha & Beta Diversity Analysis Workflow

The Scientist's Toolkit: Research Reagent & Software Solutions

Table 3: Essential Tools for Diversity Analysis

| Item/Category | Primary Function | Example Tools/Packages |

|---|---|---|

| Bioinformatics Pipeline | End-to-end processing and analysis of raw sequences to generate diversity metrics. | QIIME 2, mothur, DADA2 (R) |

| Statistical Software | Advanced statistical testing, custom visualization, and flexible analysis. | R (with phyloseq, vegan, ggplot2), Python (with scikit-bio, pandas) |

| Phylogenetic Tree Builder | Generates the phylogenetic tree required for Faith's PD and UniFrac metrics. | FastTree, QIIME 2 qiime phylogeny align-to-tree-mafft-fasttree pipeline |

| Rarefied Feature Table | The core input data, where samples have been sub-sampled to an even depth. | Output from QIIME 2 qiime feature-table rarefy or R phyloseq::rarefy_even_depth() |

| Distance Matrix Calculator | Computes pairwise dissimilarity between all samples for beta diversity. | QIIME 2 qiime diversity beta, R vegan::vegdist() or phyloseq::distance() |

| Ordination & Visualization Tool | Reduces dimensionality of distance matrices for interpretation (e.g., PCoA). | QIIME 2 qiime diversity pcoa, R ape::pcoa() + ggplot2 |

| Statistical Testing Suite | Performs hypothesis testing on alpha and beta diversity results. | QIIME 2 qiime diversity adonis, R vegan::adonis2(), stats::kruskal.test() |
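
Several tools in the table above expect a rarefied feature table. The idea behind rarefaction, sub-sampling each sample's reads without replacement down to a common depth, can be sketched as follows. The function name and the seed argument are hypothetical. This is an illustration of the concept, not the algorithm used internally by qiime feature-table rarefy or phyloseq::rarefy_even_depth().

```python
import random

def rarefy(counts, depth, seed=0):
    """Sub-sample one sample's counts to `depth` reads without replacement.

    Returns a new list of counts summing to `depth`, or None when the
    sample has fewer than `depth` reads (such samples are usually dropped).
    """
    if sum(counts) < depth:
        return None
    # Expand counts into one entry per read, labelled by feature index
    reads = [feature for feature, c in enumerate(counts) for _ in range(c)]
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    kept = rng.sample(reads, depth)
    rarefied = [0] * len(counts)
    for feature in kept:
        rarefied[feature] += 1
    return rarefied

# Toy sample with 100 reads, rarefied to 40 reads
original = [50, 30, 20, 0]
print(rarefy(original, 40))
print(rarefy([1, 2], 10))  # None: sample is shallower than the target depth
```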

Within a foundational thesis on 16S rRNA sequencing data analysis, the final analytical stage transforms processed data into biologically interpretable insights. This step employs statistical testing to infer significant differences and visualization to communicate complex microbial community patterns.

Statistical Testing for Microbial Composition

PERMANOVA (Permutational Multivariate Analysis of Variance)

Purpose: Tests the null hypothesis that the centroids and dispersion of groups of microbial communities are equivalent under a chosen distance metric.

Detailed Protocol:

- Input: A sample-by-OTU/ASV count table and a sample metadata file with a grouping variable (e.g., Treatment vs. Control).

- Distance Matrix Calculation: Compute a beta-diversity matrix (e.g., Bray-Curtis, Unweighted UniFrac) from the normalized count data.

- Test Statistic Calculation: The pseudo-F statistic is calculated, analogous to the F-ratio in traditional ANOVA but based on the distance matrix.

- Permutation: Group labels are randomly permuted (e.g., 9999 times), and a pseudo-F statistic is recomputed for each permutation to generate a null distribution.

- Inference: The observed pseudo-F is compared to the null distribution to calculate a p-value.
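
The protocol above can be sketched for a one-factor design using only a distance matrix and group labels. This is a simplified illustration under stated assumptions: the function names are hypothetical, the sums of squares follow the standard distance-based partitioning, and the p-value uses the common (hits + 1) / (permutations + 1) convention. It is not a substitute for vegan::adonis2(), which handles more complex designs.

```python
import random

def pseudo_f(dist, labels):
    """Return (pseudo-F, R^2) for one grouping factor from a square distance matrix."""
    n = len(labels)
    groups = {}
    for index, label in enumerate(labels):
        groups.setdefault(label, []).append(index)
    a = len(groups)
    # Total sum of squares: sum of squared pairwise distances divided by N
    ss_total = sum(dist[i][j] ** 2 for i in range(n) for j in range(i + 1, n)) / n
    # Within-group sum of squares: same quantity per group, divided by group size
    ss_within = 0.0
    for members in groups.values():
        squared = sum(dist[i][j] ** 2
                      for k, i in enumerate(members) for j in members[k + 1:])
        ss_within += squared / len(members)
    ss_among = ss_total - ss_within
    f_stat = (ss_among / (a - 1)) / (ss_within / (n - a))
    return f_stat, ss_among / ss_total

def permanova(dist, labels, n_perm=999, seed=0):
    """Permutation test: shuffle labels, recompute pseudo-F, compare to observed."""
    rng = random.Random(seed)
    f_obs, r2 = pseudo_f(dist, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if pseudo_f(dist, shuffled)[0] >= f_obs - 1e-12:
            hits += 1
    p_value = (hits + 1) / (n_perm + 1)
    return f_obs, r2, p_value

# Toy data: ten samples on a line, two clearly separated groups
positions = [0.0, 0.1, 0.2, 0.3, 0.4, 5.0, 5.1, 5.2, 5.3, 5.4]
dist = [[abs(x - y) for y in positions] for x in positions]
labels = ["Control"] * 5 + ["Treatment"] * 5
f_stat, r2, p = permanova(dist, labels)
print(f"pseudo-F = {f_stat:.1f}, R2 = {r2:.3f}, p = {p:.3f}")
```

With only five samples per group there are 252 distinct label assignments, so the smallest attainable p-value is limited by group size as well as by the number of permutations.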

Table 1: Interpretation of PERMANOVA Results

| Metric | Description | Typical Threshold |

|---|---|---|

| Pseudo-F (F model) | Ratio of among-group to within-group variance. Larger values suggest greater separation. | N/A |

| R² | Proportion of total variance explained by the grouping factor. | N/A |

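| p-value | Proportion of permutations yielding a pseudo-F at least as large as the observed one, i.e., the probability of seeing this much separation if the null hypothesis were true. | < 0.05 |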

| Permutations | Number of label shuffles used to build the null distribution. | ≥ 999 |

Key Consideration: A significant PERMANOVA result can be driven by differences in group location (centroid), dispersion, or both. A companion test for homogeneity of multivariate dispersions (e.g., betadisper in R) is essential.

ANCOM-BC (Analysis of Composition of Microbiomes with Bias Correction)

Purpose: Identifies differentially abundant taxa between groups while accounting for compositionality and sample-specific sampling fractions.

Detailed Protocol:

- Input: A raw sample-by-taxon count table and sample metadata.

- Bias Estimation: The model estimates two types of bias: the sampling fraction (systematic under-sampling) for each specimen and the taxon-specific bias (e.g., amplification efficiency).

- Log-Linear Model: Fits a linear model on the log-transformed observed abundances, correcting for the estimated biases:

log(observed_abundance) = β (differential abundance) + θ (sampling fraction) + ε (error)

- Hypothesis Testing: Tests the null hypothesis that β = 0 (no differential abundance) for each taxon using a Wald test or similar.

- Multiple Correction: Applies a correction for false discovery rate (e.g., Benjamini-Hochberg) to the p-values.
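
The Benjamini-Hochberg correction named in the final step is simple enough to write out. The sketch below is a generic implementation of the step-up procedure with a hypothetical function name. It is independent of ANCOM-BC itself and should give the same adjusted values as R's p.adjust(method = "BH").

```python
def benjamini_hochberg(pvalues):
    """Return BH-adjusted p-values in the same order as the input.

    Each p-value is scaled by m / rank (rank 1 = smallest), then a running
    minimum from the largest rank downward enforces monotonicity.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices, ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, pvalues[index] * m / rank)
        adjusted[index] = running_min
    return adjusted

# Hypothetical per-taxon p-values from a differential abundance test
raw = [0.01, 0.04, 0.03, 0.005]
print([round(q, 4) for q in benjamini_hochberg(raw)])  # [0.02, 0.04, 0.04, 0.02]
```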

Table 2: Comparison of Differential Abundance Methods

| Feature | ANCOM-BC | ANCOM (Original) | DESeq2 (adapted) |

|---|---|---|---|

| Core Model | Linear model with bias correction | Repeated Wilcoxon tests on log-ratios | Negative binomial generalized linear model |

| Output | Adjusted p-values, log-fold changes | W-statistic (frequency of significance) | Adjusted p-values, log2-fold changes |

| Handles Zeros | Yes (part of model) | Yes (via pairwise comparisons) | Yes (via regularization) |

| Key Strength | Quantifies effect size (abundance change) | Minimal assumptions on data distribution | High sensitivity for large effects |

| Primary Limitation | Assumes that most taxa are not differentially abundant | Conservative; no effect size estimate | Designed for RNA-seq; assumes most taxa not differential |

Essential Visualizations

Visualizations are critical for exploring the results of the above tests and the overall community structure.

A. Principal Coordinates Analysis (PCoA) Plot: Visualizes beta-diversity distance matrices (e.g., from Bray-Curtis). Samples colored by experimental group can be overlaid with PERMANOVA results.

B. Taxonomic Bar Plot: Displays the relative abundance of microbial taxa across samples or groups, often at the phylum or genus level.

C. Heatmap with Clustering: Shows the abundance of prevalent taxa across samples, clustered by similarity. Often annotated with sample metadata and differential abundance results.

D. Volcano Plot (for ANCOM-BC/DESeq2): Plots the log-fold change of each taxon against its statistical significance (-log10(p-value)), highlighting significantly differentially abundant taxa.

Diagram 1: Statistical & Visualization Workflow for 16S Data

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Statistical Analysis & Visualization

| Item | Function in Analysis | Example / Note |

|---|---|---|

| R Statistical Software | Primary environment for complex statistical analysis and high-quality graphics. | Use R ≥ 4.2.0. |

| Python (SciPy/NumPy) | Alternative environment for statistical computing and machine learning integration. | Jupyter notebooks facilitate interactive analysis. |

| QIIME 2 | Pipeline that wraps many statistical and visualization tools into a reproducible framework. | Includes q2-diversity for PERMANOVA. |

| vegan R Package | Core package for ecological multivariate analysis. Contains adonis2() for PERMANOVA. | Essential for diversity analyses. |

| ANCOMBC R Package | Implements the ANCOM-BC method for differential abundance testing. | Preferred over original ANCOM for effect sizes. |

| phyloseq R Package | Data structure and toolkit for organizing and visualizing microbiome data. | Integrates seamlessly with vegan and ggplot2. |

| ggplot2 R Package | Declarative system for creating publication-quality visualizations. | The standard for static plots in R. |

| Distance Metrics | Quantify dissimilarity between microbial communities. | Bray-Curtis: Abundance-based. UniFrac: Phylogeny-aware. |

| Multiple Test Correction | Controls for false positives when testing hundreds of taxa. | Benjamini-Hochberg (FDR) is most common. |

| Publication-Color Palettes | Ensures visualizations are accessible to color-blind readers. | Use tools like ColorBrewer or viridis palette. |

Solving Common 16S Analysis Problems: A Troubleshooting Handbook for Reliable Data

Diagnosing and Fixing Poor Sequencing Yield or Low-Quality Reads

The reliable generation of high-quality 16S rRNA gene amplicon sequences is the foundational pillar for downstream microbiome analysis, a critical component in modern drug development and translational research. Within the broader thesis of 16S rRNA sequencing data analysis basics, understanding and rectifying issues of poor yield and low-quality reads is paramount. Compromised data at the sequencing stage biases all subsequent analytical steps, from OTU clustering and taxonomic assignment to differential abundance testing and biomarker discovery, and ultimately jeopardizes the validity of conclusions related to host-microbe interactions, therapeutic efficacy, and diagnostic potential.

Systematic Diagnosis of Common Issues

A structured diagnostic approach is essential to isolate the root cause. The following table summarizes primary failure modes, their symptoms, and initial diagnostic checks.

Table 1: Diagnostic Framework for Poor Yield and Low-Quality Reads

| Problem Category | Key Symptoms | Potential Root Cause | Immediate Diagnostic Check |

|---|---|---|---|

| Low Library Yield | Low concentration post-PCR, faint/no bands on gel. | Inhibitors in genomic DNA, inefficient primer binding, degraded template. | Check gDNA purity (A260/A280, A260/A230), verify primer compatibility, run aliquot on bioanalyzer. |

| Low Cluster Density | Low PF clusters reported by sequencer. | Under-quantified library, poor library diversity, flow cell defect. | Re-quantify library with fluorometry (Qubit), check library size profile, review sequencer dashboard. |

| High % Phasing/Prephasing | Rapid drop in quality scores after read 1. | Poor cluster amplification, damaged flow cell, unbalanced nucleotides. | Review sequencer's cycle-specific intensity plots. |

| High Index Hopping/Multiplexing Issues | High percentage of reads in Undetermined FASTQ. | Low complexity libraries, unbalanced index molarity, cross-contamination. | Demultiplex with strict mismatch settings; inspect index hopping rate. |

| Low Q-Scores | High per-base error rate, poor data quality. | Contaminated reagents, damaged flow cell, suboptimal cluster generation. | Examine inter-cycle metrics, perform control library run. |

| Adapter Dimer Contamination | Sharp peak ~120bp in library profile. | Over-amplification, insufficient cleanup post-PCR. | Analyze library on High Sensitivity Bioanalyzer or TapeStation. |

Detailed Experimental Protocols for Troubleshooting

Protocol: Assessment of Input Genomic DNA Quality

Purpose: To rule out sample-derived issues as the cause of poor library preparation yield.

Materials: Isolated gDNA from samples, spectrophotometer (NanoDrop) or fluorometer (Qubit), gel electrophoresis system.

Procedure:

- Quantification: Measure DNA concentration using both UV absorbance (NanoDrop) and dsDNA-specific fluorescent assay (Qubit). Compare values; significant discrepancies suggest contamination.

- Purity Assessment: Record A260/A280 and A260/A230 ratios. Optimal ranges are 1.8-2.0 and 2.0-2.2, respectively. Low A260/A230 indicates carryover of salts or organic compounds.

- Integrity Check: Perform agarose gel electrophoresis (1% gel). For 16S work, intact gDNA should appear as a high-molecular-weight band. Smearing indicates degradation.

- PCR Inhibition Test: Perform a standardized 16S PCR with a positive control (known good gDNA) spiked with a dilution of the test sample. Compare amplification efficiency.
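
The quantification and purity checks in this protocol lend themselves to a small automated screen. The sketch below applies the ratio ranges stated above. The function name is hypothetical, and the cut-off for a "significant" NanoDrop/Qubit discrepancy (a two-fold difference by default) is an assumption chosen for illustration, since the protocol does not give a number.

```python
def assess_gdna(a260_280, a260_230, nanodrop_ng_ul, qubit_ng_ul, max_fold=2.0):
    """Return a list of warning strings for one gDNA sample (empty if none).

    Ratio ranges (1.8-2.0 and 2.0-2.2) come from the protocol text.
    max_fold is an assumed threshold for NanoDrop/Qubit disagreement.
    """
    warnings = []
    if not 1.8 <= a260_280 <= 2.0:
        warnings.append("A260/A280 outside 1.8-2.0")
    if a260_230 < 2.0:
        warnings.append("A260/A230 below 2.0: possible salt or organic carryover")
    elif a260_230 > 2.2:
        warnings.append("A260/A230 above 2.2")
    if qubit_ng_ul <= 0:
        warnings.append("No dsDNA detected by fluorometry")
    elif nanodrop_ng_ul / qubit_ng_ul > max_fold:
        warnings.append("NanoDrop reads much higher than Qubit: possible contamination")
    return warnings

# Hypothetical measurements for two samples
print(assess_gdna(1.90, 2.10, nanodrop_ng_ul=50.0, qubit_ng_ul=45.0))  # []
for message in assess_gdna(1.60, 1.20, nanodrop_ng_ul=100.0, qubit_ng_ul=20.0):
    print(message)
```

Gel integrity and the spiked PCR inhibition test still require a bench result and are not covered by this screen.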

Protocol: Optimization of Library Preparation PCR

Purpose: To maximize library yield while minimizing chimera formation and dimer artifacts.

Materials: High-fidelity polymerase master mix, validated 16S primer set (e.g., 341F/806R for V3-V4), template gDNA, magnetic bead cleanup system.

Procedure:

- Cycle Titration: Set up identical PCR reactions varying only the cycle number (e.g., 25, 28, 30, 35 cycles). Use a mid-range template amount (1-10ng).

- Post-PCR Analysis: Run 5µL from each reaction on a high-sensitivity bioanalyzer chip.

- Evaluation: Identify the cycle number that produces sufficient yield (e.g., >2nM) without a prominent adapter-dimer peak (~120bp). This is the optimal cycle.

- Cleanup: Perform a double-sided size selection using magnetic beads (e.g., 0.8X and 0.9X bead ratios) to rigorously exclude primer dimers.

Protocol: Quantification and Normalization for Pooling

Purpose: To ensure equimolar pooling of libraries, preventing data skew and low diversity.

Materials: Purified individual libraries, Qubit Fluorometer, High Sensitivity D1000 TapeStation/Bioanalyzer.

Procedure:

- Accurate Quantification: Quantify the purified library using Qubit (dsDNA HS assay). Do not rely solely on NanoDrop.

- Size Determination: Analyze 1µL of library on a High Sensitivity TapeStation to determine the average fragment size.

- Molarity Calculation: Calculate library molarity (nM) using the formula:

Molarity (nM) = (Concentration in ng/µL × 10^6) / (Average Size in bp × 650)

- Pooling: Dilute each library to 4nM based on the calculated molarity, then combine equal volumes for the final pool. Re-quantify the pool before loading onto the sequencer.
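
The molarity formula and the 4 nM dilution step can be checked with a short calculation. The sketch below uses hypothetical function names and an assumed final volume of 20 µL per diluted library. The dilution uses the standard C1·V1 = C2·V2 relationship.

```python
def library_molarity_nm(conc_ng_per_ul, avg_size_bp):
    """Molarity (nM) = (ng/uL * 10^6) / (average size in bp * 650)."""
    return conc_ng_per_ul * 1e6 / (avg_size_bp * 650)

def dilution_volumes(stock_nm, target_nm=4.0, final_volume_ul=20.0):
    """Return (library uL, diluent uL) to reach target_nm in final_volume_ul.

    final_volume_ul is an assumed working volume, not part of the protocol.
    """
    if stock_nm < target_nm:
        raise ValueError("Library is already below the target molarity")
    library_ul = target_nm * final_volume_ul / stock_nm  # C1*V1 = C2*V2
    return library_ul, final_volume_ul - library_ul

# Hypothetical library: 2.6 ng/uL (Qubit), 500 bp average size (TapeStation)
molarity = library_molarity_nm(2.6, 500)
library_ul, diluent_ul = dilution_volumes(molarity)
print(f"{molarity:.2f} nM -> mix {library_ul:.1f} uL library + {diluent_ul:.1f} uL diluent")
```

Libraries that fall below 4 nM raise an error here rather than being silently pooled, since they would need to be concentrated or re-prepared.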

Visualizing the Diagnostic Workflow

Diagram: Diagnostic Decision Tree for Sequencing Issues

The Scientist's Toolkit: Key Reagent Solutions