Power Analysis and Sample Size Calculation in Microbiome Studies: A Practical Guide for Researchers

This article provides a comprehensive guide for researchers and drug development professionals on performing statistically sound power and sample size calculations for microbiome studies.

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on performing statistically sound power and sample size calculations for microbiome studies. Covering foundational concepts, specific methodologies for alpha and beta diversity analysis, practical software tools, and strategies for optimizing study design, this resource synthesizes current best practices. It addresses common pitfalls, compares parametric and non-parametric approaches, and demonstrates how to leverage large public databases for effect size estimation to ensure studies are adequately powered to detect biologically meaningful effects, thereby improving the reliability and reproducibility of microbiome research.

Why Power Matters: Core Concepts for Robust Microbiome Study Design

Defining Power, Sample Size, and Error Rates (Type I and II) in a Microbiome Context

Frequently Asked Questions (FAQs)

1. What are Type I and Type II errors in the context of microbiome studies?

In hypothesis testing for microbiome research, you decide between a null hypothesis (no difference) and an alternative hypothesis based on your data. A Type I error (false positive) occurs when you incorrectly reject the null hypothesis, concluding that a taxon is differentially abundant or that community structures differ when they do not. The probability of committing a Type I error is denoted by α (alpha) and is typically set at 0.05 [1]. A Type II error (false negative) occurs when you fail to reject a false null hypothesis, missing a true biological difference. The probability of a Type II error is denoted by β (beta). The power of a statistical test, defined as 1 - β, is the probability of correctly rejecting the null hypothesis when it is false [1]. Most studies aim for a power of 0.8 (or 80%).

2. Why is power analysis particularly challenging for microbiome data?

Microbiome data possess intrinsic characteristics that complicate statistical analysis and power calculation [2]. These challenges are summarized in the table below.

Table 1: Key Challenges in Microbiome Power Analysis

| Challenge | Description |
|---|---|
| Zero Inflation | A large proportion (often 80-95%) of data points are zeros, arising from both biological absence and technical limitations [3]. |
| Compositionality | Sequencing data provide only relative abundances, not absolute counts, making relationships between taxa dependent [4] [2]. |
| High Dimensionality | The number of taxa (p) is much larger than the number of samples (n), a scenario known as "p >> n" [2]. |
| Overdispersion | The variance in the data is often much higher than the mean, violating assumptions of standard statistical models [3]. |
| Metric Sensitivity | The choice of alpha or beta diversity metric can significantly influence the resulting statistical power and sample size estimates [1]. |
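To make the zero inflation and overdispersion rows concrete, the sketch below computes the fraction of zeros in a count table and a per-taxon variance-to-mean ratio. The toy table and the function names are hypothetical and are not taken from any of the cited tools; it is only a quick diagnostic, not a formal test.

```python
from statistics import mean, variance

def zero_fraction(table):
    """Fraction of entries equal to zero in a samples x taxa count table."""
    cells = [count for row in table for count in row]
    return sum(1 for count in cells if count == 0) / len(cells)

def dispersion_ratios(table):
    """Variance-to-mean ratio per taxon (column).

    Values well above 1 suggest overdispersion relative to a Poisson model.
    Taxa with a zero mean are reported as None.
    """
    ratios = []
    for column in zip(*table):
        m = mean(column)
        ratios.append(variance(column) / m if m > 0 else None)
    return ratios

# Hypothetical toy table: 4 samples (rows) x 3 taxa (columns)
toy_counts = [
    [0, 10, 0],
    [0, 50, 3],
    [4, 0, 0],
    [0, 200, 0],
]
print("zero fraction:", zero_fraction(toy_counts))
print("variance/mean per taxon:", dispersion_ratios(toy_counts))
```

On real data the same two numbers are a reasonable first check before choosing a model for power simulation.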

3. How does the choice of diversity metric affect my power calculations?

The metric you choose to quantify differences directly influences the effect size and, consequently, the required sample size.

- Alpha Diversity metrics (within-sample diversity), such as Shannon index or Faith's PD, summarize the structure of a microbial community. When using these, the problem becomes a univariate test (e.g., t-test, ANOVA), and effect sizes like Cohen's d (for two groups) or Cohen's f (for multiple groups) can be used for power analysis [1] [5].

- Beta Diversity metrics (between-sample diversity), such as Bray-Curtis or UniFrac, quantify how different microbial communities are from each other. These require multivariate statistical tests like PERMANOVA [6]. For power analysis, the effect size is often quantified by the omega-squared (ω²) statistic, which measures the proportion of variance explained by the grouping factor [6]. Studies have shown that beta diversity metrics are often more sensitive for detecting differences between groups than alpha diversity metrics [1].

Table 2: Common Diversity Metrics and Their Use in Power Analysis

| Diversity Type | Common Metrics | Typical Statistical Test | Relevant Effect Size |
|---|---|---|---|
| Alpha Diversity | Shannon, Faith's PD, Observed ASVs [1] | t-test, ANOVA | Cohen's d, Cohen's f [5] |
| Beta Diversity | Bray-Curtis, UniFrac (weighted/unweighted), Jaccard [6] [1] | PERMANOVA [6] | Omega-squared (ω²) [6] |

4. I am planning to use differential abundance (DA) testing. What should I know about power?

Numerous DA methods exist, and they can produce vastly different results from the same dataset [4]. Benchmarking studies have found that methods like ALDEx2 and ANCOM-II tend to be more conservative but produce more consistent results, while methods like limma-voom and Wilcoxon on CLR-transformed data may identify more significant taxa but can have inflated false discovery rates in some situations [4]. The presence of group-wise structured zeros (a taxon is absent in all samples of one group but present in the other) poses a major challenge, as many standard DA methods fail or lose power with such data [3]. It is recommended to use a consensus approach based on multiple DA methods to ensure robust biological interpretations [4].

Troubleshooting Guides

Problem: Inconsistent or Underpowered Results in Differential Abundance Analysis

Possible Causes and Solutions:

- Cause: The dataset contains a high proportion of zeros, reducing statistical power.

  - Solution: Consider using DA methods designed for zero-inflated data, such as the weighted versions of common tools (DESeq2-ZINBWaVE, edgeR-ZINBWaVE) [3]. Implement a prevalence filter (e.g., removing taxa present in less than 10% of samples) to reduce noise, but ensure this filtering is independent of the test statistic [4].

- Cause: Group-wise structured zeros are causing model failures.

  - Solution: For taxa that are completely absent in one group, a method like DESeq2 with its built-in penalized likelihood estimation can help provide finite parameter estimates [3]. Some researchers manually flag these taxa for separate consideration.

- Cause: The chosen DA method is not appropriate for your data's distribution.

  - Solution: Do not rely on a single DA method. Run multiple methods from different families (e.g., a count-based method like DESeq2, a compositionally-aware method like ALDEx2, and a non-parametric method) and look for a consensus in the results [4].
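Two of the suggestions above, the prevalence filter and the consensus across methods, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names are hypothetical, the taxon lists are made up, and the significant-taxon lists are assumed to have been exported from separate runs of each DA tool.

```python
from collections import Counter

def prevalence_filter(table, taxa, min_prevalence=0.10):
    """Keep taxa detected (count > 0) in at least `min_prevalence` of samples.

    Prevalence is computed over all samples without looking at group labels,
    so the filter does not depend on the test statistic.
    """
    n_samples = len(table)
    kept = []
    for j, taxon in enumerate(taxa):
        present = sum(1 for row in table if row[j] > 0)
        if present / n_samples >= min_prevalence:
            kept.append(taxon)
    return kept

def consensus_taxa(results_by_method, min_methods=2):
    """Return taxa called significant by at least `min_methods` methods."""
    votes = Counter(t for hits in results_by_method.values() for t in set(hits))
    return sorted(t for t, n in votes.items() if n >= min_methods)

# Hypothetical significant-taxon lists from three separate analyses
calls = {
    "DESeq2": ["Bacteroides", "Prevotella", "Roseburia"],
    "ALDEx2": ["Bacteroides"],
    "Wilcoxon": ["Bacteroides", "Roseburia", "Dialister"],
}
print(consensus_taxa(calls, min_methods=2))
```

The `min_methods` threshold is a design choice: requiring agreement from all methods is the most conservative option, while a majority vote keeps more candidates for follow-up.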

Problem: How to Determine Sample Size for a New Microbiome Study

Solution: Follow this step-by-step workflow for power and sample size estimation.

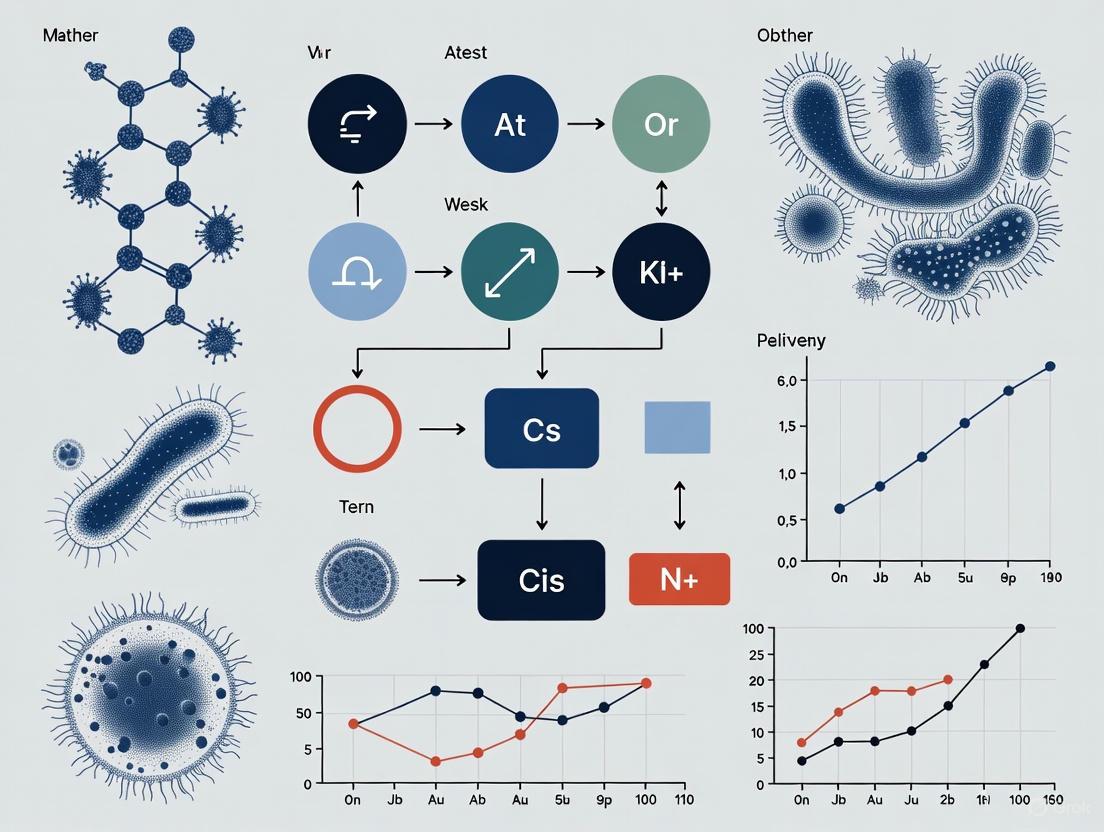

Diagram 1: Sample size estimation workflow.

Detailed Protocol for Sample Size Estimation:

- Define Your Hypothesis and Primary Outcome: Decide whether you are testing for a difference in alpha diversity, beta diversity, or specific differentially abundant taxa. This determines the statistical test and the required effect size [6] [1].

- Obtain an Estimate of the Effect Size:

  - Ideal: Use data from a pilot study. Calculate the relevant effect size (e.g., Cohen's d for alpha diversity, ω² for beta diversity) from your own preliminary data [5].

  - Alternative: Use existing large public datasets (e.g., American Gut Project, FINRISK) and software like Evident to mine for effect sizes of metadata variables similar to your grouping factor of interest [5].

- Set Statistical Parameters: Define your acceptable Type I error rate (α, typically 0.05) and desired statistical power (1-β, typically 0.8 or 0.9) [1].

- Perform the Calculation:

  - For univariate outcomes (e.g., Alpha Diversity): Use standard power analysis functions (e.g., in R or Python) with the effect size (Cohen's d/f), α, and power to solve for the required sample size [5].

  - For multivariate outcomes (e.g., Beta Diversity with PERMANOVA): Use simulation-based tools. The micropower R package, for example, allows you to simulate distance matrices based on pre-specified population parameters and effect sizes to estimate power for a given sample size [6].

- Iterate and Report: Calculate sample sizes for a range of plausible effect sizes to create a power curve. In your publications, clearly report the parameters used for your power analysis to enhance reproducibility.
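For the univariate case in the protocol above, the calculation can be sketched with the standard normal approximation for a two-sided, two-sample comparison of means, n per group ≈ 2(z₁₋α/₂ + z₁₋β)² / d². The function names are illustrative, and the approximation gives slightly smaller numbers than an exact t-test calculation, so treat the output as a lower bound.

```python
from math import ceil, sqrt
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate samples per group to detect Cohen's d (two-sided test)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)

def approx_power(d, n, alpha=0.05):
    """Approximate power for n samples per group (normal approximation)."""
    z = NormalDist()
    return z.cdf(d * sqrt(n / 2) - z.inv_cdf(1 - alpha / 2))

# A small power table over a range of plausible effect sizes
for d in (0.2, 0.5, 0.8):
    n = n_per_group(d)
    print(f"d={d}: about {n} per group, power ~ {approx_power(d, n):.3f}")
```

Evaluating `approx_power` over a grid of `n` values for each plausible `d` yields the power curve recommended in the last step.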

Table 3: Key Software and Analytical Tools for Power Analysis

| Tool Name | Function | Application Context |
|---|---|---|
| Evident [7] [5] | Calculates effect sizes for multiple metadata categories and performs power analysis for both univariate and multivariate data. | Ideal for exploring large datasets to plan new studies; integrates with QIIME 2. |
| micropower [6] [8] | Simulation-based power estimation for studies analyzed with pairwise distances (e.g., UniFrac, Jaccard) and PERMANOVA. | Essential for power analysis in beta diversity-based study designs. |
| DESeq2 [3] | A popular method for differential abundance testing that uses a negative binomial model. | The standard for count-based DA analysis; can handle some group-wise structured zeros. |
| ALDEx2 [4] | A compositional data analysis tool that uses a centered log-ratio (CLR) transformation. | Recommended for conservative and reproducible DA results; handles compositionality. |
| ZINB-WaVE [3] | A method that provides observation-level weights to account for zero inflation. | Can be used to create weighted versions of DESeq2, edgeR, and limma-voom to improve their performance on sparse data. |

Understanding the Central Role of Effect Size in Determining Statistical Power

Frequently Asked Questions (FAQs)

1. What is effect size and why is it critical for power analysis in microbiome studies?

Effect size quantifies the magnitude of a biological effect or the strength of a relationship between variables. In power analysis, it is the key parameter that, along with sample size, significance level (α, usually 0.05), and desired statistical power (1-β, often 0.8 or 80%), determines whether a study can reliably detect a true effect [9] [1]. For microbiome studies, calculating power is complex because common parameters like alpha and beta diversity are nonlinear functions of microbial relative abundances, and pilot studies often yield biased estimates due to data sparsity and numerous zero counts [10]. A larger effect size reduces the number of samples needed for high statistical power [10].

2. How do I determine an appropriate effect size for my microbiome study?

Using large, existing microbiome databases is a powerful strategy. Tools like Evident can mine databases (e.g., American Gut Project, FINRISK, TEDDY) to derive effect sizes for your specific metadata variables (e.g., mode of birth, antibiotic use) and microbiome metrics (e.g., α-diversity) [10]. Alternatively, you can use estimates from comparable published studies or meta-analyses, or conduct a small pilot study [11]. The effect size should represent a biologically meaningful change, such as a predetermined difference in Shannon entropy or a specific log-ratio change in taxon abundance [1] [11].

3. My pilot data has many zeros and seems unreliable. How can I get a stable effect size estimate?

This is a common challenge. Small pilot studies (N < 100) often produce unstable effect size estimates due to the sparse and zero-inflated nature of microbiome count data [10]. The recommended solution is to leverage large, public microbiome datasets that contain thousands of samples and hundreds of metadata variables [10]. The large sample sizes in these resources provide stable, reliable estimates of population parameters (mean and variance) for your metric of interest, which are necessary for robust effect size calculation [10].

4. How does the choice of diversity metric influence my power analysis?

The choice of alpha or beta diversity metric significantly impacts the calculated effect size and subsequent sample size requirements [1]. Different metrics have varying sensitivities to detect differences. For example, beta diversity metrics like Bray-Curtis are often more sensitive to group differences than alpha diversity metrics [1]. Furthermore, the temporal stability (and thus statistical power) of these metrics varies; for instance, intraclass correlation coefficients (ICCs) for fecal microbiome diversity over six months are generally low (ICC < 0.6), indicating substantial variability that requires larger sample sizes [12]. You should base your power analysis on the specific metric that aligns with your primary research question.

5. For a case-control study, how many samples do I typically need?

Required sample sizes can be very large, especially for detecting associations with specific microbial species, genes, or pathways. One study estimated that for an odds ratio of 1.5 per standard deviation increase, a 1:1 case-control study requires approximately [12]:

- 3,527 cases for high-prevalence species

- 15,102 cases for low-prevalence species

Using multiple specimens per participant or a higher control-to-case ratio (e.g., three controls per case) can substantially reduce the number of required cases [12].

Troubleshooting Guides

Problem: Inconsistent or Underpowered Results in Microbiome Analysis

| Symptom | Potential Cause | Solution |
|---|---|---|
| Failing to find significant differences in microbiome studies, despite a strong biological hypothesis. | 1. Effect size was overestimated. 2. Within-group variance was underestimated. 3. An inappropriate or low-sensitivity diversity metric was used. | 1. Use the Evident tool with large public databases to obtain realistic effect sizes [10]. 2. Use variance estimates from large-scale studies or meta-analyses that report ICCs [12]. 3. Consider using multiple diversity metrics, with a pre-specified primary metric, and report results for all [1]. |
| A collaborator or reviewer questions your sample size justification. | A priori power analysis was not performed, or the parameters used were not justified. | Perform and document a power analysis before starting the experiment. Justify your chosen effect size with literature, pilot data, or database mining. Specify the alpha, power, and statistical test [9] [11]. |
| Different microbial signatures are identified every time the analysis is run with slight changes. | The study is underpowered for detecting stable associations, especially with rare taxa. | Increase sample size based on a power analysis for rare features. For meta-analyses, use compositionally-aware methods like Melody that are designed to identify stable, generalizable signatures [13]. |

Essential Experimental Protocols

Protocol 1: Using the Evident Tool for Effect Size and Power Calculation

Purpose: To derive data-driven effect sizes from large microbiome databases for robust sample size calculation.

Steps:

- Define Parameters: Identify your metadata variable of interest (e.g., binary: disease vs. healthy) and your primary microbiome outcome metric (e.g., α-diversity like Shannon entropy, or β-diversity) [10].

- Prepare Input Data: Format your data or select the appropriate large-scale database within Evident. The input consists of a sample metadata file and a data file of interest (e.g., a vector of α-diversity values for each sample) [10].

- Calculate Effect Size: Evident computes the effect size by comparing the means of the two groups. For a binary category, it uses Cohen's d: \( d = \frac{|\mu_1 - \mu_2|}{\sigma_{\text{pooled}}} \), where \( \mu_1 \) and \( \mu_2 \) are the mean diversity values of the two groups and \( \sigma_{\text{pooled}} \) is the pooled standard deviation [10] [1].

- Perform Power Analysis: Use the calculated effect size in a power analysis simulation. Evident can dynamically generate power curves, allowing you to identify the "elbow" or optimal sample size to achieve your desired statistical power (e.g., 80%) [10].
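The effect size step can be checked by hand. The sketch below computes Cohen's d with the conventional pooled standard deviation, weighted by degrees of freedom. It is an independent illustration, not Evident's code: the Shannon values are invented, and Evident's internal pooling formula may differ in detail.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(group1, group2):
    """Cohen's d = |mean1 - mean2| / pooled SD (sample variances, df-weighted)."""
    n1, n2 = len(group1), len(group2)
    pooled_var = (
        (n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)
    ) / (n1 + n2 - 2)
    return abs(mean(group1) - mean(group2)) / sqrt(pooled_var)

# Hypothetical Shannon entropy values for two groups
healthy = [3.9, 4.2, 4.0, 4.4, 4.1]
disease = [3.5, 3.8, 3.6, 4.0, 3.7]
print(f"Cohen's d = {cohens_d(healthy, disease):.2f}")
```

With only five samples per group, an estimate like this is very unstable, which is the reason the protocol recommends deriving d from large databases.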

Protocol 2: Power Analysis for a Case-Control Study Using Temporal Stability Data

Purpose: To calculate sample size for a case-control study accounting for the temporal variability of the human microbiome.

Steps:

- Assess Temporal Variability: From a longitudinal pilot study, calculate the Intraclass Correlation Coefficient (ICC) for your microbiome metric of interest. The ICC quantifies how much of the total variance is due to between-subject differences versus within-subject fluctuations over time. A low ICC (<0.5) indicates poor reliability and necessitates a larger sample size [12].

- Define Statistical Parameters: Set the odds ratio (OR) you wish to detect, the significance level (α), the desired statistical power (1-β), and the ratio of controls to cases [12].

- Incorporate Sampling Strategy: The model should account for the number of fecal specimens collected per participant to better estimate the "long-term" average microbiome exposure. Using multiple samples per subject can significantly reduce the required number of cases [12].

- Calculate Sample Size: Using a logistic regression model that incorporates the ICC and the number of samples per subject, estimate the total number of cases needed for your study [12].
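The qualitative effect of the ICC and of repeated specimens can be illustrated with a rough calculation. The sketch below is not the model used in the cited study and will not reproduce its case counts. It rests on three assumptions: a standard approximate sample size formula for logistic regression on a standardized continuous exposure, the Spearman-Brown formula for the reliability of the mean of k specimens, and the common rule that measurement error inflates the required sample size by 1/reliability. All function names are hypothetical.

```python
from math import ceil, log
from statistics import NormalDist

def reliability_of_mean(icc, k):
    """Spearman-Brown reliability of the average of k specimens per participant."""
    return k * icc / (1 + (k - 1) * icc)

def approx_cases(odds_ratio, icc, k=1, controls_per_case=1,
                 alpha=0.05, power=0.80):
    """Rough number of cases needed (illustrative approximation only).

    n_total = (z_{1-alpha/2} + z_{power})^2 / (p * (1 - p) * R * ln(OR)^2),
    where p is the fraction of cases and R is the reliability of the
    averaged exposure measurement.
    """
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    case_fraction = 1 / (1 + controls_per_case)
    r = reliability_of_mean(icc, k)
    n_total = z_sum ** 2 / (
        case_fraction * (1 - case_fraction) * r * log(odds_ratio) ** 2
    )
    return ceil(n_total * case_fraction)

# Compare sampling strategies for OR = 1.5 and a hypothetical ICC of 0.3
for k in (1, 2, 3):
    print(f"{k} specimen(s) per participant: ~{approx_cases(1.5, 0.3, k=k)} cases")
print("3 controls per case:", approx_cases(1.5, 0.3, controls_per_case=3), "cases")
```

No multiple-testing correction is applied here; testing many taxa at a stricter α would push the numbers up considerably.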

The Scientist's Toolkit: Key Research Reagent Solutions

| Tool / Resource | Function | Relevance to Power Analysis |
|---|---|---|
| Evident [10] | A software tool (Python package/QIIME 2 plugin) that uses large databases to calculate effect sizes for dozens of metadata categories. | Directly addresses the core challenge of determining a realistic effect size for planning future studies via power analysis. |
| Large Public Databases (e.g., American Gut Project, FINRISK, TEDDY) [10] | Provide microbiome data from thousands of samples, enabling stable estimation of population parameters (mean and variance). | Serve as the foundational data source for tools like Evident to derive reliable effect sizes, overcoming the limitations of small pilot studies. |
| Melody [13] | A summary-data meta-analysis framework for discovering generalizable microbial signatures, accounting for compositional data structure. | Helps validate and identify robust signatures from multiple studies, informing effect size expectations for future research. |
| Intraclass Correlation Coefficient (ICC) [12] | A metric to quantify the temporal stability of a microbiome feature within individuals over time. | Critical for power calculations in longitudinal or nested case-control studies, as low ICC requires larger sample sizes. |
| MMUPHin [14] [13] | An R package that provides methods for batch effect correction and meta-analysis of microbiome data. | Enables the combined analysis of multiple cohorts to increase sample size and power for discovering robust associations. |

Frequently Asked Questions

FAQ 1: What are the core alpha diversity metrics I should report, and what does each one tell me?

Alpha diversity metrics describe the within-sample diversity and capture different aspects of the microbial community. Reporting a set of metrics that cover richness, evenness, and phylogenetics is recommended for a comprehensive analysis [15]. The table below summarizes key metrics and their interpretations.

Table: Essential Alpha Diversity Metrics and Their Interpretations

| Metric | Category | What It Measures | Biological Interpretation |
|---|---|---|---|
| Observed Features [16] | Richness | The simple count of unique Amplicon Sequence Variants (ASVs) or Operational Taxonomic Units (OTUs). | The total number of distinct taxa detected in a sample. |
| Chao1 [17] [15] | Richness | Estimates the true species richness by accounting for undetected rare species, using singletons and doubletons. | An estimate of the total taxonomic richness, including rare species that might have been missed by sequencing. |
| Shannon Index [17] | Information | Combines richness and the evenness of species abundances. | Higher values indicate a more diverse and balanced community. Sensitive to changes in rare taxa. |
| Simpson Index [17] [15] | Dominance | Measures the probability that two randomly selected individuals belong to the same species. Emphasizes dominant species. | In this dominance form (D), higher values indicate lower diversity, as one or a few species dominate the community. Many tools report 1 - D instead, where higher values indicate higher diversity. |
| Faith's PD [16] [15] | Phylogenetics | The sum of the branch lengths of the phylogenetic tree spanning all taxa in a sample. | Measures the amount of evolutionary history present in a sample. Incorporates phylogenetic relationships between taxa. |
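The non-phylogenetic metrics in the table are simple enough to compute directly from a vector of per-taxon counts. The sketch below is a plain-Python illustration with hypothetical function names. It uses the bias-corrected form of Chao1 and natural logarithms for Shannon; some tools use base 2, so absolute values can differ. Faith's PD is omitted because it needs a phylogenetic tree.

```python
from math import log

def observed_features(counts):
    """Number of taxa with a non-zero count."""
    return sum(1 for c in counts if c > 0)

def chao1(counts):
    """Bias-corrected Chao1: S_obs + F1 * (F1 - 1) / (2 * (F2 + 1)),
    where F1 and F2 are the numbers of singletons and doubletons."""
    f1 = sum(1 for c in counts if c == 1)
    f2 = sum(1 for c in counts if c == 2)
    return observed_features(counts) + f1 * (f1 - 1) / (2 * (f2 + 1))

def shannon(counts):
    """Shannon entropy (natural log) of the relative abundances."""
    total = sum(counts)
    return -sum((c / total) * log(c / total) for c in counts if c > 0)

def simpson_dominance(counts):
    """Simpson's D: probability that two random reads come from the same taxon."""
    total = sum(counts)
    return sum((c / total) ** 2 for c in counts)

# Hypothetical count vector for a single sample
sample = [120, 30, 5, 2, 1, 1, 0]
print(observed_features(sample), chao1(sample))
print(round(shannon(sample), 3), round(simpson_dominance(sample), 3))
```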

FAQ 2: Which beta diversity metric should I choose for my study?

The choice of beta diversity metric depends on whether you want to focus on microbial abundances and if you wish to incorporate phylogenetic information. The structure of your data influences which metric is most sensitive for detecting differences [1].

Table: Comparison of Common Beta Diversity Metrics

| Metric | Incorporates Abundance? | Incorporates Phylogeny? | Best Use Case |
|---|---|---|---|
| Bray-Curtis [1] | Yes (Quantitative) | No | General-purpose dissimilarity; sensitive to changes in abundant taxa. Often identified as one of the most sensitive metrics for observing differences between groups [1]. |
| Jaccard [1] | No (Presence/Absence) | No | Focuses on shared and unique taxa, ignoring their abundance. |
| Weighted UniFrac [1] | Yes (Quantitative) | Yes | Measures community dissimilarity by considering the relative abundance of taxa and their evolutionary distances. |
| Unweighted UniFrac [1] | No (Presence/Absence) | Yes | Measures community dissimilarity based on the presence/absence of taxa and their evolutionary distances. |
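The difference between a quantitative and a presence/absence metric is easy to see on a toy pair of samples. The sketch below implements Bray-Curtis dissimilarity and Jaccard distance in plain Python; the function names and abundance vectors are illustrative. The UniFrac variants are not shown because they require a phylogenetic tree.

```python
def bray_curtis(u, v):
    """Bray-Curtis dissimilarity: sum|u_i - v_i| / sum(u_i + v_i)."""
    numerator = sum(abs(a - b) for a, b in zip(u, v))
    denominator = sum(a + b for a, b in zip(u, v))
    return numerator / denominator

def jaccard_distance(u, v):
    """Jaccard distance on presence/absence: 1 - |shared taxa| / |all taxa seen|."""
    present_u = {i for i, a in enumerate(u) if a > 0}
    present_v = {i for i, b in enumerate(v) if b > 0}
    return 1 - len(present_u & present_v) / len(present_u | present_v)

# Two hypothetical samples with the same taxa present but shifted dominance
sample_a = [90, 5, 5, 0]
sample_b = [10, 45, 45, 0]
print("Bray-Curtis:", bray_curtis(sample_a, sample_b))
print("Jaccard:", jaccard_distance(sample_a, sample_b))
```

Here the taxon sets are identical, so Jaccard sees no difference, while Bray-Curtis registers the shift in dominant taxa. Both functions assume at least one non-zero entry across the pair.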

FAQ 3: How do I visually represent taxonomic abundance data?

The best plot for taxonomic abundance depends on whether you are comparing individual samples or groups.

- For comparing groups: A bar plot is the most common and easily interpretable method. It shows the mean or median relative abundance of taxa across samples within a group [18] [19]. For a more dynamic view at the group level, a bubble plot can be used, where bubble size represents abundance [18].

- For comparing all samples: A heatmap is more suitable. It displays the abundance of taxa (rows) in each sample (column) using a color scale and is often combined with clustering to show sample similarities [18].

FAQ 4: What are the critical considerations for low-biomass microbiome studies?

Low-biomass samples (e.g., from human tissue, blood, or clean environments) are highly susceptible to contamination, which can lead to spurious results. Key considerations include [20]:

- Stringent Controls: Actively incorporate negative controls at the DNA extraction and sequencing stages. These are crucial for identifying contaminating DNA.

- Rigorous Decontamination: Decontaminate equipment and workspaces with solutions that destroy DNA (e.g., bleach, UV irradiation), not just ethanol [20].

- Use of PPE: Use personal protective equipment (PPE) like gloves, masks, and clean suits to minimize contamination from researchers [20].

- Post-Hoc Decontamination: Use bioinformatic tools to identify and remove contaminants identified in your negative controls from your sample data.

FAQ 5: How is power analysis different for microbiome data?

Standard sample size calculations are often not directly applicable to microbiome data due to its high dimensionality, sparsity, and non-normal distribution. Hypothesis tests for beta diversity, for example, rely on permutation-based methods (e.g., PERMANOVA) rather than traditional parametric tests [1]. Therefore, performing a priori power and sample size calculations that consider the specific features of microbiome datasets is crucial for obtaining valid and reliable conclusions [21].

Experimental Protocols & Workflows

Protocol 1: Standard Workflow for 16S rRNA Data Analysis This protocol outlines the key steps from raw sequences to diversity analysis, which is fundamental for calculating alpha and beta diversity.

Protocol 2: A Framework for Sample Size Determination This protocol describes the iterative process for determining an adequate sample size, a critical step often overlooked in microbiome studies [21].

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Materials and Tools for Microbiome Diversity Analysis

| Item | Function | Example Tools / Kits |
|---|---|---|
| DNA Extraction Kit | Isolates total genomic DNA from samples. Critical for low-biomass work: use kits certified DNA-free. | Various commercially available kits, preferably with pre-treatment to remove contaminating DNA [20]. |
| 16S rRNA Primer Set | Amplifies the target hypervariable region for amplicon sequencing. | 515F/806R (V4), 27F/338R (V1-V2); choice affects richness estimates [15]. |
| Bioinformatics Pipeline | Processes raw sequences into analyzed data: quality control, denoising, taxonomy assignment. | QIIME 2 [16], mothur. |
| Statistical Software | Performs diversity calculations, statistical testing, and data visualization. | R (with packages like phyloseq, vegan), Python. |
| Reference Database | Provides curated sequences for taxonomic classification of ASVs. | SILVA, Greengenes [16], NCBI RefSeq. |

Data Interpretation and Troubleshooting

Troubleshooting Guide: Common Issues with Diversity Metrics

| Problem | Potential Cause | Solution |
|---|---|---|
| Inconsistent results between alpha diversity metrics. | Different metrics measure different aspects (richness vs. evenness) [15]. | Report a suite of metrics from different categories (see FAQ 1) rather than relying on a single one. |
| No significant difference found in beta diversity. | The study may be underpowered [1]. | Perform a power analysis on a pilot dataset before the main study. Consider if the chosen metric (e.g., Bray-Curtis vs. UniFrac) is appropriate for your biological question [1]. |
| Taxonomic abundance bar plots are dominated by rare taxa, making patterns hard to see. | Plotting all taxa, including very low-abundance ones, leads to visual clutter [18]. | Aggregate rare taxa into an "Other" category or focus visualization on the top N most abundant taxa. |
| Unexpected taxa appear in negative controls or low-biomass samples. | Contamination from reagents, kits, or the laboratory environment [20]. | Include and sequence negative controls. Use bioinformatic contamination-removal tools and report all contamination control steps taken [20]. |
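For the bar plot issue in the table, collapsing everything outside the top N taxa into an "Other" category is a small data-preparation step. A minimal sketch, assuming a dictionary of mean relative abundances per taxon (the function name and the values are hypothetical):

```python
def collapse_to_top_n(mean_abundance, n=10, other_label="Other"):
    """Keep the n most abundant taxa and sum the rest into one category."""
    ranked = sorted(mean_abundance.items(), key=lambda kv: kv[1], reverse=True)
    collapsed = dict(ranked[:n])
    remainder = sum(value for _, value in ranked[n:])
    if remainder > 0:
        collapsed[other_label] = remainder
    return collapsed

# Hypothetical mean relative abundances for one group
group_means = {
    "Bacteroides": 0.50,
    "Faecalibacterium": 0.30,
    "Roseburia": 0.15,
    "Dialister": 0.05,
}
print(collapse_to_top_n(group_means, n=2))
```

The collapsed dictionary can then be passed to whichever plotting library is in use.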

The Critical Impact of Microbial Community Variability on Power Calculations

Frequently Asked Questions (FAQs)

FAQ 1: What are the most critical sources of variability I must account for in microbiome power calculations?

Microbiome data has several intrinsic properties that directly impact variability and, consequently, power. The most critical sources to consider are:

- Within-Group Heterogeneity: The natural variation in microbial community composition between different subjects in the same treatment or exposure group. This is a primary driver of the within-group sum of squares in PERMANOVA and must be accurately modeled [6].

- Compositionality and Sparsity: Microbiome data consists of counts that are relative to each other (compositional) and contain many zeros (sparse) due to many low-abundance or rare taxa not being detected [22] [23]. This structure violates assumptions of many standard statistical tests.

- Sampling Depth: The total number of sequences obtained per sample influences the observed diversity. A lower sequencing depth may fail to detect rare species, leading to an underestimation of true diversity and inflated perceived differences between samples [24].

FAQ 2: How does the choice of beta-diversity metric influence my power analysis?

The choice of a beta-diversity metric (e.g., UniFrac, Jaccard, Bray-Curtis) directly shapes your power analysis because each metric captures different aspects of community difference [6] [23].

- Phylogenetic vs. Non-Phylogenetic: Weighted and unweighted UniFrac incorporate phylogenetic relationships between taxa, while Jaccard and Bray-Curtis do not [6]. A metric sensitive to the specific community aspects your intervention affects will increase power.

- Abundance-Weighting: Unweighted metrics (presence/absence) are sensitive to rare taxa, while weighted metrics also consider taxon abundance [6]. The expected nature of the change in your study (loss of rare species vs. shift in dominant species) should guide your choice. Power is reduced if the chosen metric is unresponsive to the actual biological effect.

FAQ 3: I have pilot data. What is the most robust method for performing a power analysis for a PERMANOVA test?

A simulation-based approach using your pilot data is widely recommended for its robustness [6] [23]. The general workflow involves:

- Characterize Pilot Data: Use your pilot data to estimate key population parameters, such as the within-group pairwise distance distribution and the expected effect size (e.g., omega-squared, ω²) [6].

- Simulate Distance Matrices: Generate simulated distance matrices that reflect the pre-specified within-group variability and incorporate the effect size of the grouping factor you wish to detect [6].

- Run PERMANOVA on Simulations: Repeatedly perform PERMANOVA tests on the simulated distance matrices for various sample sizes.

- Estimate Power: Calculate power as the proportion of these tests that correctly reject the null hypothesis at your chosen significance level (e.g., α = 0.05) [6].

FAQ 4: When should I use rarefaction, and how does it affect power?

Rarefaction (subsampling to an even sequencing depth) is a common method to correct for uneven library sizes before diversity analysis [24].

- When to Use: It is beneficial when library sizes vary greatly (e.g., more than a ~10x difference) [24]. Using rarefaction ensures that differences in diversity are not artifacts of sequencing effort.

- Impact on Power: Rarefaction necessarily discards data, which can reduce statistical power. The key is to choose a rarefaction depth that retains as many samples as possible while ensuring diversity estimates have stabilized, as shown by an alpha rarefaction curve [24]. You must balance the loss of samples against the benefit of controlled variation.
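The mechanics of rarefaction, and why it costs both reads and samples, can be shown with a short sketch. It subsamples reads without replacement to a fixed depth and drops samples that fall below that depth. The function name, the counts, and the chosen depth are illustrative; production pipelines use optimized implementations.

```python
import random

def rarefy(counts, depth, rng=None):
    """Subsample `depth` reads without replacement from a per-taxon count vector.

    Returns None when the sample has fewer than `depth` reads, meaning the
    sample would be dropped from the analysis.
    """
    rng = rng or random.Random()
    if sum(counts) < depth:
        return None
    # Expand counts into one entry per read, labelled by taxon index
    reads = [taxon for taxon, c in enumerate(counts) for _ in range(c)]
    rarefied = [0] * len(counts)
    for taxon in rng.sample(reads, depth):
        rarefied[taxon] += 1
    return rarefied

# Hypothetical samples with very different library sizes
samples = {"S1": [500, 300, 200, 5], "S2": [60, 30, 10, 0], "S3": [20, 5, 0, 0]}
rng = random.Random(42)
for name, counts in samples.items():
    print(name, rarefy(counts, depth=100, rng=rng))
```

At a depth of 100, S3 is lost entirely and S1 keeps only about a tenth of its reads, which is the trade-off between retained samples and retained depth described above.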

Troubleshooting Guides

Problem: Consistently Low Power in PERMANOVA-Based Power Analysis

| Potential Cause | Diagnostic Steps | Solution |
|---|---|---|
| High within-group variability | Calculate and inspect the distribution of pairwise distances within pilot groups. Compare to between-group distances. | Increase the sample size to better characterize and account for natural variation. If feasible, refine inclusion criteria to create more homogeneous groups. [6] |
| Small effect size | Calculate the omega-squared (ω²) from pilot data or literature. This provides a less-biased estimate of effect size than R². [6] | Re-evaluate the experimental design to see if the intervention can be intensified, or focus on detecting a larger, more biologically relevant effect. |
| Inappropriate distance metric | Check if the chosen metric (e.g., unweighted UniFrac) aligns with the expected biological effect (e.g., a shift in dominant species). | Test power calculations with alternative metrics (e.g., weighted UniFrac, Bray-Curtis) to see if another metric is more powerful for your specific hypothesis. [6] [23] |

Problem: Inconsistent Power Estimates Across Simulation Runs

| Potential Cause | Diagnostic Steps | Solution |

|---|---|---|

| Insufficient number of permutations or simulations | Observe the standard deviation of power estimates across multiple independent runs of your power analysis script. | Drastically increase the number of permutations in each PERMANOVA test and the number of simulated datasets for each sample size condition. This stabilizes the estimates. [6] |

| Pilot data is too small or unrepresentative | Check the sample size of your pilot data. Use resampling to see how stable the community parameters are. | Use tools that employ Dirichlet Multinomial Mixture (DMM) models to simulate more robust community data from small pilot sets, or seek a larger, comparable public dataset for parameter estimation. [23] |
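One way to judge whether run-to-run variation is simply Monte Carlo noise is to compare it with the binomial standard error of an empirical power estimate. The sketch below assumes each simulated dataset is an independent success/failure trial; the function name is hypothetical.

```python
import math

def power_standard_error(power, n_sim):
    """Binomial standard error of an empirical power estimate
    computed from n_sim independent simulated datasets."""
    return math.sqrt(power * (1.0 - power) / n_sim)

# How the uncertainty of a power estimate near 0.8 shrinks with more simulations.
for n_sim in (100, 1000, 10000):
    se = power_standard_error(0.8, n_sim)
    print(f"n_sim={n_sim:>6}  standard error = {se:.4f}")
```

If the spread observed across independent runs is close to this value, increasing the number of simulations is the appropriate remedy.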

Experimental Protocols for Power Analysis

Protocol 1: Simulation-Based Power Analysis for PERMANOVA

This protocol outlines a method for estimating power for a microbiome study that will be analyzed using pairwise distances and PERMANOVA [6].

1. Define Population Parameters from Pilot Data:

- Input: A distance matrix derived from pilot 16S rRNA sequencing data.

- Calculation: For each group in your pilot data, calculate the within-group sum of squares (SSW) and total sum of squares (SST). Estimate the effect size using the adjusted coefficient of determination, omega-squared (ω²) [6]:

ω² = [SSA - (a - 1) * (SSW / (N - a))] / [SST + (SSW / (N - a))]

- Where a is the number of groups, N is the total sample size, and SSA (between-group sum of squares) is SST - SSW.

2. Simulate Distance Matrices:

- Use a framework that can simulate distance matrices satisfying the triangle inequality and incorporating group-level effects.

- Model within-group pairwise distances based on the parameters estimated in Step 1.

- Introduce a simulated effect between groups based on your target ω² value.

3. Estimate Power via Simulation:

- For a range of sample sizes (n), repeatedly generate simulated distance matrices.

- For each simulated matrix, run PERMANOVA and record the p-value.

- Calculate empirical power for that sample size n as: Power = (Number of PERMANOVA tests with p-value < α) / (Total number of simulations)
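The ω² calculation in Step 1 can be sketched directly from a distance matrix. This is a minimal illustration, assuming the usual PERMANOVA definitions of the sums of squares (squared pairwise distances divided by the number of samples, overall for SST and per group for SSW); the function name and the toy data are assumptions, not code from the micropower package.

```python
def omega_squared(dist, labels):
    """Estimate omega-squared from a square distance matrix and group labels."""
    n = len(labels)
    groups = {}
    for idx, g in enumerate(labels):
        groups.setdefault(g, []).append(idx)
    a = len(groups)
    # Total sum of squares: squared distances over all pairs, divided by N.
    sst = sum(dist[i][j] ** 2 for i in range(n) for j in range(i + 1, n)) / n
    # Within-group sum of squares: same quantity per group, then summed.
    ssw = 0.0
    for members in groups.values():
        pair_sum = sum(dist[i][j] ** 2
                       for k, i in enumerate(members)
                       for j in members[k + 1:])
        ssw += pair_sum / len(members)
    ssa = sst - ssw              # between-group sum of squares
    msw = ssw / (n - a)          # within-group mean square
    return (ssa - (a - 1) * msw) / (sst + msw)

# Toy example: four samples on a line, two well-separated groups.
points = [0.0, 1.0, 10.0, 11.0]
labels = ["A", "A", "B", "B"]
dist = [[abs(x - y) for y in points] for x in points]
omega2 = omega_squared(dist, labels)
print(f"omega-squared = {omega2:.4f}")
```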

Protocol 2: Power Analysis Using Differential Abundance Taxa

This protocol uses a tool like MPrESS to focus power analysis on the most discriminatory taxa, which can increase power for detecting specific effects [23].

1. Identify Discriminatory Taxa:

- Input: An OTU/ASV table and associated metadata from pilot data.

- Analysis: Use a differential abundance tool like DESeq2 on the pilot data to identify taxa that are significantly different between the groups of interest. Apply a False Discovery Rate (FDR) correction.

2. Perform Power Calculation on Filtered Data:

- Trim the OTU table to include only the significant discriminatory taxa identified in Step 1.

- Use a power calculation tool that can either:

- Subsample from the filtered pilot data via random sampling without replacement.

- Simulate new OTU tables based on the Dirichlet Multinomial Mixture (DMM) model of the filtered data, especially if the required sample size is larger than the available pilot data [23].

3. Execute PERMANOVA and Compute Power:

- For each subsampled or simulated OTU table, compute the desired beta-diversity distance metric.

- Run PERMANOVA on the resulting distance matrix.

- Calculate power as the proportion of significant tests, as described in Protocol 1.
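For readers unfamiliar with the FDR correction mentioned in Step 1, the Benjamini-Hochberg adjustment can be sketched in a few lines. DESeq2 reports adjusted p-values itself, so this block is purely illustrative; the taxon names and p-values are hypothetical.

```python
def benjamini_hochberg(pvalues):
    """Return Benjamini-Hochberg adjusted p-values in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

# Hypothetical raw p-values for four taxa from a pilot comparison.
raw = {"taxon_a": 0.005, "taxon_b": 0.01, "taxon_c": 0.03, "taxon_d": 0.6}
qvalues = dict(zip(raw, benjamini_hochberg(list(raw.values()))))
selected = [taxon for taxon, q in qvalues.items() if q < 0.05]
print("Discriminatory taxa kept for the power calculation:", selected)
```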

Workflow and Relationship Diagrams

Power Analysis Decision Workflow

Microbial Community Variability Impact on Power

Research Reagent Solutions

| Tool/Package Name | Primary Function | Brief Explanation |

|---|---|---|

| micropower [6] | Power analysis for PERMANOVA | An R package that simulates distance matrices to estimate power for microbiome studies analyzed with PERMANOVA. |

| MPrESS [23] | Power and sample size estimation | An R package that uses Dirichlet Multinomial Mixture (DMM) models and subsampling to calculate power, with the option to focus on discriminatory taxa. |

| QIIME 2 [24] | Microbiome data processing and analysis | A powerful, extensible platform for performing end-to-end microbiome analysis, including diversity calculations and rarefaction. |

| MicrobiomeAnalyst [25] | Comprehensive statistical analysis | A user-friendly web-based platform for statistical, visual, and functional analysis of microbiome data. |

| PERMANOVA | Hypothesis testing | A non-parametric statistical test used to compare groups of microbial communities based on a distance matrix. [6] |

| DESeq2 [23] | Differential abundance analysis | An R package used to identify specific taxa that are significantly different between groups, which can be used to focus power calculations. |

| Dirichlet Multinomial Mixture (DMM) model [23] | Data simulation | A statistical model used to simulate new, realistic OTU tables based on the structure of existing pilot data for power analysis. |

Frequently Asked Questions

Why is power analysis uniquely important for microbiome studies? Microbiome data have intrinsic features that do not apply to classic sample size calculations, such as high dimensionality and compositionality. Proper power analysis is therefore crucial to obtain valid, generalizable conclusions and is a key factor in improving the quality and reliability of human or animal microbiome studies [26].

What are the common pitfalls in microbiome study design that affect power? Two major pitfalls are incorrect effect size estimation from pilot data and ignoring measurement error. Different alpha and beta diversity metrics can lead to vastly different sample size calculations. Furthermore, sample processing errors, like mislabeled samples, are frequent and can invalidate results if not detected [27] [1].

My sample size is fixed. What can I do to maximize my study's power? With a fixed sample size, you can maximize power by:

- Choosing sensitive metrics: Beta diversity metrics, particularly Bray-Curtis dissimilarity, are often more sensitive for detecting differences between groups than alpha diversity metrics [1].

- Optimizing protocols: Use experimental designs and statistical models that can estimate and correct for species-specific detection effects and cross-sample contamination, which reduces noise and increases true effect sizes [28].

- Pre-registering analysis plans: Decide on your primary outcome metrics (e.g., Bray-Curtis for beta diversity) before the experiment begins to avoid p-value hacking and ensure your limited power is used effectively [1].

How can I check for sample processing errors in my data? For studies with host-associated microbiomes, you can use host DNA profiled via metagenomic sequencing. By comparing host Single Nucleotide Polymorphisms (SNPs) inferred from the microbiome data to independently obtained genotypes (e.g., from microarray data), you can identify sample mix-ups or mislabeling [27].

Troubleshooting Guides

Problem: Inconsistent or conflicting results when using different diversity metrics.

| Step | Action & Rationale |

|---|---|

| 1 | Identify your primary hypothesis. Are you comparing within-sample diversity (alpha diversity) or between-sample community composition (beta diversity)? Your choice dictates the metric. |

| 2 | Select multiple metrics prospectively. Do not try all metrics after seeing the results. Pre-specify a small set. For alpha diversity, include metrics for richness (e.g., Observed ASVs) and evenness (e.g., Shannon index). For beta diversity, include both abundance-based (e.g., Bray-Curtis) and phylogeny-aware (e.g., UniFrac) metrics [1]. |

| 3 | Perform power calculations for each metric. Use pilot data to calculate the effect size and required sample size for each pre-selected metric. This reveals which metrics are most sensitive for your specific study [1]. |

| 4 | Report all pre-specified metrics. In your manuscript, transparently report the results for all metrics you planned to use, not just the ones that gave significant results. This prevents publication bias [1]. |

Problem: Low statistical power due to measurement error and technical noise.

| Step | Action & Rationale |

|---|---|

| 1 | Acknowledge the error. Intuitive estimators of microbial relative abundances are known to be biased. Technical variations from DNA extraction, sequencing depth, and species-specific detection effects introduce significant noise [28]. |

| 2 | Incorporate error into your model. Use statistical methods designed to model measurement error. These methods can estimate true relative abundances, species detectabilities, and cross-sample contamination simultaneously, leading to more accurate effect size estimates [28]. |

| 3 | Leverage specific experimental designs. Implement study designs that provide the data needed for these models, such as including technical replicates, mock communities (samples with known compositions), and samples processed in batches [28]. |

| 4 | Re-calculate power with corrected estimates. Use the effect sizes and variance estimates from the measurement error model to perform a more realistic power analysis before proceeding with a full-scale study [28]. |

Quantitative Data for Sample Size Planning

Table 1: Sensitivity of Different Beta Diversity Metrics for Sample Size Calculation

This table summarizes findings from a power analysis comparison, showing that some metrics are more sensitive than others, directly impacting the required sample size [1].

| Beta Diversity Metric | Basis of Calculation | Relative Sensitivity for Detecting Group Differences | Impact on Sample Size |

|---|---|---|---|

| Bray-Curtis | Abundance-based | High (Most sensitive) | Lower |

| Weighted UniFrac | Abundance-based, Phylogeny-aware | Medium | Medium |

| Jaccard | Presence/Absence | Medium to Low | Higher |

| Unweighted UniFrac | Presence/Absence, Phylogeny-aware | Low | Higher |

Table 2: Essential Research Reagent Solutions for Microbiome Experiments

| Item | Function in Experiment | Key Considerations |

|---|---|---|

| DNA Extraction Kit (e.g., MO BIO Powersoil) | Extracts microbial DNA from samples. | Essential for consistent results; often includes a bead-beating step to lyse robust microorganisms [29]. |

| Sample Collection Swabs (e.g., BBL CultureSwab) | Non-invasive collection of microbial samples from skin, oral, or vaginal surfaces. | Provides a standardized way to collect and transport samples [29]. |

| Stool Collection Kit with Stabilizing Buffer | Enables room-temperature storage and transport of stool samples for at-home collection. | Critical for preserving sample integrity when immediate freezing is not possible [30] [29]. |

| PCR Reagents (e.g., Phusion polymerase) | Amplifies target marker genes (e.g., 16S V4 region) for sequencing. | High-fidelity polymerase is recommended to reduce amplification errors [29]. |

| Sequencing Platform (e.g., Illumina MiSeq) | Generates the raw sequence data used for microbiome analysis. | The choice of primers (e.g., 16S V4 vs. V1-V3) and sequencing depth (reads/sample) must align with the study goals [29]. |

Experimental Protocol: Sample Identity Verification Using Host DNA

Purpose: To identify sample processing errors, such as mislabeling or mix-ups, in host-associated microbiome studies by leveraging host genetic profiles from metagenomic data [27].

Methodology:

- Data Generation: Perform whole-metagenome sequencing on the microbiome samples. Independently, obtain high-confidence genotype data for all host subjects (e.g., via microarray).

- Host SNP Extraction: Bioinformatically infer host Single Nucleotide Polymorphisms (SNPs) from the metagenomic sequencing data.

- Sample-to-Donor Matching: Statistically compare the metagenomics-inferred host SNPs to the independent genotype data for each subject. A high concordance indicates a correct sample-to-donor match.

- Cross-Sample Comparison: Compare the metagenomics-inferred host SNPs between all samples to identify any that genetically match each other, which would indicate multiple samples from the same donor or a sample duplicate.

- Metadata Integration: Combine the genetic matching results with experimental metadata (e.g., sample collection date, processing batch) to confirm the identity of the error.
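The sample-to-donor matching step can be illustrated with a simple genotype concordance score. This is a minimal sketch, not the statistical procedure of the cited method [27]: the SNP identifiers, genotypes, and donor names are hypothetical, and a real analysis would also need to handle sequencing error and low host coverage.

```python
def concordance(inferred, reference):
    """Fraction of shared SNP sites where two genotype calls agree."""
    shared = [site for site in inferred if site in reference]
    if not shared:
        return None
    matches = sum(inferred[s] == reference[s] for s in shared)
    return matches / len(shared)

def best_match(inferred, references):
    """Return the donor with the highest concordance, plus all scores."""
    scores = {donor: concordance(inferred, geno)
              for donor, geno in references.items()}
    scored = {d: s for d, s in scores.items() if s is not None}
    return max(scored, key=scored.get), scores

# Hypothetical independent genotypes (e.g., from a microarray).
references = {
    "donor_1": {"rs1": "AA", "rs2": "AG", "rs3": "GG", "rs4": "CT"},
    "donor_2": {"rs1": "AG", "rs2": "GG", "rs3": "GG", "rs4": "CC"},
}
# Host SNPs inferred from a metagenome that is labeled as donor_1.
labeled_as = "donor_1"
inferred = {"rs1": "AG", "rs2": "GG", "rs4": "CC"}

best, scores = best_match(inferred, references)
if best != labeled_as:
    print(f"Possible mix-up: labeled {labeled_as}, best genetic match {best}")
```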

Sample Identity Verification Workflow

Conceptual Framework: Microbiome in Statistical Models

The role the microbiome plays in your hypothesis dictates the approach to power analysis and model design. The following diagram illustrates the three primary conceptual models.

Microbiome Roles in Statistical Models

Choosing Your Tools: Methodologies and Software for Power Calculation

Power Analysis for Alpha Diversity Metrics (e.g., Shannon, Faith PD, Chao1)

Frequently Asked Questions (FAQs) and Troubleshooting Guides

FAQ 1: Why is power analysis crucial for microbiome studies, and what are the consequences of an underpowered study?

Answer: A priori power and sample size calculations are essential to appropriately test hypotheses and obtain valid, generalizable conclusions from clinical studies. In microbiome research, underpowered studies are a significant cause of conflicting and irreproducible results [26] [1].

An underpowered study has a low probability of detecting a true effect, leading to a high rate of false negatives (Type II errors). This wastes resources and can stall scientific progress by failing to identify genuine biological signals. Performing power analysis before conducting experiments ensures that your study is designed with a sample size sufficient to reliably detect the effect you are investigating [1].

FAQ 2: Which alpha diversity metric should I use for my power analysis?

Answer: The choice of alpha diversity metric can significantly impact your power analysis and sample size requirements. There is no single "best" metric, as each captures different aspects of the microbial community. It is recommended to use a comprehensive set of metrics that represent different categories to ensure a robust analysis [31] [1].

The table below groups common alpha diversity metrics by category and describes what they measure and their key characteristics.

| Category | Example Metrics | What It Measures | Key Considerations for Power Analysis |

|---|---|---|---|

| Richness | Observed ASVs, Chao1, ACE [31] | Number of distinct taxa in a sample [1]. | Sensitive to rare taxa. Chao1 estimates true richness by accounting for unobserved species using singletons and doubletons [31] [1]. |

| Phylogenetic Diversity | Faith's PD (Faith PD) [31] [1] | Sum of branch lengths on a phylogenetic tree for all observed taxa in a sample [1]. | Incorporates evolutionary relationships. Its value is strongly influenced by the number of observed features (ASVs) in a sample [31]. |

| Information / Evenness | Shannon Index [31] [1] | Combines richness and the evenness of species abundances [1]. Higher evenness increases diversity. | A common, general-purpose metric. Sensitive to changes in the abundance distribution of common and rare taxa [31]. |

| Dominance | Simpson, Berger-Parker [31] | Degree to which the community is dominated by one or a few taxa [31]. | Berger-Parker is easily interpretable as the proportional abundance of the most abundant taxon [31]. |

Troubleshooting Tip: Be aware that the structure of your data influences which alpha diversity metrics are most sensitive to differences between groups. There is no one-size-fits-all answer, so testing multiple metrics from different categories in your power analysis is the safest approach [1].
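To make the metric categories in the table concrete, the sketch below computes one metric per non-phylogenetic category from a single sample's count vector. The function name and the counts are illustrative; Simpson is computed in its 1 - Σp² (Gini-Simpson) form, and Faith's PD is omitted because it requires a phylogenetic tree.

```python
import math

def alpha_metrics(counts):
    """Compute simple alpha diversity metrics for one sample's counts."""
    present = [c for c in counts if c > 0]
    total = sum(present)
    props = [c / total for c in present]
    return {
        "observed": len(present),                              # richness
        "shannon": -sum(p * math.log(p) for p in props),       # information
        "gini_simpson": 1.0 - sum(p * p for p in props),       # 1 - dominance
        "berger_parker": max(props),                           # dominance
    }

# Hypothetical sample dominated by one taxon.
print(alpha_metrics([120, 30, 25, 5, 0, 0]))
```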

FAQ 3: I have a limited budget for my pilot study. What is the minimum information needed to perform a power analysis for alpha diversity?

Answer: To perform a power analysis for alpha diversity metrics, you need to estimate the effect size you expect to see between your study groups. This requires pilot data or estimates from previously published literature for the following parameters for each group:

- Mean alpha diversity value (e.g., mean Shannon index).

- Variance or standard deviation of the alpha diversity values.

With these estimates, you can use standard power analysis formulas or software to calculate the required sample size. For a two-group comparison (e.g., t-test), the effect size is often expressed as Cohen's d, which is the difference in means divided by the pooled standard deviation [1].
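As a minimal sketch of this calculation, the block below computes Cohen's d from two groups of pilot values and converts it to a per-group sample size using the normal approximation for a two-sided, two-sample comparison. The approximation gives slightly smaller numbers than exact t-based software, and the Shannon values shown are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance

def cohens_d(group1, group2):
    """Difference in means divided by the pooled standard deviation."""
    n1, n2 = len(group1), len(group2)
    pooled_var = ((n1 - 1) * variance(group1) +
                  (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    return (mean(group2) - mean(group1)) / math.sqrt(pooled_var)

def n_per_group(d, alpha=0.05, power=0.80):
    """Normal-approximation sample size per group for a two-sided test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)

# Hypothetical pilot Shannon index values for two groups.
control = [3.1, 3.4, 2.9, 3.6, 3.2]
treated = [3.5, 3.9, 3.3, 3.8, 3.6]
d = cohens_d(control, treated)
print(f"Cohen's d = {d:.2f}, samples per group = {n_per_group(d)}")
```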

FAQ 4: How do I perform a power analysis for alpha diversity metrics in practice?

Answer: The following workflow outlines the key steps for conducting a power analysis for alpha diversity metrics, from data collection to sample size determination.

Power Analysis Workflow

Step 1: Obtain or Generate Pilot Data. Use data from a small-scale pilot study, reanalyze data from a public repository, or extract summary statistics from published literature. When reusing public data, be mindful of equitable data practices. A proposed Data Reuse Information (DRI) tag with an ORCID indicates the data creator's preference for contact before reuse [32].

Step 2: Calculate Alpha Diversity Metrics. Using a bioinformatics pipeline (e.g., in R), calculate a comprehensive set of metrics from different categories for all samples in your pilot data, as detailed in the table above [33] [31].

Step 3: Estimate Effect Size. For each alpha diversity metric of interest, calculate the difference in means and the pooled standard deviation between your pilot groups to estimate the effect size [1].

Step 4: Run Power Analysis. Input the estimated effect size, your desired statistical power (typically 0.8 or 80%), and significance level (typically 0.05) into a power analysis tool to calculate the required sample size per group [1].

Step 5: Determine Final Sample Size. The power analysis will output a required sample size per group. Use the largest sample size requirement from among the key alpha diversity metrics you plan to report.

FAQ 5: My power analysis suggests I need an unreasonably large sample size. What are my options?

Answer: A large required sample size often indicates a small effect size. Consider these options:

- Re-evaluate Your Effect Size: Is the effect size from your pilot data or literature clinically or biologically relevant? A very small effect may not be meaningful.

- Increase the Effect Size, If Possible: Refine your intervention or select more homogeneous subject groups to reduce within-group variance.

- Use a More Sensitive Metric: Beta diversity metrics (e.g., Bray-Curtis) are often more powerful for detecting community-level differences than alpha diversity metrics. Consider making beta diversity a primary outcome [1].

- Report Transparently: If resources are fixed, calculate the statistical power you will have for a range of effect sizes and report this in your manuscript to provide context for your findings [1].
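For the transparent-reporting option, the power available at a fixed sample size can be tabulated across a range of effect sizes. The sketch below uses the normal approximation for a two-sided, two-sample comparison and ignores the negligible contribution of the opposite tail; the function name and the fixed sample size are assumptions.

```python
import math
from statistics import NormalDist

def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-sample test for effect size d."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    return z.cdf(abs(d) * math.sqrt(n_per_group / 2) - z_alpha)

# Power achievable with a fixed budget of 30 samples per group.
fixed_n = 30
for d in (0.2, 0.5, 0.8, 1.0):
    print(f"d = {d:.1f}: power = {approx_power(d, fixed_n):.2f}")
```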

FAQ 6: How can I avoid "p-hacking" when comparing multiple alpha diversity metrics?

Answer: To protect against p-hacking (trying many tests until you find a significant result), pre-specify your analysis plan.

- Define Primary and Secondary Outcomes: Before conducting the experiment, designate one or two alpha diversity metrics as your primary outcomes for hypothesis testing. All other metrics should be clearly labeled as exploratory or secondary.

- Publish a Statistical Analysis Plan: Describe the primary outcomes, the statistical tests you will use, and how you will handle multiple comparisons. Adhering to a pre-registered plan ensures the integrity of your results [1].

| Item | Function / Application |

|---|---|

| R Statistical Software | Open-source environment for statistical computing and graphics. Essential for calculating diversity metrics, performing power analysis, and creating visualizations [18]. |

| Power Analysis Packages (R) | Specific R packages (e.g., pwr) are designed to calculate sample size and power for various statistical tests, including t-tests and ANOVA, which are used for alpha diversity comparisons. |

| Bioinformatics Pipelines | Tools like the R microeco package or QIIME 2 provide standardized workflows for processing raw sequencing data, calculating diversity metrics, and differential abundance testing [33] [34]. |

| Pilot Data | Data from a small-scale preliminary study or a carefully selected public dataset. Serves as the empirical foundation for estimating the effect size required for power analysis. |

| Digital Object Identifiers (DOIs) for Data | Making datasets citable with DOIs provides a mechanism to credit data creators, facilitating equitable data reuse and collaboration [32]. |

Power Analysis for Beta Diversity and PERMANOVA using Pairwise Distances (e.g., UniFrac, Bray-Curtis)

Frequently Asked Questions (FAQs) and Troubleshooting Guides

What is the relationship between beta-diversity, pairwise distances, and PERMANOVA in microbiome studies?

In microbiome studies, beta-diversity describes the variation in microbial community composition between samples. This complex relationship is often simplified into a statistical workflow for analysis.

Workflow Description: Analysis begins with Microbiome Samples (e.g., 16S rRNA sequence data). A Pairwise Distance Matrix is computed using metrics like Bray-Curtis or UniFrac to quantify differences between every sample pair [35] [36]. PERMANOVA uses this matrix to test if group compositions differ significantly by partitioning variance into between-group (SSA) and within-group (SSW) sums of squares [6]. The test result's Statistical Power—the probability of detecting a true effect—is influenced by effect size, sample size, within-group variation, and significance level (α) [1]. Understanding this relationship is crucial for planning studies with adequate sample sizes.
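The first stage of this workflow, turning a count table into a pairwise distance matrix, can be sketched for Bray-Curtis as follows. The function names and the three-sample table are illustrative; UniFrac is not shown because it additionally requires a phylogenetic tree.

```python
def bray_curtis(u, v):
    """Bray-Curtis dissimilarity between two abundance vectors."""
    num = sum(abs(a - b) for a, b in zip(u, v))
    den = sum(a + b for a, b in zip(u, v))
    return num / den if den else 0.0

def distance_matrix(table):
    """Pairwise Bray-Curtis matrix for a samples-by-taxa table."""
    return [[bray_curtis(r, s) for s in table] for r in table]

# Hypothetical count table: three samples, three taxa.
table = [[6, 7, 4], [10, 0, 6], [6, 7, 4]]
dm = distance_matrix(table)
for row in dm:
    print([round(x, 3) for x in row])
```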

How do I choose the right beta-diversity metric (Bray-Curtis vs. UniFrac) for my power analysis?

The choice of beta-diversity metric significantly impacts power and sample size requirements, as different metrics are sensitive to different aspects of community difference [1].

| Metric | Basis of Calculation | Sensitivity in Power Analysis | Recommended Use Case |

|---|---|---|---|

| Bray-Curtis | Abundance-based, without phylogeny | Often the most sensitive for detecting differences; can lead to lower required sample sizes [1]. | Detecting changes in abundant taxa, regardless of phylogenetic relationships. |

| Unweighted UniFrac | Presence/Absence, with phylogeny | Sensitive to changes in rare, phylogenetically distinct lineages [37]. | Detecting changes in community membership (which taxa are present) when evolutionary relationships are important. |

| Weighted UniFrac | Abundance-based, with phylogeny | Sensitive to changes in abundant, phylogenetically distinct lineages [37]. | Detecting changes in the relative abundance of taxa when evolutionary relationships are important. |

Troubleshooting Guide: If you find your PERMANOVA results are not significant despite a suspected effect:

- Check Metric Consistency: Ensure the chosen metric aligns with your biological hypothesis. For example, if you expect changes in abundant taxa, Bray-Curtis or Weighted UniFrac are more appropriate.

- Avoid P-hacking: Do not run multiple metrics and only report the one that gives a significant p-value. To prevent this, pre-specify your primary beta-diversity metric in your statistical analysis plan [1].

My PERMANOVA result is significant (low p-value), but I don't see clear clustering in my PCoA plot. Why?

This is a common occurrence and does not necessarily invalidate your PERMANOVA result.

- Cause: PERMANOVA operates on the full distance matrix, considering all pairwise differences. In contrast, a Principal Coordinates Analysis (PCoA) plot is a low-dimensional visualization (typically 2-3 axes) that may not capture all the variance present in the original data [38]. A significant PERMANOVA with weak visual clustering can indicate a real but subtle effect spread across multiple dimensions.

- Solution:

- Check Explained Variance: Look at the percentage of variance explained by the PCoA axes you are visualizing. It is often relatively low in microbiome studies; the remaining variance explained by higher axes may contain the group differences detected by PERMANOVA [38].

- Conduct Pairwise Tests: Use the --p-pairwise option in QIIME 2 or similar functions in R to perform PERMANOVA between specific pairs of groups. Sometimes, a significant overall test is driven by strong differences between just one or two pairs of groups, which might be more visible in a PCoA plot that includes only those groups [38].

- Consider Other Factors: The absence of clear clustering could also be due to high within-group variation or a small effect size.

What are the practical methods for performing power analysis for PERMANOVA?

Performing power analysis for PERMANOVA is more complex than for univariate tests because it depends on the entire distribution of pairwise distances. Below are two primary methodological approaches.

Experimental Protocol 1: Simulation-Based Power Analysis Using micropower (in R)

This method, outlined by Kelly et al. (2015), involves simulating distance matrices that reflect pre-specified within-group variation and effect sizes [6].

Step-by-Step Workflow:

- Estimate Population Parameters: Use pilot data or data from public repositories (e.g., American Gut Project) to estimate the distribution of within-group pairwise distances for your microbiome habitat and beta-diversity metric of interest.

- Simulate Distance Matrices: Use the simulate_dissimilarities function (or equivalent) to generate multiple distance matrices. The function allows you to:

- Model within-group distances based on your estimated parameters.

- Incorporate a pre-specified effect size (ω²) by perturbing the distances to create between-group differences [6].

- Analyze Simulated Matrices: Run PERMANOVA on each simulated distance matrix.

- Calculate Empirical Power: The power is calculated as the proportion of these simulated tests where the p-value is less than your significance level (e.g., α = 0.05).
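The logic of the workflow above can be sketched end to end in plain Python. This is not the micropower implementation: as a stand-in for its distance-matrix simulation, the sketch draws samples as Gaussian points in Euclidean space and shifts one group along a single axis, then runs a basic two-group PERMANOVA. All function names, the number of dimensions, and the shift are assumptions chosen for illustration.

```python
import random

def ss_within(d2, labels):
    """Within-group sum of squares from a squared-distance matrix."""
    groups = {}
    for idx, g in enumerate(labels):
        groups.setdefault(g, []).append(idx)
    ssw = 0.0
    for members in groups.values():
        pair_sum = sum(d2[i][j] for k, i in enumerate(members)
                       for j in members[k + 1:])
        ssw += pair_sum / len(members)
    return ssw

def permanova_p(d2, labels, n_perm, rng):
    """Permutation p-value for the PERMANOVA pseudo-F statistic."""
    n, a = len(labels), len(set(labels))
    sst = sum(d2[i][j] for i in range(n) for j in range(i + 1, n)) / n

    def pseudo_f(lab):
        ssw = ss_within(d2, lab)
        return ((sst - ssw) / (a - 1)) / (ssw / (n - a))

    f_obs = pseudo_f(labels)
    perm = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(perm)
        if pseudo_f(perm) >= f_obs:
            hits += 1
    return (hits + 1) / (n_perm + 1)

def simulate_power(n_per_group, shift, n_sim=40, n_perm=99,
                   alpha=0.05, dims=5, seed=1):
    """Proportion of simulated two-group datasets with p < alpha."""
    rng = random.Random(seed)
    labels = [0] * n_per_group + [1] * n_per_group
    significant = 0
    for _ in range(n_sim):
        # Group 1 is shifted along the first axis only (assumed effect).
        pts = [[rng.gauss(shift if (g == 1 and k == 0) else 0.0, 1.0)
                for k in range(dims)] for g in labels]
        d2 = [[sum((x - y) ** 2 for x, y in zip(p, q)) for q in pts]
              for p in pts]
        if permanova_p(d2, labels, n_perm, rng) < alpha:
            significant += 1
    return significant / n_sim

for n in (5, 10, 15):
    print(f"n per group = {n}: empirical power = {simulate_power(n, 1.0):.2f}")
```

The small values of `n_sim` and `n_perm` keep the sketch fast; a real power analysis would use far more of both to stabilize the estimates.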

Experimental Protocol 2: Effect Size Extraction and Power Calculation Using Evident

The Evident tool streamlines power analysis by calculating effect sizes directly from large existing datasets, which can then be used for sample size estimation [10] [7].

Step-by-Step Workflow:

- Installation: Install Evident as a standalone Python package (pip install evident) or as a QIIME 2 plugin [7].

- Calculate Effect Sizes: For a metadata variable of interest (e.g., "disease state"), Evident computes the effect size on a diversity measure.

- Perform Power Analysis: Using the calculated effect size, Evident can generate a power curve showing the relationship between sample size and statistical power for different significance levels.

Example QIIME 2 Command for Univariate Power Analysis with Evident:

This command analyzes how sample size affects the power to detect differences in Faith's PD between groups defined in the "disease_state" column [7].

| Tool / Resource | Function / Description | Relevance to Power Analysis |

|---|---|---|

| QIIME 2 (q2-diversity) | A plugin that performs core diversity analyses, including beta-diversity and PERMANOVA (via beta-group-significance). | Generates the essential beta-diversity distance matrices and initial PERMANOVA results from sequence data [39]. |

| R Statistical Software | A programming environment for statistical computing. | The primary platform for running advanced power analysis packages like micropower and for custom simulation scripts [6]. |

| micropower R Package | An R package designed specifically for simulation-based power estimation for PERMANOVA in microbiome studies [6]. | Allows researchers to model within- and between-group distance distributions to estimate power or necessary sample size. |

| Evident Python Package/QIIME 2 Plugin | A tool for calculating effect sizes from existing large datasets and performing subsequent power calculations [10] [7]. | Enables data-driven power analysis by mining effect sizes from databases like the American Gut Project (AGP) and TEDDY, or from a researcher's own pilot data. |

| Large Public Databases (e.g., AGP, HMP) | Large, publicly available microbiome datasets with associated metadata [35] [10]. | Serve as a source of realistic within- and between-group distance distributions for parameterizing power simulations when pilot data is unavailable. |

The Dirichlet-Multinomial (DMN) model is a fundamental parametric approach for analyzing overdispersed multivariate categorical count data, frequently encountered in microbiome research [40]. It extends the standard multinomial distribution by allowing probability vectors to vary according to a Dirichlet distribution, thereby accommodating the extra variation (overdispersion) commonly observed in datasets such as 16S rRNA sequencing counts [41] [42]. This model is crucial for accurate differential abundance analysis and power calculations, as it provides a more realistic fit for the inherent variability of microbial community data [2].

Key Concepts and Definitions

- Overdispersion: A phenomenon where the variance in the data is larger than what a standard multinomial model would predict. This is a hallmark of microbiome count data [42].

- Concentration Parameter (conc or α₀): This parameter, often denoted as α₀ = Σαₖ, controls the degree of overdispersion. A smaller α₀ indicates higher overdispersion, while as α₀ approaches infinity, the DMN model reduces to a standard multinomial distribution [41] [40].

- Expected Fraction (frac): A vector representing the mean expected proportions for each taxon across all samples [41].
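The effect of the concentration parameter can be seen in the variance of a single taxon's count. Under the DMN model this variance equals the multinomial variance multiplied by the factor (n + α₀) / (1 + α₀), where n is the total count per sample. The sketch below evaluates this standard result; the function name and the numbers are illustrative.

```python
def dmn_variance(total_count, frac_k, conc):
    """Variance of one taxon's count under a Dirichlet-Multinomial model."""
    multinomial_var = total_count * frac_k * (1 - frac_k)
    # Overdispersion factor: tends to 1 as conc grows, to n as conc -> 0.
    inflation = (total_count + conc) / (1 + conc)
    return multinomial_var * inflation

# A taxon with expected fraction 0.5 in samples of 100 reads.
for conc in (1, 10, 100, 1e6):
    print(f"conc = {conc:>9}: variance = {dmn_variance(100, 0.5, conc):.1f}")
```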

Troubleshooting Common Issues

FAQ: My model fitting is slow or fails to converge with my large microbiome dataset. What can I do?

High-dimensional microbiome data (many taxa) can be computationally challenging.

- Solution: Filter out low-abundance or low-prevalence taxa before analysis to reduce dimensionality. For instance, you can keep only the core taxa that are prevalent at 0.1% relative abundance in 50% of the samples [43].

- Solution: Utilize optimized computational algorithms for calculating the DMN log-likelihood, as conventional methods can be unstable and slow, especially with high-count data from deep sequencing [42].
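The prevalence filter described in the first solution can be sketched as follows, assuming a samples-by-taxa count table stored as a list of rows. The function name, thresholds as defaults, and the toy table are illustrative assumptions.

```python
def core_taxa(table, min_rel_abund=0.001, min_prevalence=0.5):
    """Return indices of taxa reaching min_rel_abund in at least
    min_prevalence of the samples (rows = samples, columns = taxa)."""
    n_samples = len(table)
    n_taxa = len(table[0])
    keep = []
    for t in range(n_taxa):
        detected = sum(
            1 for row in table
            if sum(row) > 0 and row[t] / sum(row) >= min_rel_abund
        )
        if detected / n_samples >= min_prevalence:
            keep.append(t)
    return keep

# Hypothetical table: four samples, four taxa, 1000 reads each.
table = [
    [900, 99, 1, 0],
    [800, 200, 0, 0],
    [950, 49, 0, 1],
    [700, 300, 0, 0],
]
keep = core_taxa(table)
filtered = [[row[t] for t in keep] for row in table]
print("Taxa kept:", keep)
```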

FAQ: How do I know if the Dirichlet-Multinomial model is a better fit for my data than a simple Multinomial model?

A model comparison can be performed using information criteria.

- Solution: Fit both a Multinomial and a DMN model to your data. Compare them using information criteria such as AIC (Akaike Information Criterion) or BIC (Bayesian Information Criterion). The model with the lower value is generally preferred. The DMN model will typically show a better fit (lower AIC/BIC) for overdispersed data [43].
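Once both models have been fitted, the comparison itself is simple arithmetic. The sketch below assumes a table with K taxa, so the Multinomial model has K - 1 free parameters and the DMN model has K; the log-likelihood values are hypothetical placeholders, not results from any dataset.

```python
import math

def aic(log_lik, n_params):
    """Akaike Information Criterion."""
    return 2 * n_params - 2 * log_lik

def bic(log_lik, n_params, n_obs):
    """Bayesian Information Criterion."""
    return n_params * math.log(n_obs) - 2 * log_lik

# Hypothetical fits for K = 10 taxa across 50 samples.
n_obs = 50
fits = {
    "Multinomial": {"log_lik": -5200.0, "n_params": 9},
    "DMN": {"log_lik": -4100.0, "n_params": 10},
}
for name, fit in fits.items():
    print(name,
          "AIC =", aic(fit["log_lik"], fit["n_params"]),
          "BIC =", round(bic(fit["log_lik"], fit["n_params"], n_obs), 1))
```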

FAQ: I've heard that power is a major concern in microbiome studies. How does the choice of model affect my power analysis?

Using an inappropriate model that does not account for overdispersion can severely inflate Type I errors and lead to underpowered studies.

- Solution: When calculating sample size and power, ensure your method accounts for overdispersion. Beta diversity metrics like Bray-Curtis, used in conjunction with models that handle overdispersion (like DMN), are often more sensitive for detecting differences between groups than alpha diversity metrics. Always report the diversity metrics used in your power calculations [1].

FAQ: The numerical computation of the DMN likelihood is unstable, especially when the overdispersion parameter is near zero. How is this resolved?

This is a known issue when calculating the log-likelihood using standard functions, which can become unstable as the overdispersion parameter ψ approaches zero [42].

- Solution: Implement recently developed algorithms that use a novel parameterization of the log-likelihood function. These methods, often based on truncated series of Bernoulli polynomials, ensure a smooth transition from the DMN to the Multinomial distribution and provide accurate results without long runtimes [42].

Essential Experimental Protocols

Protocol 1: Fitting a Dirichlet-Multinomial Model in R

This protocol outlines the steps to fit a DMN model to a microbiome count table and determine the optimal number of components for community typing [43].

- Step 1 - Data Preparation: Format your OTU or ASV count table into a samples-by-taxa matrix.

- Step 2 - Model Fitting: Use the DirichletMultinomial package in R to fit multiple DMN models with different numbers of components (k).

- Step 3 - Model Selection: Calculate the model fit criteria (e.g., Laplace, AIC, BIC) for each fitted model and select the model with the smallest value.

- Step 4 - Result Interpretation: Extract the mixture weights (pi) and the overdispersion parameter (theta) for the best model using the mixturewt() function. Assign samples to community types and inspect the top taxonomic drivers for each cluster [43].

Protocol 2: Simulating Dirichlet-Multinomial Data

Simulating data from the DMN distribution is valuable for method validation and power calculations [41].

- Step 1 - Define Parameters: Set the true parameters: the number of categories (k, e.g., tree species or bacterial taxa), the number of observations (n, e.g., forests or samples), the total count per observation (total_count), the expected fraction vector (true_frac), and the concentration parameter (true_conc).

- Step 2 - Draw Probabilities: For each observation i, simulate a probability vector p_i from a Dirichlet distribution: p_i ~ Dirichlet(α = true_conc * true_frac).

- Step 3 - Generate Counts: For each observation i, simulate a vector of counts from a Multinomial distribution: counts_i ~ Multinomial(n = total_count, p = p_i).
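
The three steps above can be sketched in a few lines of Python using only the standard library. The parameter values are made up for illustration, and `simulate_dmn` is a hypothetical helper name; in practice a numerical library would typically be used for the Dirichlet and Multinomial draws.

```python
import random

def simulate_dmn(n, total_count, true_frac, true_conc, seed=0):
    """Simulate an n-by-k count table from a Dirichlet-Multinomial distribution."""
    rng = random.Random(seed)
    k = len(true_frac)
    table = []
    for _ in range(n):
        # Step 2: a Dirichlet draw is a set of independent Gamma(alpha_j, 1)
        # draws divided by their sum, with alpha = true_conc * true_frac.
        gammas = [rng.gammavariate(true_conc * f, 1.0) for f in true_frac]
        total = sum(gammas)
        p_i = [g / total for g in gammas]
        # Step 3: a Multinomial draw is total_count categorical draws, tallied.
        draws = rng.choices(range(k), weights=p_i, k=total_count)
        counts_i = [0] * k
        for j in draws:
            counts_i[j] += 1
        table.append(counts_i)
    return table

# Step 1: made-up "true" parameters for 4 taxa and 10 samples.
true_frac = [0.5, 0.3, 0.15, 0.05]
table = simulate_dmn(n=10, total_count=1000, true_frac=true_frac, true_conc=5.0)
for row in table[:3]:
    print(row)
```

Lower values of `true_conc` produce more sample-to-sample variation around `true_frac`, which is the overdispersion a power simulation needs to reproduce.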

The following workflow diagram illustrates this data generation process:

Research Reagent Solutions

Table 1: Key Software and Packages for Dirichlet-Multinomial Analysis

| Tool Name | Function / Use-Case | Platform / Language |

|---|---|---|

| DirichletMultinomial [43] | Community typing (clustering) using Dirichlet Multinomial Mixtures (DMM). | R |

| dirmult [42] | Fitting DMN models and calculating likelihoods. | R |

| VGAM [42] | Fitting a wide range of vector generalized linear models, including the DMN. | R |

| PyMC [41] | Probabilistic programming for building complex Bayesian models, including custom DMN formulations. | Python |

| MicrobiomeAnalyst [25] | A comprehensive web-based platform for microbiome data analysis, including statistical and visualization tools. | Web |

Critical Considerations for Power Analysis

Integrating the DMN model into power analysis is essential for robust study design. The table below summarizes how different factors influence your power calculations.

Table 2: Factors Influencing Power in Microbiome Studies

| Factor | Impact on Power & Analysis | Recommendation |

|---|---|---|

| Overdispersion | Higher overdispersion requires a larger sample size to achieve the same power [40] [42]. | Use the DMN model to estimate the overdispersion parameter from pilot data for accurate sample size calculation. |

| Diversity Metric | Beta diversity metrics (e.g., Bray-Curtis) are often more sensitive to group differences than alpha diversity metrics, affecting required sample size [1]. | Pre-specify in your statistical analysis plan whether alpha or beta diversity is the primary outcome. Avoid "p-hacking" by trying multiple metrics. |

| Effect Size | The defined effect size (e.g., Cohen's d for alpha diversity) is highly sensitive to the chosen metric [1]. | Use pilot data to estimate the effect size for your specific metric of interest. |

| Sequencing Depth | Insufficient sequencing depth may lead to false zeros, increasing sparsity and affecting abundance estimates [2]. | Perform rarefaction or use normalization methods to account for variable library sizes. |

Frequently Asked Questions (FAQs)

Q1: Why is power analysis particularly crucial for microbiome studies compared to other types of clinical studies?

Microbiome data have intrinsic features that complicate classic sample size calculation, including high dimensionality, sparsity (many zero counts), compositionality, and phylogenetic relationships between taxa. A power analysis that accounts for these features ensures that your study has a sufficient sample size to detect the effects of interest, reducing the risk of false negatives and improving the reliability and generalizability of your findings [26] [1].

Q2: I am planning a study to see if a new drug alters the gut microbiome. Should I base my sample size on alpha diversity, beta diversity, or both?

Base your primary sample size calculation on a beta diversity metric. Empirical evidence shows that beta diversity metrics are generally more sensitive for detecting differences between groups (e.g., treatment vs. control) than alpha diversity metrics. Calculate the sample size for the beta diversity metric that best aligns with your biological hypothesis. However, also plan to report multiple diversity metrics to provide a comprehensive view of your results and avoid potential bias [1].

Q3: What is the practical difference between the Bray-Curtis dissimilarity and UniFrac distance for my power analysis?

The choice depends on what aspect of the microbiome you expect the drug to affect. The table below summarizes the core differences. You may need to calculate sample sizes for both if your hypothesis is not specific.

| Metric | Key Characteristics | Best Suited For |

|---|---|---|

| Bray-Curtis Dissimilarity [44] [1] | Quantifies compositional dissimilarity based on taxon abundance. Gives more weight to common species. | Detecting shifts in the abundance of common, dominant taxa. Often the most sensitive metric, leading to lower required sample sizes [1]. |

| Unweighted UniFrac [44] | Incorporates phylogenetic relationships and uses only presence/absence of taxa. | Detecting the introduction or loss of taxa, especially rare species. |

| Weighted UniFrac [44] | Incorporates phylogenetic relationships and the abundance of taxa. | Detecting changes that reflect both the identity and abundance of taxa, reducing the contribution of rare species. |

Q4: My pilot data has a very small sample size. How can I reliably estimate the effect size for a power analysis?

With a very small pilot study, estimating a precise effect size is challenging. In such cases, it is advisable to perform a sensitivity analysis: instead of calculating a single sample size, calculate the required sample size for a range of plausible effect sizes. This shows realistically which effects your study will be able to detect given resource constraints. Furthermore, base your effect size estimate on the same diversity metric you plan to use in your final analysis [1].
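
A sensitivity analysis of this kind can be sketched as follows for an alpha diversity outcome compared between two equal-sized groups. The sketch assumes a two-sided two-sample comparison and uses the standard normal approximation n = 2(z_{1-α/2} + z_{1-β})² / d² per group, which slightly underestimates the exact t-test requirement. The effect size grid is made up, and `n_per_group` is an illustrative name.

```python
import math
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided two-sample comparison
    with standardized effect size d (Cohen's d), via the normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)

# Made-up grid of plausible effect sizes, from small to large.
effect_sizes = [0.2, 0.35, 0.5, 0.8, 1.0]
sensitivity = {d: n_per_group(d) for d in effect_sizes}

for d, n in sensitivity.items():
    print(f"Cohen's d = {d:<4}  ->  about {n} samples per group")
```

Under these assumptions, d = 0.5 requires about 63 samples per group, while d = 0.2 requires several hundred, which illustrates why a single point estimate from a small pilot can be misleading.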

Troubleshooting Guide: Sample Size Calculation

Problem: Inconsistent sample size estimates when using different diversity metrics.

- Cause: This is expected. Different alpha and beta diversity metrics are mathematically distinct and capture different aspects of the microbial community (e.g., richness vs. evenness; abundance vs. phylogeny). They will naturally have different sensitivities to the effect you are studying [1].

- Solution: Pre-specify your primary outcome metric in your statistical analysis plan before conducting the power analysis. Base your final sample size decision on this primary metric. The workflow in the decision tree below is designed to help you make this choice systematically.

Problem: After collecting data, my PERMANOVA result for beta diversity is not significant, but my power analysis suggested it would be.

- Cause 1: Overestimated Effect Size. The effect size used in your power calculation, often derived from a small pilot study or published literature, may have been larger than the true effect in your population [1].

- Solution: Re-calculate the observed effect size from your collected data. Use this more accurate estimate for planning future studies.

- Cause 2: High Variability. Your actual samples may have higher within-group variability than anticipated, making it harder to detect a significant difference between groups.

- Solution: In your analysis, check for and control for confounding factors (e.g., BMI, diet, medication) that can increase variability in microbiome data [44].

Problem: I am using the Aitchison distance for my compositional data, but power calculation tools for it are scarce.

- Cause: The Aitchison distance, which uses a centered log-ratio (CLR) transformation, is a powerful but complex metric. Standard power calculation software may not implement it directly [45].

- Solution: Use a permutation-based power analysis. This involves using your pilot data to simulate new datasets under a specific effect size and then running the statistical test (e.g., PERMANOVA on Aitchison distance) on thousands of simulated datasets to estimate the proportion of times a significant effect is found (the power) [1].
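
For reference, the Aitchison distance itself is straightforward to compute: it is the Euclidean distance between CLR-transformed samples. The sketch below assumes zeros are handled by adding a pseudocount of 0.5 to every count, which is one common convention rather than something prescribed by the source. The function names are illustrative.

```python
import math

def clr(counts, pseudocount=0.5):
    """Centered log-ratio transform: log counts minus their mean log."""
    logs = [math.log(c + pseudocount) for c in counts]
    mean_log = sum(logs) / len(logs)
    return [v - mean_log for v in logs]

def aitchison_distance(x, y, pseudocount=0.5):
    """Euclidean distance between the CLR-transformed count vectors x and y."""
    cx, cy = clr(x, pseudocount), clr(y, pseudocount)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(cx, cy)))

# Made-up count vectors for two samples over four taxa.
sample_a = [120, 30, 0, 50]
sample_b = [80, 60, 10, 50]
print(round(aitchison_distance(sample_a, sample_b), 3))
```

A pairwise matrix of these distances can then be passed to the same simulation-based power procedure used for any other beta diversity metric.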

The Scientist's Toolkit: Essential Reagents & Materials

| Item | Function in Microbiome Research |

|---|---|

| DNA Stabilization Buffer (e.g., AssayAssure, OMNIgene·GUT) | Preserves microbial DNA at room temperature for a limited period when immediate freezing at -80°C is not feasible, critical for field studies [46]. |

| Sterile Collection Kits | Provides a standardized, contamination-free method for sample collection (e.g., stool, urine). Using sterile materials is vital to prevent contamination, especially in low-biomass samples like urine [46]. |

| DNA Isolation Kits | Extracts microbial DNA from samples. The choice of kit can impact DNA yield and quality, but many kits produce comparable results in downstream sequencing and diversity analysis [46]. |

| 16S rRNA Gene Primers (e.g., V1V2, V4) | Targets a specific variable region of the 16S rRNA gene for amplification prior to sequencing. Primer selection can influence species richness estimates and susceptibility to human DNA contamination [46]. |

Decision Tree for Calculation Method Selection

This decision tree will guide you in selecting the appropriate statistical metric for your hypothesis, which is the critical first step in performing a valid power and sample size calculation.

Experimental Protocol: Power Analysis for a Beta Diversity Metric

This protocol outlines the steps to perform a power analysis for a beta diversity metric using pilot data, based on a permutation-based method [1].

1. Define Hypothesis and Metric:

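- Clearly state your null and alternative hypotheses (e.g., null: "Gut microbiome community composition does not differ between the treatment and control groups"; alternative: "Gut microbiome community composition differs between the treatment and control groups").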

- Select your primary beta diversity metric (e.g., Bray-Curtis) using the decision tree above.

2. Acquire or Generate Pilot Data:

- Obtain a dataset with a similar population and sampling method as your planned study. This dataset should have at least a few samples per group. The effect size and variability observed in this pilot data will form the basis of your calculation.

3. Calculate the Observed Effect Size:

- For beta diversity, the effect size is not a single number like Cohen's d. Instead, it is embedded in the data structure. The permutation test will simulate data based on the effect observed in your pilot data.

4. Set Power Analysis Parameters:

- Significance Level (α): Typically set to 0.05.

- Desired Power (1-β): Typically set to 0.8 or 80%.

- Number of Permutations: Set a large number (e.g., 1,000 or 5,000) to ensure stable estimates.

5. Run the Permutation-Based Power Analysis:

- This is typically done using a script in R or Python. The general procedure is:

  - Input: Your pilot distance matrix and group labels.

  - For each proposed sample size (N):

    - Simulate a new dataset by randomly drawing N samples from your pilot data with replacement (bootstrapping), maintaining group proportions.

    - Optionally, introduce a small, systematic shift to the treatment group's data to represent your minimum effect of interest.

    - Perform the statistical test (e.g., PERMANOVA) on the simulated data.

    - Record whether the test result is significant (p-value < α).

    - Repeat this process thousands of times for the same N.

  - Power Calculation: The power for sample size N is the proportion of iterations that yielded a significant result.

6. Determine Sample Size:

- Plot the calculated power against the proposed sample sizes. The smallest sample size that meets or exceeds your desired power threshold (e.g., 0.8) is your recommended sample size.
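
The procedure in steps 5 and 6 can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the implementation from [1]: the pseudo-F statistic and label-permutation p-value follow the usual PERMANOVA definitions, groups are resampled to equal size, the optional effect shift is omitted, and the pilot distance matrix is made up. Iteration counts are kept small for speed; a real analysis would use far more.

```python
import random

def pseudo_f(dist, labels):
    """PERMANOVA pseudo-F from a square distance matrix and group labels."""
    n = len(labels)
    groups = sorted(set(labels))
    ss_total = sum(dist[i][j] ** 2 for i in range(n) for j in range(i + 1, n)) / n
    ss_within = 0.0
    for g in groups:
        idx = [i for i in range(n) if labels[i] == g]
        ss_within += sum(dist[i][j] ** 2
                         for pos, i in enumerate(idx) for j in idx[pos + 1:]) / len(idx)
    if ss_within <= 0:
        return float("inf")
    n_groups = len(groups)
    return ((ss_total - ss_within) / (n_groups - 1)) / (ss_within / (n - n_groups))

def permanova_p(dist, labels, n_perm, rng):
    """Permutation p-value: share of label shuffles with F >= the observed F."""
    f_obs = pseudo_f(dist, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if pseudo_f(dist, shuffled) >= f_obs:
            hits += 1
    return (hits + 1) / (n_perm + 1)

def estimate_power(pilot_dist, pilot_labels, n_per_group,
                   alpha=0.05, n_iter=200, n_perm=199, seed=1):
    """Fraction of bootstrap datasets of the given size that test significant."""
    rng = random.Random(seed)
    by_group = {}
    for i, g in enumerate(pilot_labels):
        by_group.setdefault(g, []).append(i)
    significant = 0
    for _ in range(n_iter):
        idx, labels = [], []
        for g, members in by_group.items():
            idx += rng.choices(members, k=n_per_group)  # draw with replacement
            labels += [g] * n_per_group
        boot = [[pilot_dist[i][j] for j in idx] for i in idx]
        if permanova_p(boot, labels, n_perm, rng) < alpha:
            significant += 1
    return significant / n_iter

# Made-up pilot data: 3 samples per group, within-group distance 0.2, between 0.8.
pilot_labels = ["control"] * 3 + ["treatment"] * 3
pilot_dist = [[0.0 if i == j else (0.2 if pilot_labels[i] == pilot_labels[j] else 0.8)
               for j in range(6)] for i in range(6)]

power_curve = {n: estimate_power(pilot_dist, pilot_labels, n, n_iter=20, n_perm=99)
               for n in (4, 8)}
print(power_curve)
```

Note that resampling with replacement duplicates pilot samples, which introduces zero distances and can make power estimates optimistic when the pilot is very small.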

Troubleshooting Guides & FAQs

R Package: micropower

Q1: I am getting unexpected results when simulating distance matrices with micropower. How can I ensure my within-group mean and standard deviation are accurately modeled?

A: This often arises from a mismatch between the subsampling (rarefaction) level or the number of OTUs used in simulations and your actual data. micropower relies on a two-step calibration process [47]:

- Determine Rarefaction Level: Use hashMean to simulate OTU tables across a range of subsampling levels (rare_levels). The correct level is the one where the mean of the simulated pairwise distances matches the mean from your real within-group distance matrix [47].

- Determine OTU Number: With the rarefaction level fixed, use simulations to find the number of OTUs that produces a standard deviation of pairwise distances matching your real data [47].

Workflow Diagram: micropower Parameter Calibration

Q2: My power analysis with micropower seems to underestimate the power. What could be the cause?